Timon Harz

December 18, 2024

Understanding the Mechanisms of Localized Receptive Field Emergence in Neural Networks: Insights into Deep Learning and Neural Processing

Explore how localized receptive fields are fundamental to neural network architectures and how understanding their development can optimize model efficiency. Discover the latest research and methods for improving feature extraction and network performance.

A key feature of peripheral responses in the animal nervous system is localization: the linear receptive fields of simple-cell neurons respond to specific, contiguous regions smaller than their entire input domain. Understanding this localization is crucial for unraveling neural information processing across sensory systems. Traditional machine learning methods typically generate weight distributions spanning entire input signals, in contrast to the localized processing strategies found in biological neural networks. This fundamental difference has driven researchers to develop artificial learning models capable of generating localized receptive fields from naturalistic stimuli.

To tackle the localization challenge in neural networks, existing research has explored various approaches. Sparse coding, independent component analysis (ICA), and related compression methods adopt a top-down strategy: they optimize explicit sparsity or independence criteria within critically parameterized regimes to create efficient input signal representations. By contrast, localized receptive fields have also been shown to emerge in simple feedforward neural networks trained on data models resembling natural visual inputs, without any such explicit criterion. Computational simulations reveal that these networks develop increased sensitivity to higher-order input statistics, with even single neurons learning localized receptive fields.

Researchers from Yale University and the Gatsby Unit & SWC at UCL have uncovered mechanisms behind the emergence of localized receptive fields. Building on previous work, they describe the principles that drive localization in neural networks. The study addresses challenges in analyzing higher-order input statistics using existing tools that typically assume Gaussianity. By separating the learning process into two distinct stages, the researchers developed analytical equations that capture early-stage learning dynamics in a single-neuron model trained on idealized naturalistic data. The proposed method offers a unique analytical model that succinctly explains the statistical structures driving localization.

The research focuses on a two-layer feedforward neural network with a nonlinear activation function and scalar output. This architecture's ability to learn rich features has made it a critical subject of theoretical neural network analyses, emphasizing its importance in understanding complex learning dynamics. The theoretical framework provides an analytical model for localization in a single-neuron architecture. The researchers identified the necessary and sufficient conditions for localization, initially demonstrated for a binary response scenario. These conditions were later validated empirically for a multi-neuron architecture. Additionally, the proposed architecture fails to learn localized receptive fields when trained on elliptical distributions.
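The architecture described above can be written down in a few lines. The following is a minimal NumPy sketch, not the researchers' code: the ReLU activation, the bias term, and the names `two_layer_forward`, `W`, `b`, and `a` are assumptions made for illustration, since the text only specifies a nonlinear activation and a scalar output. Using a single hidden unit gives the single-neuron case.

```python
import numpy as np

def two_layer_forward(x, W, b, a):
    """Scalar output of a two-layer feedforward network.

    x: (n_samples, n_inputs) input batch
    W: (n_hidden, n_inputs) first-layer weights; each row is one receptive field
    b: (n_hidden,) first-layer biases
    a: (n_hidden,) second-layer weights
    """
    hidden = np.maximum(x @ W.T + b, 0.0)  # ReLU is an assumed choice of nonlinearity
    return hidden @ a                      # one scalar per sample

# Single-neuron case: one hidden unit whose weight vector is the receptive field.
x = np.array([[1.0, -1.0, 0.5]])
W = np.array([[0.0, 0.0, 2.0]])  # all weight mass on one input: a localized field
out = two_layer_forward(x, W, b=np.zeros(1), a=np.ones(1))
print(out)
```

In this picture, asking whether a receptive field is localized amounts to asking whether a row of `W` concentrates its mass on a small contiguous set of inputs.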

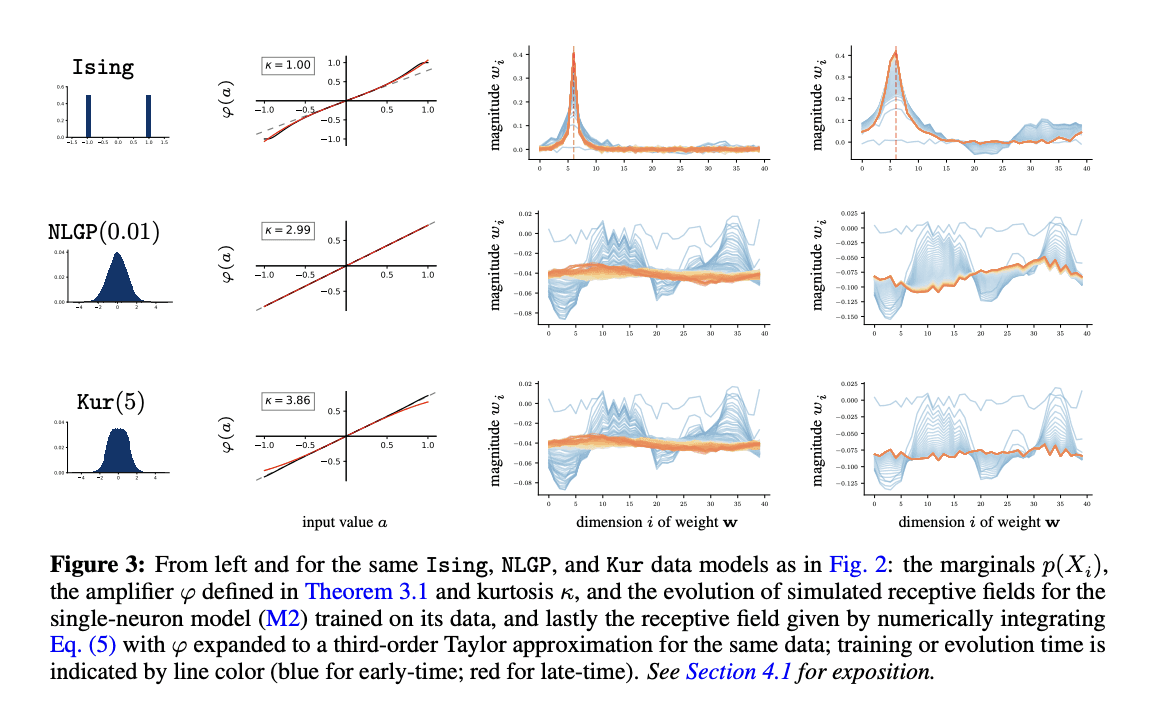

The findings offer valuable insights into the localization of neural network weights. When the data models NLGP(g) and Kur(k) are parameterized to produce negative excess kurtosis, the Inverse Participation Ratio (IPR) of the learned weights approaches 1.0, indicating highly localized weights. Positive excess kurtosis instead results in an IPR near zero, indicating non-localized weight distributions. In the Ising model, the integrated receptive field aligns with the peak position of the simulated field in 26 of 28 initial conditions (93%), highlighting excess kurtosis as a key driver of localization, largely independent of other data distribution properties.
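To make the IPR values quoted above concrete, here is a small NumPy sketch using the common definition of the inverse participation ratio, the sum of fourth powers of the weights divided by the squared sum of their squares. This definition and the function name `inverse_participation_ratio` are assumptions for illustration; the study may normalize the quantity differently.

```python
import numpy as np

def inverse_participation_ratio(w):
    """IPR = sum(w^4) / (sum(w^2))^2.

    Equals 1 when all weight mass sits on one input and 1/n when the
    mass is spread evenly over n inputs.
    """
    w = np.asarray(w, dtype=float)
    return np.sum(w**4) / np.sum(w**2) ** 2

n = 100
one_hot = np.zeros(n)
one_hot[40] = 1.0                                        # fully localized
flat = np.ones(n)                                        # fully delocalized
bump = np.exp(-0.5 * ((np.arange(n) - 40) / 3.0) ** 2)   # localized bump

for name, w in [("one_hot", one_hot), ("bump", bump), ("flat", flat)]:
    print(name, inverse_participation_ratio(w))
```

The one-hot vector gives an IPR of exactly 1, the flat vector gives 1/n (0.01 here), and the bump falls in between, which is why an IPR close to 1 is read as strong localization.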

In conclusion, the researchers emphasize the role of the analytical approach in understanding the emergence of localization in neural receptive fields. This method aligns with recent studies that position data-distributional properties as a key mechanism for complex behavioral patterns. By analyzing the learning dynamics, the researchers found that specific data properties, particularly covariance structures and marginals, are fundamental in shaping localization in neural receptive fields. They also noted that the current data model is a simplified abstraction of early sensory systems and acknowledged its limitations, such as the inability to capture orientation or phase selectivity. These insights pave the way for future research, including noise-based frameworks and expanded computational models.

In neural networks, particularly convolutional neural networks (CNNs), a receptive field refers to the specific region of the input data that a neuron or unit responds to. This concept is crucial for understanding how networks process localized information. Each neuron in a CNN is connected to a small, localized area of the input, allowing it to detect specific features such as edges, textures, or patterns within that region. As information progresses through deeper layers of the network, neurons aggregate information from larger portions of the input, enabling the network to recognize more complex and abstract features. This hierarchical processing is fundamental to the network's ability to understand and interpret visual data.

This localized processing underpins the network's ability to represent spatial hierarchies in data. By responding only to a small region of the input, each neuron can efficiently detect edges, textures, and patterns, which are essential for tasks like image recognition and classification.

Furthermore, the design and optimization of receptive fields are critical for the performance of deep learning models. Adjusting the size and structure of receptive fields can influence the network's ability to generalize and perform across various tasks. For instance, in image recognition, a well-designed receptive field allows the network to effectively capture spatial hierarchies and patterns, leading to improved performance. Conversely, an inadequately designed receptive field can result in the network missing essential features, thereby reducing its effectiveness.

In summary, receptive fields are a cornerstone of deep learning architectures, enabling models to process and understand spatial hierarchies in data. Their design and optimization are crucial for the efficiency and effectiveness of deep learning models, directly impacting their performance across various tasks.

Theoretical Foundations

The concept of receptive fields in neural networks draws significant inspiration from the human visual system, particularly the organization of simple cells in the primary visual cortex. In the visual cortex, simple cells are specialized neurons that respond to specific features of the visual input, such as edges, orientations, and spatial frequencies. These cells have receptive fields that are elongated and respond optimally to stimuli of a particular orientation and position within their receptive field. This organization allows the visual system to detect and process basic visual elements, which are then integrated to form more complex visual perceptions.

In artificial neural networks, particularly convolutional neural networks (CNNs), the concept of receptive fields is emulated to enable the network to process localized information effectively. Just as simple cells in the visual cortex respond to specific orientations and positions, neurons in CNNs are designed to respond to localized regions of the input data. This design allows the network to detect basic features such as edges and textures, which are essential for tasks like image recognition and classification. By emulating the hierarchical organization of the visual cortex, CNNs can build up complex representations from simple features, mirroring the way the human visual system processes visual information.

The hierarchical structure of receptive fields in the visual cortex, where simple cells feed into complex cells and then to higher-order neurons, has also influenced the design of neural network architectures. This hierarchical processing allows for the detection of increasingly complex features at higher levels of the network, similar to how the visual cortex processes information from simple features to complex visual perceptions. By incorporating this hierarchical structure, neural networks can achieve a high level of abstraction and generalization, leading to improved performance in tasks such as object detection and image segmentation.

In summary, the organization of simple cells in the visual cortex has profoundly influenced the development of receptive field concepts in neural networks. By emulating the hierarchical and localized processing found in the human visual system, artificial neural networks can effectively process and interpret visual information, leading to advancements in deep learning and neural processing.

As noted above, receptive fields in artificial networks mirror this biology: units respond to localized regions of the input, detect basic features like edges and textures, and pass them up a hierarchy that builds complex representations from simple ones.

Furthermore, techniques like Sparse Coding and Independent Component Analysis (ICA) are employed to generate efficient input signal representations by optimizing explicit sparsity or independence criteria within critically parameterized regimes. Sparse coding involves representing data as a sparse linear combination of basis functions, allowing for efficient data representation and feature extraction. ICA, on the other hand, seeks to decompose multivariate signals into additive, independent components, facilitating the identification of underlying sources in the data. These methods are closely related and have been applied in various domains, including image processing and neural network design, to enhance the efficiency and effectiveness of data representation and feature extraction.
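As a rough illustration of what "a sparse linear combination of basis functions" means in practice, the sketch below infers a sparse code for one signal given a fixed dictionary. It uses ISTA (iterative soft-thresholding) on an L1-penalized least-squares objective, which is one standard way to do this and not necessarily the method used in any work discussed here. The random dictionary, the penalty `lam`, and all function names are assumptions for illustration. ICA is not covered by this sketch.

```python
import numpy as np

def soft_threshold(v, t):
    """Shrink each entry of v toward zero by t, setting small entries to exactly 0."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def lasso_objective(x, D, a, lam):
    """0.5 * ||x - D a||^2 + lam * ||a||_1."""
    return 0.5 * np.sum((x - D @ a) ** 2) + lam * np.sum(np.abs(a))

def sparse_code_ista(x, D, lam=0.1, n_iter=200):
    """Infer coefficients a such that x is approximately D @ a with few nonzeros."""
    step = 1.0 / np.linalg.norm(D, 2) ** 2  # 1 / Lipschitz constant of the gradient
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ a - x)            # gradient of the reconstruction term
        a = soft_threshold(a - step * grad, step * lam)
    return a

rng = np.random.default_rng(0)
D = rng.standard_normal((20, 50))           # overcomplete dictionary (assumed random)
D /= np.linalg.norm(D, axis=0)              # unit-norm basis functions
a_true = np.zeros(50)
a_true[[3, 17, 41]] = [1.5, -2.0, 1.0]      # signal built from three basis functions
x = D @ a_true

a_hat = sparse_code_ista(x, D)
print("objective at zero:", lasso_objective(x, D, np.zeros(50), 0.1))
print("objective at a_hat:", lasso_objective(x, D, a_hat, 0.1))
```

The explicit L1 penalty is what makes this a top-down approach: sparsity is written into the objective rather than emerging from the data statistics.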

By integrating these biologically inspired concepts and techniques, neural networks can achieve more efficient and effective processing of complex data, leading to improved performance in tasks such as image recognition, signal processing, and other applications.

Emergence of Localized Receptive Fields in Neural Networks

In neural networks, whether localized receptive fields develop during training depends on the statistical properties of the input data. Natural images, for instance, exhibit non-Gaussian statistics: they contain higher-order correlations and structure that are not captured by the mean and covariance alone. These non-Gaussian features are crucial for the emergence of localized receptive fields in neural networks.
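One simple way to quantify departure from Gaussian statistics is excess kurtosis, which is zero for a Gaussian. The NumPy sketch below is only an illustration: passing Gaussian samples through `tanh` with a gain, and comparing against Laplace samples, are assumed toy examples and not the definition of any data model from the research discussed in this post.

```python
import numpy as np

def excess_kurtosis(x):
    """Fourth standardized central moment minus 3 (0 for a Gaussian)."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    variance = np.mean(centered**2)
    return np.mean(centered**4) / variance**2 - 3.0

rng = np.random.default_rng(0)
z = rng.standard_normal(200_000)

# A saturating nonlinearity with a small gain leaves the samples nearly Gaussian;
# a large gain pushes them toward +/-1, which drives excess kurtosis negative.
for gain in (0.01, 1.0, 50.0):
    print("tanh gain", gain, excess_kurtosis(np.tanh(gain * z)))

# Heavy-tailed samples have positive excess kurtosis.
heavy = rng.laplace(size=200_000)
print("laplace", excess_kurtosis(heavy))
```

The sign of this quantity is the distinction that matters for localization in the findings reported earlier: negative excess kurtosis goes with localized weights, positive with non-localized ones.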

Research indicates that when neural networks are trained on data models that approximate the structure of natural images, localized receptive fields can develop without explicit top-down efficiency constraints. This suggests that the inherent non-Gaussian statistics of the input data play a significant role in shaping the learning dynamics of the network, leading to the formation of localized receptive fields.

The presence of non-Gaussian statistics in the input data introduces higher-order correlations that the network can exploit during training. These correlations enable the network to detect and represent complex patterns and structures within the data, facilitating the emergence of localized receptive fields. This process aligns with the network's ability to adapt to the statistical properties of the input, leading to more efficient and effective feature representation.

Iterative Magnitude Pruning (IMP) is a technique that repeatedly removes the smallest-magnitude weights from a neural network, typically retraining between pruning rounds, to achieve sparsity. Recent research has demonstrated that applying IMP to fully connected neural networks (FCNs) can lead to the emergence of local receptive fields (RFs), a feature commonly found in the mammalian visual cortex and convolutional neural networks (CNNs).

IMP begins with a fully connected network in which each neuron is connected to every neuron in the adjacent layers. Iteratively pruning the smallest-magnitude weights gradually reduces the network's complexity and concentrates it on the most significant connections, producing localized patterns in the connectivity structure. These localized patterns resemble the receptive fields observed in CNNs and the visual cortex, where neurons respond to specific regions of the input space.
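The pruning loop just described can be sketched for a single weight matrix as follows. This is a hedged illustration, not the procedure from the research mentioned above: the function names, the `train_fn` callback, and the choice to restart each round from the initial weights are all assumptions, and the demo replaces training with a no-op so that only the masking logic is exercised.

```python
import numpy as np

def prune_smallest(weights, mask, fraction):
    """Return a new mask with the smallest-magnitude `fraction` of surviving weights removed."""
    alive = np.flatnonzero(mask)                      # indices of weights still present
    n_prune = int(fraction * alive.size)
    new_mask = mask.copy().ravel()
    if n_prune > 0:
        magnitudes = np.abs(weights.ravel()[alive])
        drop = alive[np.argsort(magnitudes)[:n_prune]]
        new_mask[drop] = False
    return new_mask.reshape(mask.shape)

def iterative_magnitude_pruning(init_weights, train_fn, rounds, fraction):
    """Alternate training and pruning; `train_fn(weights, mask)` is a hypothetical callback."""
    mask = np.ones_like(init_weights, dtype=bool)
    weights = init_weights.copy()
    for _ in range(rounds):
        # Assumed variant: restart from the initial weights under the current mask.
        weights = train_fn(init_weights * mask, mask) * mask
        mask = prune_smallest(weights, mask, fraction)
    return weights * mask, mask

rng = np.random.default_rng(0)
init = rng.standard_normal((16, 16))
no_training = lambda weights, mask: weights           # placeholder for a real training loop
pruned, mask = iterative_magnitude_pruning(init, no_training, rounds=3, fraction=0.25)
print("surviving weights:", int(mask.sum()), "of", mask.size)
```

With a real training function, inspecting each row of the final mask would show whether the surviving connections cluster on neighboring inputs, which is the local receptive field structure the text refers to.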

The emergence of local receptive fields through IMP suggests that pruning not only reduces the number of parameters but also induces a form of inductive bias that promotes localized processing. This finding aligns with observations in CNNs, where the architecture inherently supports the development of localized receptive fields due to its design. The ability of IMP to induce similar structures in FCNs highlights the potential of pruning techniques to shape the network's architecture in a manner that enhances its ability to process spatial information effectively.

In summary, applying Iterative Magnitude Pruning to fully connected neural networks facilitates the emergence of local receptive fields, a feature characteristic of both the mammalian visual cortex and convolutional neural networks. This process underscores the role of pruning in not only reducing network complexity but also in shaping the network's architecture to enhance its processing capabilities.

Implications for Deep Learning and Neural Processing

In neural network design, particularly within convolutional neural networks (CNNs), the dynamics of receptive fields significantly influence architectural decisions and optimization strategies.

The size and configuration of receptive fields are determined by architectural components such as kernel sizes, strides, and pooling operations. Adjusting these parameters allows for the control of the receptive field's extent, thereby influencing the network's capacity to capture spatial hierarchies and patterns. For instance, larger kernels or strides can increase the receptive field, enabling the network to capture broader contextual information. Conversely, smaller kernels or strides focus on finer details, enhancing the network's sensitivity to local features.
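The dependence on kernel sizes and strides can be made concrete with the standard recurrence for receptive field size in a stack of convolution and pooling layers. The sketch below is a simplified illustration: it treats pooling as a layer with its own kernel and stride, ignores dilation and padding, and the function name `receptive_field` is hypothetical.

```python
def receptive_field(layers):
    """Receptive field size, in input pixels, of one unit after a stack of layers.

    layers: list of (kernel_size, stride) pairs, ordered from input to output.
    """
    rf = 1    # a unit in the input sees exactly one pixel
    jump = 1  # distance in input pixels between adjacent units of the current layer
    for kernel_size, stride in layers:
        rf += (kernel_size - 1) * jump  # each extra kernel tap adds `jump` input pixels
        jump *= stride                  # striding spreads units further apart
    return rf

# Three 3x3 convolutions with stride 1 see a 7x7 input patch.
print(receptive_field([(3, 1), (3, 1), (3, 1)]))  # 7

# Inserting a 2x2 pooling layer with stride 2 widens what the next layer sees.
print(receptive_field([(3, 1), (2, 2), (3, 1)]))  # 8
```

Because the stride multiplies `jump`, early strided layers enlarge the receptive field of every later layer, which is why stride and pooling choices affect context size more strongly than kernel size alone.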

Recent research has explored methods to optimize receptive field configurations to improve network performance. For example, a study introduced a dynamic receptive field module that simulates the biological visual system's mechanism, allowing for global information-guided responses with minimal increases in parameters and computational cost. This approach highlights the potential of adaptive receptive field designs in enhancing feature extraction capabilities.

Additionally, the concept of receptive fields has been applied to pruning techniques. Applying Iterative Magnitude Pruning (IMP) to fully connected neural networks has been shown to lead to the emergence of local receptive fields, a feature present in both the mammalian visual cortex and CNNs. This finding underscores the impact of pruning strategies on the network's architectural dynamics and feature processing abilities.

In summary, receptive field dynamics are crucial in neural network architecture design and optimization. By understanding and manipulating these dynamics, researchers and practitioners can develop networks that more effectively process and interpret complex data, leading to improved performance across various tasks.

Future Directions

Advancements in understanding the emergence of localized receptive fields in neural networks have the potential to significantly influence future developments in neural network architectures. Receptive fields, which define the specific regions of input data that neurons respond to, are fundamental in enabling networks to process and interpret spatial hierarchies and patterns. A deeper comprehension of how these fields emerge and function can lead to more efficient and effective network designs.

Recent research has explored how neural networks can autonomously develop convolutional structures when trained on translation-invariant data. This self-organizing capability suggests that networks can learn to exploit the inherent symmetries in input data, leading to more efficient feature extraction and representation. Such insights could inform the design of architectures that inherently develop localized receptive fields, reducing the need for manual configuration and potentially enhancing performance across various tasks.

Additionally, studies have investigated the role of non-Gaussian statistics in the development of receptive fields. By understanding how higher-order correlations in input data influence receptive field formation, researchers can design networks that more effectively capture complex patterns and structures. This knowledge could lead to architectures that are better suited to handle the statistical properties of natural data, improving their generalization and robustness.

Furthermore, the application of pruning techniques like Iterative Magnitude Pruning (IMP) has been shown to induce the emergence of localized receptive fields in fully connected neural networks. This finding highlights the impact of network optimization strategies on the development of receptive fields and suggests that pruning can be a valuable tool in shaping network architectures to enhance feature processing capabilities.

Conclusion

Receptive fields are fundamental to neural networks, particularly in convolutional neural networks (CNNs), as they define the specific regions of input data that neurons respond to. This localized processing enables networks to detect and interpret various features, such as edges, textures, and patterns, which are essential for tasks like image recognition and classification.

Understanding the dynamics of receptive fields is crucial for designing efficient and effective neural network architectures. Adjusting the size and structure of receptive fields influences how well a network generalizes across tasks: a well-designed receptive field lets the network capture spatial hierarchies and patterns, while an inadequately designed one can cause it to miss essential features.

Understanding the emergence of localized receptive fields in neural networks is pivotal for advancing deep learning and neural processing. Receptive fields define the specific regions of input data that neurons respond to, enabling networks to detect and interpret various features essential for tasks like image recognition and classification. A deeper comprehension of how these fields emerge and function can lead to more efficient and effective network designs.

Additionally, the concept of receptive fields has been applied to pruning techniques. Applying Iterative Magnitude Pruning (IMP) to fully connected neural networks has been shown to lead to the emergence of local receptive fields, a feature present in both the mammalian visual cortex and CNNs. This finding underscores the impact of network optimization strategies on the development of receptive fields and suggests that pruning can be a valuable tool in shaping network architectures to enhance feature processing capabilities.

In summary, a deeper understanding of receptive field emergence holds promise for advancing neural network architectures. By leveraging insights from recent research, future developments could lead to more adaptive, efficient, and robust networks capable of effectively processing complex data.

Press contact

Timon Harz

oneboardhq@outlook.com
