Timon Harz

December 17, 2024

Technology Innovation Institute Launches Falcon 3: Open-Source AI Models with 30 New Checkpoints (1B to 10B)

Falcon 3 is a family of small AI models that combines strong benchmark performance with multilingual support. Its open availability makes it a practical choice for developers and businesses that want to build on advanced AI capabilities.

Advancing Large Language Models: The Launch of Falcon 3 by TII-UAE

Large language models (LLMs) have revolutionized industries, enabling advancements from automated content creation to breakthroughs in scientific research. Yet, significant challenges persist. Proprietary high-performing models limit transparency and access for researchers and developers. Open-source options, while promising, often face trade-offs between computational efficiency and scalability. Additionally, the lack of language diversity in many models hampers their usability across different regions and applications. These obstacles underscore the urgent need for open, efficient, and adaptable LLMs that excel across diverse use cases without incurring excessive costs.

Introducing Falcon 3: TII's Answer to Open-Source AI Challenges

The Technology Innovation Institute (TII) in the UAE addresses these challenges with Falcon 3, the latest release in its open-source LLM series. Falcon 3 comprises 30 model checkpoints ranging from 1 billion to 10 billion parameters. The release includes base models, instruction-tuned models, and several quantized versions: GPTQ-Int4, GPTQ-Int8, AWQ, and a 1.58-bit variant designed for maximum efficiency.

One standout feature of Falcon 3 is the inclusion of Mamba-based models, which use state-space models (SSMs) rather than attention to speed up inference, especially on long sequences. This makes Falcon 3 a practical option for developers seeking scalable and efficient LLMs.

A Commitment to Open Access and Integration

Falcon 3 is released under the TII Falcon-LLM License 2.0, which permits commercial use by businesses and developers. Its compatibility with the Llama architecture also simplifies integration into existing workflows, lowering the barrier to adoption.

With Falcon 3, TII continues to build open-source AI tools that combine performance, accessibility, and efficiency, setting a new benchmark for the development of large language models.

Technical Details and Key Benefits of Falcon 3

Falcon 3 models are trained on an extensive dataset of 14 trillion tokens, representing a significant upgrade over previous versions. This large-scale training enhances the models’ generalization capabilities, ensuring consistent performance across a wide range of tasks. With support for a 32K context length (8K for the 1B model), Falcon 3 efficiently handles longer inputs, making it ideal for applications like summarization, document analysis, and chat-based systems.

Optimized Architecture for Performance and Efficiency

The 10B model keeps a Transformer-based architecture, with 40 decoder blocks and grouped-query attention (GQA) using 12 query heads. In GQA, several query heads share each key-value head, which shrinks the key-value cache and lowers inference latency with little effect on accuracy. Additionally, the 1.58-bit quantized models allow Falcon 3 to run on hardware-constrained devices, offering a cost-effective option for resource-sensitive deployments.
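To make the memory effect of grouped-query attention concrete, here is a rough back-of-the-envelope sketch of the key-value cache size at a 32K context. The 40 decoder blocks and 12 query heads come from the text above; the number of key-value heads (4), the head dimension (256), 16-bit cache values, and 32K meaning 32,768 tokens are assumptions made only for illustration.

```python
def kv_cache_bytes(layers, kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence."""
    # Factor 2: one tensor for keys and one for values in every layer.
    return 2 * layers * kv_heads * head_dim * seq_len * bytes_per_value


LAYERS = 40          # decoder blocks, from the article
QUERY_HEADS = 12     # query heads, from the article
KV_HEADS = 4         # assumption: not stated in the article
HEAD_DIM = 256       # assumption: not stated in the article
SEQ_LEN = 32 * 1024  # assumption: "32K" taken as 32,768 tokens

# Classic multi-head attention keeps one key-value head per query head.
mha = kv_cache_bytes(LAYERS, QUERY_HEADS, HEAD_DIM, SEQ_LEN)
# Grouped-query attention lets several query heads share one key-value head.
gqa = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, SEQ_LEN)

print(f"multi-head cache:    {mha / 2**30:.2f} GiB")
print(f"grouped-query cache: {gqa / 2**30:.2f} GiB")
print(f"reduction factor:    {mha / gqa:.1f}x")
```

Under these assumed values the cache shrinks by the ratio of query heads to key-value heads. The real figures depend on the actual model configuration.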

Enhanced Multilingual Support

Addressing the demand for multilingual capabilities, Falcon 3 supports four languages: English, French, Spanish, and Portuguese. This enhancement broadens its usability, making it more inclusive and practical for diverse global audiences.

Results and Benchmarks

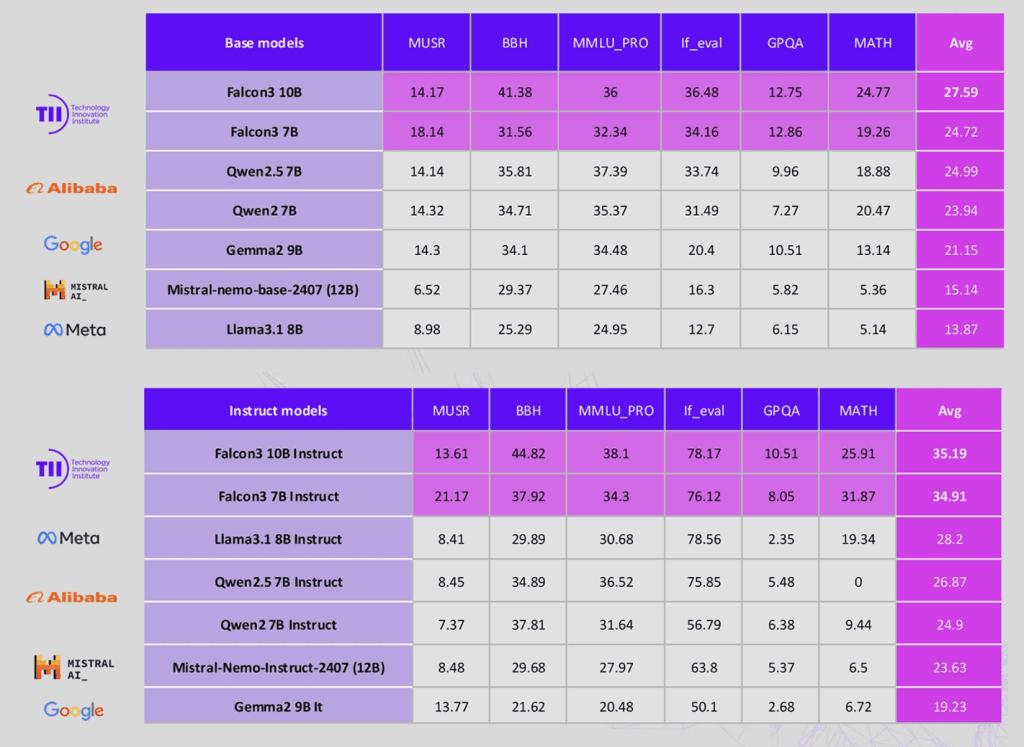

Falcon 3 demonstrates strong performance across key evaluation datasets:

GSM8K: Scored 83.1%, highlighting advanced mathematical reasoning and problem-solving skills.

IFEval: Achieved 78%, showcasing robust instruction-following abilities.

MMLU: Scored 71.6%, reflecting comprehensive general knowledge across multiple domains.

With these improvements, Falcon 3 sets a new standard for efficiency, inclusivity, and scalability in the realm of large language models.

Falcon 3: A Competitive and Accessible Open-Source LLM

Falcon 3 demonstrates strong competitiveness with other leading large language models (LLMs), with its open availability setting it apart. The expansion from 7B to 10B parameters has enhanced performance, particularly in tasks requiring complex reasoning and multitask understanding. Meanwhile, the quantized versions maintain similar capabilities while significantly reducing memory requirements, making them ideal for deployment in resource-constrained environments.

Accessibility and Integration

Falcon 3 is readily available on Hugging Face, providing developers and researchers with easy access to experiment, fine-tune, and deploy the models. Compatibility with popular formats such as GGUF and GPTQ ensures seamless integration into existing workflows and toolchains, reducing barriers to adoption.
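As a minimal sketch of what experimenting with a checkpoint from Hugging Face might look like, the snippet below uses the `transformers` library. The repository naming pattern (`tiiuae/Falcon3-<size>-<variant>`), the helper names, and the choice of the 1B instruction-tuned model are assumptions for illustration; check the model cards for the exact identifiers and recommended settings.

```python
def falcon3_repo_id(size: str, variant: str = "Instruct") -> str:
    """Build a Hub repository id such as 'tiiuae/Falcon3-7B-Instruct' (assumed naming)."""
    return f"tiiuae/Falcon3-{size}-{variant}"


def generate_reply(prompt: str, size: str = "1B", max_new_tokens: int = 128) -> str:
    """Download the model on first use and generate a reply to one user message."""
    # Imported lazily so the helper above works without these packages installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_id = falcon3_repo_id(size)
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype="auto")

    messages = [{"role": "user", "content": prompt}]
    # Format the conversation with the chat template shipped with the tokenizer.
    text = tokenizer.apply_chat_template(
        messages, tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(text, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens and decode only the newly generated part.
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


print(falcon3_repo_id("7B"))
```

Calling `generate_reply("Explain grouped-query attention in one sentence.")` would download the weights and run generation locally, so it needs `transformers`, PyTorch, and network access.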

Falcon 3 is a significant advancement in the open-source LLM landscape. With 30 model checkpoints—including base, instruction-tuned, quantized, and Mamba-based variants—it offers exceptional flexibility for diverse use cases. The model’s strong benchmark performance, efficiency, and multilingual support make it an invaluable tool for developers and researchers alike.

By focusing on accessibility and commercial usability, the Technology Innovation Institute UAE has established Falcon 3 as a practical, high-performing LLM ready for real-world applications. As AI adoption accelerates, Falcon 3 exemplifies how open, efficient, and inclusive models can empower innovation and unlock opportunities across industries.

The Technology Innovation Institute (TII), situated in Abu Dhabi, United Arab Emirates, stands as a premier global research center dedicated to advancing the frontiers of knowledge and technology. Established under the aegis of the Abu Dhabi Government's Advanced Technology Research Council (ATRC), TII serves as the applied research pillar of ATRC, focusing on transformative technologies that address real-world challenges.

TII's mission centers on fostering innovation through a multidisciplinary approach, bringing together a diverse team of scientists, researchers, and engineers from around the world. This collaborative environment is designed to be open, flexible, and agile, enabling the institute to swiftly adapt to emerging technological trends and challenges.

The institute comprises ten specialized research centers, each dedicated to a specific domain of advanced technology:

Advanced Materials Research Center (AMRC): Focuses on developing new materials with unique properties for various applications.

AI and Digital Science Research Center (AIDRC): Concentrates on artificial intelligence and digital technologies to drive innovation in multiple sectors.

Autonomous Robotics Research Center (ARRC): Works on the development of autonomous robotic systems for diverse applications.

Biotechnology Research Center (BRC): Engages in cutting-edge research in biotechnology to address health and environmental challenges.

Cryptography Research Center (CRC): Specializes in developing advanced cryptographic techniques to enhance security in digital communications.

Directed Energy Research Center (DERC): Investigates directed energy technologies for defense and civilian uses.

Propulsion and Space Research Center (PSRC): Focuses on propulsion technologies and space exploration initiatives.

Quantum Research Center (QRC): Dedicated to quantum computing and related technologies.

Renewable and Sustainable Energy Research Center (RSERC): Works on sustainable energy solutions to address global energy needs.

Secure Systems Research Center (SSRC): Develops secure systems to protect information and infrastructure from cyber threats.

Each center operates with a high degree of autonomy, allowing for focused research and development in its own field. This structure enables TII to cover a broad spectrum of technological domains, ensuring comprehensive contributions to the global scientific community.

TII's commitment to open-source initiatives is exemplified by its development of advanced AI models, such as the Falcon series. These models are designed to be accessible to researchers and developers worldwide, fostering a collaborative environment that accelerates technological progress. The recent release of Falcon 3, featuring 30 new model checkpoints ranging from 1 billion to 10 billion parameters, underscores TII's dedication to pushing the boundaries of AI research and making sophisticated tools available to a broader audience.

In addition to its research endeavors, TII places a strong emphasis on the practical application of its innovations. By bridging the gap between theoretical research and real-world implementation, the institute ensures that its technological advancements have a tangible impact on industries and society at large. This approach aligns with the UAE's broader strategy to position itself as a global leader in technology and innovation.

TII's strategic location in Abu Dhabi allows it to leverage the UAE's robust infrastructure and forward-thinking policies that support research and development. The institute collaborates with international partners, including academic institutions, industry leaders, and government agencies, to enhance its research capabilities and extend its global reach.

Through its comprehensive research programs and commitment to innovation, the Technology Innovation Institute continues to contribute significantly to the global scientific community, driving advancements that address some of the most pressing challenges of our time.

The Technology Innovation Institute (TII) has unveiled Falcon 3, the latest advancement in its series of open-source large language models (LLMs). This release introduces a suite of models with parameter sizes of 1B, 3B, 7B, and 10B, each available in base and instruction-tuned versions, along with quantized variants optimized for deployment on resource-constrained systems.

Falcon 3 is engineered to run efficiently on lightweight infrastructure, including laptops, while retaining strong performance. This design choice broadens access to advanced AI capabilities, enabling more users to apply sophisticated language models in a variety of applications.

A significant enhancement in Falcon 3 is its support for multiple languages, specifically English, French, Spanish, and Portuguese. This multilingual capability broadens the model's applicability across diverse linguistic contexts, facilitating more inclusive AI solutions.

Upon its release, Falcon 3 achieved the top position on Hugging Face's global third-party LLM leaderboard, surpassing other open-source models of similar size, including Meta's Llama variants. Notably, the Falcon 3-10B model leads its category, outperforming all models under 13 billion parameters.

This achievement underscores Falcon 3's significance in the AI community, setting new performance standards for small LLMs and exemplifying TII's commitment to advancing open-source AI research. By providing high-performance models that are accessible and efficient, TII continues to foster innovation and collaboration within the global AI ecosystem.

Overview of Falcon 3

The Falcon 3 series, developed by the Technology Innovation Institute (TII), represents a significant advancement in open-source AI models. This family comprises 30 distinct model checkpoints, with parameter sizes ranging from 1 billion (1B) to 10 billion (10B). Each model is available in various configurations, including base versions, instruction-tuned variants, and quantized formats such as GPTQ-Int4, GPTQ-Int8, AWQ, and an innovative 1.58-bit version designed for efficiency.

The diverse range of model sizes and configurations within the Falcon 3 family caters to a wide array of applications and computational requirements. Smaller models, like the 1B and 3B parameter versions, are optimized for deployment on resource-constrained devices, including laptops and edge devices, enabling the integration of advanced AI capabilities into applications where computational resources are limited. Conversely, the larger models, such as the 7B and 10B parameter versions, offer enhanced performance suitable for more demanding tasks, including complex natural language understanding and generation.

A notable feature of the Falcon 3 models is their support for a 32K context length (8K for the 1B variant), allowing them to handle longer inputs efficiently. This capability is particularly beneficial for tasks like summarization, document processing, and chat-based applications.

The inclusion of instruction-tuned models within the Falcon 3 family enhances their ability to follow specific instructions and perform tasks with higher accuracy, making them suitable for applications requiring precise control over AI behavior. Additionally, the quantized versions of these models significantly reduce memory requirements, facilitating deployment in environments with limited hardware resources at only a small cost in performance.

By offering a comprehensive suite of models with varying parameter sizes and configurations, the Falcon 3 family provides developers and researchers with the flexibility to select models that best align with their specific needs, promoting the integration of AI across diverse sectors and applications.

The Falcon 3 series, developed by the Technology Innovation Institute (TII), offers a diverse range of models tailored to various AI applications. This series includes both base models and instruction-tuned variants, enhancing their adaptability across different tasks.

Instruction-tuned models are fine-tuned on datasets designed to improve their ability to follow specific instructions, making them more effective in applications requiring precise task execution. This tuning process enhances the models' performance in generating contextually appropriate and accurate responses, thereby broadening their utility in real-world scenarios.

In addition to the base and instruction-tuned models, Falcon 3 includes quantized versions in formats such as GPTQ-Int4 and GPTQ-Int8. Quantization reduces the precision of the model's weights, which lowers memory usage and computational demands without significantly compromising performance. GPTQ is a post-training quantization method for generative pre-trained Transformers: it converts the weights of an already trained model to low-bit integers, such as 4-bit or 8-bit, which facilitates deployment on devices with limited hardware resources.
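The memory saving from lower-precision weights can be estimated with simple arithmetic: parameters times bits per weight, divided by eight to get bytes. The sketch below uses nominal parameter counts and ignores quantization metadata (scales, zero points), activations, and the key-value cache, so treat the results as rough lower bounds rather than measured footprints.

```python
def weight_memory_gib(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in GiB."""
    return num_params * bits_per_weight / 8 / 2**30


# Nominal parameter counts; real checkpoints differ slightly.
SIZES = {"1B": 1e9, "3B": 3e9, "7B": 7e9, "10B": 10e9}
FORMATS = {"16-bit": 16, "Int8": 8, "Int4": 4, "1.58-bit": 1.58}

for name, params in SIZES.items():
    row = ", ".join(
        f"{fmt}: {weight_memory_gib(params, bits):.2f} GiB"
        for fmt, bits in FORMATS.items()
    )
    print(f"{name:>3} -> {row}")
```

Halving the bits per weight halves the estimate, which is why an Int4 checkpoint needs roughly a quarter of the memory of its 16-bit counterpart.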

A notable innovation in the Falcon 3 series is the introduction of a 1.58-bit quantized variant. This ultra-low precision model represents a significant advancement in model efficiency, enabling the deployment of sophisticated AI capabilities on even more constrained hardware environments. Such advancements are crucial for expanding the accessibility of AI technologies, particularly in settings where computational resources are at a premium.
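The figure 1.58 is approximately log2(3), the number of bits needed to encode a weight that can take only three values. The sketch below illustrates one way such ternary quantization can work, using an absolute-mean scale in the style of BitNet b1.58. The article does not describe the exact scheme used for Falcon 3, so this is an assumed illustration, and the function names and sample weights are hypothetical.

```python
import math


def ternary_quantize(weights):
    """Map each weight to -1, 0, or +1 plus one shared scale (absmean scheme)."""
    # Fall back to 1.0 if all weights are zero, to avoid dividing by zero.
    scale = sum(abs(w) for w in weights) / len(weights) or 1.0
    quantized = [max(-1, min(1, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate weights from the ternary codes."""
    return [q * scale for q in quantized]


print(f"bits per ternary weight: {math.log2(3):.2f}")

weights = [0.42, -0.07, -0.91, 0.03, 0.55, -0.38]  # made-up sample values
codes, scale = ternary_quantize(weights)
print("codes: ", codes)
print("approx:", [round(v, 3) for v in dequantize(codes, scale)])
```

Small weights collapse to zero and large ones saturate at plus or minus one scale unit, which is why models in this format are typically trained or fine-tuned with the quantization in the loop rather than converted naively.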

By offering a spectrum of models—including base, instruction-tuned, and various quantized versions—Falcon 3 provides developers and researchers with the flexibility to select models that best align with their specific requirements. This versatility facilitates the integration of advanced AI functionalities across a wide range of applications and platforms, promoting broader adoption and innovation within the AI community.

The Falcon 3 series introduces Mamba-based models that utilize state-space models (SSMs) to enhance inference speed and performance.

Traditional Transformer architectures, which rely on attention mechanisms, often face challenges with computational efficiency, especially as sequence lengths increase. In contrast, SSMs offer a more efficient approach by maintaining linear computational complexity relative to sequence length. This efficiency enables Mamba-based models to process longer sequences more rapidly and with reduced memory requirements compared to their Transformer-based counterparts.
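The difference between quadratic and linear scaling is easy to see by counting operations. The sketch below compares the number of token pairs scored by causal self-attention with the number of state updates in a recurrent state-space scan. It counts only these units of work and ignores constant factors such as hidden size, state dimension, and optimized kernels, so it shows growth rates, not real timings.

```python
def attention_pair_count(seq_len: int) -> int:
    """Token pairs scored by causal self-attention over a full sequence."""
    # Each token attends to itself and to every earlier token.
    return seq_len * (seq_len + 1) // 2


def ssm_step_count(seq_len: int) -> int:
    """State updates performed by a recurrent state-space scan: one per token."""
    return seq_len


for n in (1_000, 8_000, 32_000):
    pairs = attention_pair_count(n)
    steps = ssm_step_count(n)
    print(f"{n:>6} tokens: attention pairs = {pairs:>12,}, "
          f"SSM steps = {steps:>6,}, ratio = {pairs / steps:,.0f}")
```

Doubling the sequence length roughly quadruples the attention count but only doubles the scan count.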

Because the Mamba architecture is attention-free, it carries a fixed-size state from token to token, so the cost of generating each new token stays constant regardless of how much context came before. This characteristic is particularly advantageous for applications involving extensive textual data, such as document summarization and real-time translation, where maintaining consistent performance across varying input sizes is crucial.
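The idea of a fixed-size state can be illustrated with a toy linear recurrence. This is a deliberately simplified, time-invariant scalar state-space model. Mamba itself uses vector-valued states and input-dependent (selective) parameters, so the code shows the general principle only, and the parameter values are arbitrary.

```python
def ssm_scan(inputs, a=0.9, b=0.1, c=1.0):
    """Run h_t = a * h_{t-1} + b * x_t and y_t = c * h_t over a sequence."""
    h = 0.0  # the entire memory of the past: a single number in this toy case
    outputs = []
    for x in inputs:
        h = a * h + b * x  # constant work per token, independent of position
        outputs.append(c * h)
    return outputs, h


short_out, short_state = ssm_scan([1.0] * 10)
long_out, long_state = ssm_scan([1.0] * 10_000)
print(f"state after 10 tokens:     {short_state:.4f}")
print(f"state after 10,000 tokens: {long_state:.4f}")
```

Whether the model has seen ten tokens or ten thousand, the next step reads and updates the same fixed-size state, whereas an attention layer would need to revisit a cache that grows with every token.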

By integrating Mamba-based models into the Falcon 3 lineup, TII provides developers and researchers with access to cutting-edge AI tools that deliver high performance while optimizing computational resources. This advancement supports the development of more efficient AI-driven solutions across diverse industries and applications.

Key Features and Innovations

The Falcon 3 series represents a significant advancement in AI model training, having been trained on an extensive dataset of 14 trillion tokens. This substantial increase in training data—more than double the 5.5 trillion tokens used for its predecessor, Falcon 2—has led to notable improvements in the model's performance across various benchmarks.

Training on a larger and more diverse dataset enables Falcon 3 to develop a deeper understanding of language patterns, nuances, and contextual relationships. This comprehensive training enhances the model's ability to generalize across a wide range of tasks, resulting in superior performance in areas such as reasoning, language understanding, instruction following, code generation, and mathematical problem-solving.

The impact of this extensive training is evident in Falcon 3's benchmark achievements. Upon its release, Falcon 3 secured the top position on Hugging Face's global third-party LLM leaderboard, surpassing other open-source models of similar size, including Meta's Llama variants. Notably, the Falcon 3-10B model leads its category, outperforming all models under 13 billion parameters.

These benchmark results highlight Falcon 3's enhanced capabilities and its potential to set new standards in AI performance. The model's ability to operate efficiently on light infrastructures, including laptops, further underscores its versatility and accessibility for a broad spectrum of applications.

The Falcon 3 series, developed by the Technology Innovation Institute (TII), represents a significant advancement in artificial intelligence, particularly in making sophisticated AI capabilities accessible to a broader audience. One of the most notable features of Falcon 3 is its ability to operate efficiently on light infrastructures, including laptops, without compromising performance.

This efficiency is achieved through meticulous optimization of the model's architecture and training processes. By reducing the computational demands typically associated with large language models, Falcon 3 enables users to leverage advanced AI functionalities without the need for high-end hardware. This democratization of AI technology allows individuals and organizations with limited computational resources to benefit from cutting-edge AI capabilities.

The Falcon 3 series includes four scalable models—1B, 3B, 7B, and 10B parameters—each designed to cater to different application needs. These models are available in both base and instruction-tuned versions, enhancing their versatility across various tasks. The instruction-tuned variants are particularly adept at understanding and executing specific instructions, making them valuable tools for a wide range of applications.

In addition to their efficiency, Falcon 3 models support multiple languages, including English, French, Spanish, and Portuguese, broadening their applicability in diverse linguistic contexts. The models also offer quantized versions, which are optimized for rapid deployment and integration into specialized architectures, ensuring that AI capabilities are accessible even in resource-constrained environments.

The release of Falcon 3 underscores TII's commitment to advancing AI technology and making it accessible to a global audience. By enabling the operation of powerful AI models on light infrastructures, Falcon 3 sets a new standard for AI accessibility, empowering users worldwide to harness the potential of advanced artificial intelligence.

The Falcon 3 series, developed by the Technology Innovation Institute (TII), introduces models with an extended context length of up to 32,000 tokens, significantly enhancing their capacity to process and understand lengthy texts. This capability is particularly advantageous for tasks such as summarization, document processing, and chat-based applications, where maintaining coherence over extended inputs is essential.

The 1B variant of Falcon 3 supports a context length of 8,000 tokens. Although shorter than the 32,000-token capacity of the larger models, this is still a substantial improvement over models with more limited context windows. It lets the 1B variant handle moderately long inputs effectively, making it suitable for a wide range of applications that process substantial amounts of text.

The ability to manage longer contexts allows Falcon 3 models to maintain coherence and relevance over extended passages of text, reducing the need for frequent restarts or truncation of input data. This feature is particularly beneficial in scenarios where understanding the full context is crucial, such as in legal document analysis, scientific research, and comprehensive content generation.
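When a document is longer than the context window, it has to be split or truncated. The sketch below shows one common approach, overlapping windows, and compares how many pieces a 50,000-token input needs at each context size. The function name, the overlap value, and the reading of 8K and 32K as 8,192 and 32,768 tokens are assumptions for illustration. A real pipeline would also reserve room for the prompt and the generated output.

```python
def split_into_windows(tokens, context_length, overlap=0):
    """Split a token sequence into windows that each fit the context length."""
    if not 0 <= overlap < context_length:
        raise ValueError("overlap must be non-negative and smaller than context_length")
    step = context_length - overlap
    windows = []
    for start in range(0, len(tokens), step):
        windows.append(tokens[start:start + context_length])
        if start + context_length >= len(tokens):
            break  # the last window already reaches the end of the input
    return windows


document = list(range(50_000))  # stand-in for 50,000 token ids
for name, limit in (("8K", 8 * 1024), ("32K", 32 * 1024)):
    windows = split_into_windows(document, limit, overlap=256)
    print(f"{name} context: {len(windows)} window(s)")
```

Fewer windows mean fewer places where the model loses sight of earlier text, which is the practical benefit of the longer context.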

By supporting extended context lengths, Falcon 3 models enhance the efficiency and effectiveness of AI-driven solutions across various domains, enabling more accurate and contextually aware outputs. This advancement represents a significant step forward in the development of large language models, broadening their applicability and utility in real-world applications.

Performance and Benchmarking

The Falcon 3 series, developed by the Technology Innovation Institute (TII), has achieved a remarkable milestone by securing the top position on Hugging Face's Open LLM Leaderboard upon its release. This accomplishment underscores Falcon 3's exceptional performance and its ability to surpass other open-source large language models (LLMs) of similar size, including Meta's Llama variants.

Hugging Face's Open LLM Leaderboard serves as a comprehensive platform for evaluating and comparing the performance of various open-source LLMs across multiple benchmarks. Models are assessed on tasks such as text generation, question-answering, and reasoning, providing a transparent and standardized metric for AI model performance. Achieving the number one position on this leaderboard signifies that Falcon 3 outperforms its peers in these critical areas, highlighting its advanced capabilities and efficiency.

The Falcon 3 series comprises models with parameter sizes ranging from 1 billion to 10 billion, designed to cater to diverse application needs. These models are available in both base and instruction-tuned versions, enhancing their versatility across various tasks. The instruction-tuned variants are particularly adept at understanding and executing specific instructions, making them valuable tools for a wide range of applications. The achievement of the top position on the Hugging Face leaderboard by Falcon 3, especially considering its parameter size, underscores the model's efficiency and effectiveness in handling complex tasks.

This accomplishment is particularly noteworthy when compared to other open-source models of similar size, such as Meta's Llama variants. While these models have made significant contributions to the AI community, Falcon 3's performance on the Hugging Face leaderboard demonstrates its superior capabilities in understanding and generating human-like text. This positions Falcon 3 as a leading choice for developers and researchers seeking high-performance AI models for a variety of applications.

The success of Falcon 3 on the Hugging Face leaderboard reflects the training and optimization work undertaken by TII. Falcon 3 was trained on an extensive dataset of 14 trillion tokens and optimized to perform well across a range of benchmarks. This extensive training enables it to understand and generate human-like text accurately, making it a valuable tool for tasks such as text generation, summarization, and question-answering.

In summary, Falcon 3's achievement of the number one position on Hugging Face's Open LLM Leaderboard highlights its exceptional performance and positions it as a leading open-source AI model in the current landscape. Its ability to surpass other models, including Meta's Llama variants, underscores its advanced capabilities and efficiency, making it a valuable asset for a wide range of applications in the AI community.

The Falcon 3-10B model, developed by the Technology Innovation Institute (TII), has achieved a significant milestone by leading its category and outperforming all models under 13 billion parameters. This accomplishment underscores Falcon 3-10B's exceptional performance and positions it as a leading open-source large language model (LLM) in its class.

Upon its release, Falcon 3-10B secured the top position on Hugging Face's global third-party LLM leaderboard, surpassing other open-source models of similar size, including Meta's Llama variants. This achievement highlights Falcon 3-10B's advanced capabilities and efficiency in handling complex tasks.

The Falcon 3 series includes models with parameter sizes ranging from 1 billion to 10 billion, designed to cater to diverse application needs. The 10B variant, in particular, has demonstrated superior performance across various benchmarks, including reasoning, instruction following, code generation, and mathematics tasks. This positions Falcon 3-10B as a valuable tool for developers and researchers seeking high-performance AI models for a wide range of applications.

The success of Falcon 3-10B on the Hugging Face leaderboard reflects the training and optimization work undertaken by TII. Falcon 3-10B was trained on an extensive dataset of 14 trillion tokens and optimized to perform well across a range of benchmarks. This extensive training enables it to understand and generate human-like text accurately, making it a valuable tool for tasks such as text generation, summarization, and question-answering.

In summary, Falcon 3-10B's achievement of leading its category and outperforming all models under 13 billion parameters underscores its exceptional performance and positions it as a leading open-source AI model in the current landscape. Its ability to surpass other models, including Meta's Llama variants, highlights its advanced capabilities and efficiency, making it a valuable asset for a wide range of applications in the AI community.

Implications for the AI Community

The Falcon 3 series, developed by the Technology Innovation Institute (TII), has set new performance standards for small language models, balancing computational efficiency with high performance. This achievement is particularly significant given the increasing demand for AI models that can operate effectively in resource-constrained environments, such as laptops and edge devices.

One of the standout features of Falcon 3 is its ability to deliver high performance while maintaining a smaller model size. This efficiency is achieved through meticulous optimization of the model's architecture and training processes, enabling it to perform complex tasks with reduced computational resources. Such optimization is crucial for applications where computational power is limited, allowing users to leverage advanced AI capabilities without the need for high-end hardware.

In terms of performance, Falcon 3 has demonstrated superior results across various benchmarks. Notably, it achieved an 83.1% score on GSM8K, a benchmark that measures mathematical reasoning and problem-solving abilities. Additionally, it scored 78% on IFEval, showcasing its instruction-following capabilities, and 71.6% on MMLU, highlighting its solid general knowledge and understanding across domains.

These impressive results position Falcon 3 as a leading choice for developers and researchers seeking high-performance AI models that are both efficient and effective. Its ability to operate on light infrastructures, including laptops, democratizes access to advanced AI capabilities, making it a valuable tool for a wide range of applications.

Furthermore, Falcon 3's support for multiple languages, including English, French, Spanish, and Portuguese, enhances its usability across diverse linguistic contexts. This multilingual support broadens the model's applicability, enabling it to serve a global audience and cater to various language-specific tasks.

The open-source nature of Falcon 3, released under the TII Falcon-LLM License 2.0, plays a pivotal role in ensuring broad accessibility for developers and businesses, thereby fostering innovation and collaboration within the AI community. By adopting a permissive license model, Falcon 3 becomes a valuable resource for a diverse range of users, from independent developers to large enterprises, enabling them to integrate, modify, and deploy the model in various applications.

The TII Falcon-LLM License 2.0 is based on the Apache License Version 2.0, with specific modifications tailored to the objectives of the Technology Innovation Institute (TII). This license framework provides users with the freedom to use, modify, and distribute Falcon 3, while also incorporating an acceptable use policy that promotes the responsible use of AI. This policy ensures that the model is utilized ethically and aligns with best practices in AI development and deployment.

By releasing Falcon 3 under this open-source license, TII democratizes access to advanced AI capabilities, allowing a wide array of stakeholders to benefit from the model's features. Developers can leverage Falcon 3 to build innovative applications, businesses can integrate it into their products and services, and researchers can explore its potential in various domains. This openness not only accelerates the pace of innovation but also encourages a collaborative approach to AI development, where improvements and enhancements are shared across the community.

The open-source release of Falcon 3 also contributes to the broader AI ecosystem by setting a precedent for responsible AI development. The inclusion of an acceptable use policy within the license underscores the importance of ethical considerations in AI deployment, promoting practices that prioritize safety, fairness, and transparency. This approach aligns with global discussions on the need for responsible AI development and sets a standard for other organizations to follow.

Statements from TII Leadership

His Excellency Faisal Al Bannai, Secretary General of the Advanced Technology Research Council (ATRC) and Adviser to the UAE President for Strategic Research and Advanced Technology Affairs, has consistently emphasized the transformative power of artificial intelligence (AI) and the Technology Innovation Institute's (TII) commitment to accessible innovation. Reflecting on the release of Falcon 3, he stated, "The transformative power of AI is undeniable. Today, we advance our contributions to the AI community, particularly the open-source sector, with the release of the Falcon 3 family of text models. This launch builds upon the foundation we established with Falcon 2, marking a significant step toward a new generation of AI models. Our ongoing commitment to ensuring these powerful tools remain accessible to everyone, everywhere, reflects our dedication to global equity and inclusive innovation."

This statement underscores TII's dedication to advancing AI technology and making it accessible to a global audience, fostering innovation and collaboration within the AI community.

Dr. Najwa Aaraj, Chief Executive Officer of the Technology Innovation Institute (TII), has consistently emphasized the institute's dedication to pioneering research and the development of advanced AI models. Reflecting on the release of Falcon 3, she stated, "Our dedication to pioneering research and attracting top-tier talent has culminated in the development of Falcon 3. The result is a model that exemplifies our pursuit of scientific excellence, offering enhanced efficiency and setting new benchmarks in AI technology."

Her statement ties the release to TII's research agenda, presenting Falcon 3 as the product of sustained investment in research and talent, with efficiency as a central goal.

Availability and Access

Falcon 3, the latest iteration in the Technology Innovation Institute's (TII) open-source large language model (LLM) series, is now available for immediate download on platforms such as Hugging Face and FalconLLM.TII.ae. This release includes detailed benchmarks, providing users with comprehensive insights into the model's performance across various tasks.

Developers and researchers can access Falcon 3's models directly from Hugging Face, a leading platform for sharing and collaborating on machine learning models. The Falcon 3 collection on Hugging Face offers a range of models, including base and instruction-tuned versions, as well as quantized variants designed for efficient deployment in resource-constrained environments. These models are available in formats compatible with popular machine learning frameworks, facilitating seamless integration into existing workflows.
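As a rough illustration of the Hugging Face route, the sketch below loads one instruction-tuned checkpoint with the `transformers` library and generates a single reply. The repository id `tiiuae/Falcon3-1B-Instruct` and the helper names `build_messages` and `generate_reply` are assumptions made for this example rather than details from TII's documentation, so check the Falcon 3 collection page for the exact model names before running it.

```python
from typing import Dict, List

# Assumed repository id; verify it against the Falcon 3 collection on Hugging Face.
MODEL_ID = "tiiuae/Falcon3-1B-Instruct"


def build_messages(user_prompt: str, system_prompt: str = "") -> List[Dict[str, str]]:
    """Return a chat-style message list in the role/content format used by chat templates."""
    messages: List[Dict[str, str]] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def generate_reply(user_prompt: str, model_id: str = MODEL_ID, max_new_tokens: int = 128) -> str:
    """Download the checkpoint (network access required) and generate one reply."""
    # Imported lazily so build_messages can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Format the conversation with the chat template shipped with the tokenizer.
    inputs = tokenizer.apply_chat_template(
        build_messages(user_prompt),
        add_generation_prompt=True,
        return_dict=True,
        return_tensors="pt",
    )
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)

    # Decode only the newly generated tokens, skipping the prompt.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

Calling `generate_reply("Summarize Falcon 3 in one sentence.")` would download the weights on first use. Starting with the smallest checkpoint keeps that download and the memory footprint modest on a laptop.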

In addition to Hugging Face, Falcon 3 is accessible through TII's dedicated portal at FalconLLM.TII.ae. This platform provides users with direct access to the models, along with comprehensive documentation and resources to support effective utilization. The website also features detailed benchmarks, allowing users to evaluate the model's performance across various tasks and datasets.

The release of Falcon 3 underscores TII's commitment to advancing AI technology and making it accessible to a global audience. By providing open access to these models, TII fosters innovation and collaboration within the AI community, enabling developers and researchers to build upon the foundation established by Falcon 3. This approach aligns with TII's mission to democratize access to advanced AI capabilities, ensuring that powerful tools are available to everyone, everywhere.

For those interested in exploring Falcon 3's capabilities, the models are available for download and experimentation. Detailed benchmarks are provided to assist users in understanding the model's performance characteristics and potential applications. Whether you're a developer looking to integrate advanced AI into your applications or a researcher aiming to explore the latest advancements in LLMs, Falcon 3 offers a robust and accessible resource to support your endeavors.

The Technology Innovation Institute (TII) has introduced Falcon Playground, an interactive testing environment designed for end-users, programmers, coders, and researchers to explore Falcon 3 prior to its official release. This platform offers a unique opportunity to experiment with the model's capabilities and provide valuable feedback to the development team.

Falcon Playground serves as a dynamic space where users can engage with Falcon 3's features, test its performance across various tasks, and assess its integration potential within different applications. By participating in this testing phase, users contribute to refining the model, ensuring it meets the diverse needs of the AI community.

The feedback gathered through Falcon Playground is instrumental in identifying areas for improvement, optimizing performance, and enhancing user experience. This collaborative approach underscores TII's commitment to developing AI technologies that are both innovative and responsive to user requirements.

To access Falcon Playground and begin exploring Falcon 3, visit the official TII website. Detailed instructions on how to participate, along with guidelines for providing feedback, are available on the platform. Engaging with Falcon Playground not only allows users to experience the capabilities of Falcon 3 firsthand but also positions them at the forefront of AI innovation, contributing to the evolution of cutting-edge technologies.

By offering this testing environment, TII fosters a collaborative ecosystem where developers, researchers, and enthusiasts can collectively advance the field of artificial intelligence. This initiative reflects TII's dedication to open-source development and its belief in the power of community-driven innovation.

In summary, Falcon Playground provides a valuable platform for users to interact with Falcon 3, offering feedback that will shape the model's development and ensure it meets the high standards expected by the AI community. This initiative exemplifies TII's commitment to accessible innovation and its proactive approach to incorporating user insights into the development process.

Conclusion

The release of Falcon 3 marks a significant milestone in the evolution of artificial intelligence, particularly in enhancing both accessibility and performance. Developed by the Technology Innovation Institute (TII), Falcon 3 introduces a series of open-source large language models (LLMs) that set new benchmarks for small AI models. These models are designed to operate efficiently on light infrastructures, including laptops, thereby democratizing access to advanced AI capabilities.

One of the most notable changes in Falcon 3 is its training on 14 trillion tokens, roughly 2.5 times the 5.5 trillion tokens used for its predecessor. This larger training corpus underpins Falcon 3's benchmark results in mathematical reasoning, instruction following, and general knowledge. For instance, it achieved an 83.1% score on GSM8K, a benchmark for mathematical reasoning, and a 78% score on IFEval, which assesses instruction-following capabilities.

Falcon 3 is available in multiple variants, ranging from 1 billion to 10 billion parameters, and includes both base and instruction-tuned models. The instruction-tuned versions are particularly suited to conversational applications. Additionally, Falcon 3 supports a 32K context length (8K for the 1B variant), allowing it to process longer inputs in a single pass, which matters for tasks like summarization and document processing.
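To make the context-length figures concrete, here is a minimal sketch of how a long, already tokenized document might be split so that each piece fits the model's window. It assumes "32K" and "8K" mean 32,768 and 8,192 tokens, and the names `context_limit` and `split_for_context` are hypothetical helpers, not part of any Falcon tooling.

```python
from typing import List

# Assumed token limits: "32K" and "8K" are read as 32,768 and 8,192 tokens.
CONTEXT_TOKENS = {"1B": 8_192, "default": 32_768}


def context_limit(size: str) -> int:
    """Return the assumed context window for a model size label such as '1B' or '10B'."""
    return CONTEXT_TOKENS.get(size, CONTEXT_TOKENS["default"])


def split_for_context(
    token_ids: List[int], size: str, reserve_for_output: int = 512
) -> List[List[int]]:
    """Split token ids into chunks that leave room for the generated output."""
    budget = context_limit(size) - reserve_for_output
    if budget <= 0:
        raise ValueError("reserve_for_output leaves no room for input tokens")
    return [token_ids[i:i + budget] for i in range(0, len(token_ids), budget)]


if __name__ == "__main__":
    document = list(range(20_000))  # stand-in for real token ids
    print(len(split_for_context(document, "1B")))   # several chunks for the 1B model
    print(len(split_for_context(document, "10B")))  # fits in one chunk for larger models
```

Under these assumptions, a 20,000-token document needs to be chunked for the 1B variant but fits in one pass for the larger models, which is the practical difference the longer window makes for summarization.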

The open-source nature of Falcon 3, released under the TII Falcon-LLM License 2.0, ensures broad accessibility for developers and businesses. This approach fosters innovation and collaboration within the AI community, enabling users to integrate Falcon 3 into various applications and workflows without significant overhead.

Furthermore, Falcon 3's multilingual capabilities, supporting languages such as English, French, Spanish, and Portuguese, enhance its usability across diverse linguistic contexts. This inclusivity broadens the potential applications of Falcon 3, making it a valuable tool for global audiences.

In summary, Falcon 3's release signifies a substantial advancement in AI technology, offering enhanced performance, accessibility, and versatility. Its open-source nature and support for multiple languages and infrastructures make it a powerful tool for developers, researchers, and businesses worldwide, setting new standards for small LLMs and contributing to the broader AI community.

Falcon 3 introduces 30 model checkpoints ranging from 1 billion to 10 billion parameters, encompassing base and instruction-tuned models, as well as quantized versions like GPTQ-Int4, GPTQ-Int8, and an innovative 1.58-bit variant for enhanced efficiency. This diversity allows users to select models that best fit their specific computational resources and application requirements.
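A back-of-the-envelope calculation shows why the quantized variants matter when matching a model to available hardware. The sketch below estimates the size of the weights alone as parameters × bits per weight ÷ 8. The 16-bit baseline for unquantized checkpoints is an assumption, and real files will be somewhat larger because quantization formats store extra scaling data and inference also needs memory for activations and the attention cache.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Bit widths named in the article, plus an assumed 16-bit unquantized baseline.
BITS_PER_WEIGHT = {
    "16-bit (assumed baseline)": 16,
    "GPTQ-Int8": 8,
    "GPTQ-Int4": 4,
    "1.58-bit": 1.58,
}

if __name__ == "__main__":
    for label, bits in BITS_PER_WEIGHT.items():
        size_gb = weight_memory_gb(10e9, bits)  # the 10-billion-parameter model
        print(f"{label:>26}: ~{size_gb:.2f} GB")
```

By this estimate, the 10-billion-parameter model drops from about 20 GB at 16 bits to about 5 GB at 4 bits and about 2 GB at 1.58 bits, which is what brings it within reach of laptop-class memory.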

We encourage you to explore Falcon 3 and consider its potential applications in your respective fields. Whether you're involved in natural language processing, content generation, or any other AI-driven domain, Falcon 3 offers a robust and accessible resource to support your endeavors. By leveraging Falcon 3, you can contribute to the ongoing evolution of AI technologies and harness their capabilities to drive innovation and efficiency in your projects.

Press contact

Timon Harz

oneboardhq@outlook.com
