Timon Harz

December 18, 2024

Self-Calibrating Conformal Prediction for Improved Reliability and Uncertainty Quantification in Regression Tasks

Self-Calibrating Conformal Prediction (SC-CP) is a post-hoc method that pairs calibrated point predictions with prediction intervals, improving prediction accuracy and interval efficiency in challenging real-world applications. It is designed to balance model calibration, uncertainty quantification, and computational efficiency, so that its outputs can support reliable decision-making.

In machine learning, reliable predictions and uncertainty quantification are essential, especially in safety-critical fields like healthcare. Model calibration ensures that predictions match observed outcomes on average: among all cases that receive a given prediction, the mean outcome should equal that prediction. This reduces the risk of systematic over- or underestimation and fosters trust in decision-making. Conformal Prediction (CP), a model-agnostic and distribution-free approach, is widely used for uncertainty quantification. It generates prediction intervals that contain the true outcome with a user-defined probability. However, standard CP guarantees only marginal coverage: the probability holds on average over all inputs, not necessarily within any particular context. Achieving context-specific coverage, which accounts for particular decision-making scenarios, usually requires additional assumptions. Consequently, researchers have explored weaker but practical forms of conditional validity, such as prediction-conditional coverage.

Recent developments in conditional validity and calibration have led to new techniques, such as Mondrian CP, which applies context-specific binning or regression trees to generate prediction intervals. These methods often lack adequately calibrated point predictions and self-calibrated intervals. SC-CP improves on these methods by using isotonic calibration to discretize the predictor adaptively, resulting in better conformity scores, calibrated predictions, and self-calibrated intervals. Further methods like Multivalid-CP and difficulty-aware CP refine prediction intervals by conditioning on factors like class labels or prediction set sizes. While techniques like Venn-Abers calibration and its regression extensions have been explored, challenges remain in balancing model accuracy, interval width, and conditional validity without increasing computational costs.
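To make the Mondrian idea concrete, here is a minimal sketch of Mondrian split conformal prediction in which the categories are equal-count bins of the model's prediction. The function name, the absolute-residual score, and the fixed number of bins are illustrative assumptions; SC-CP differs in that it chooses the bins adaptively through isotonic calibration rather than fixing them in advance.

```python
import numpy as np


def mondrian_intervals(pred_cal, y_cal, pred_test, n_bins=4, alpha=0.1):
    """Mondrian split conformal intervals; categories are equal-count bins of the prediction."""
    # Bin edges at quantiles of the calibration predictions.
    edges = np.quantile(pred_cal, np.linspace(0, 1, n_bins + 1))
    # With interior edges only, np.digitize returns bin indices 0..n_bins-1.
    bins_cal = np.digitize(pred_cal, edges[1:-1])
    bins_test = np.digitize(pred_test, edges[1:-1])
    scores = np.abs(y_cal - pred_cal)  # absolute-residual nonconformity scores

    lower = np.empty(len(pred_test))
    upper = np.empty(len(pred_test))
    for b in range(n_bins):
        s = np.sort(scores[bins_cal == b])
        k = int(np.ceil((len(s) + 1) * (1 - alpha)))  # conformal rank within this bin
        q = s[k - 1] if k <= len(s) else np.inf  # too few points: unbounded interval
        in_bin = bins_test == b
        lower[in_bin] = pred_test[in_bin] - q
        upper[in_bin] = pred_test[in_bin] + q
    return lower, upper


# Toy example: noise grows with the prediction (heteroscedasticity).
rng = np.random.default_rng(0)
pred_cal = rng.uniform(0, 10, 2000)
y_cal = pred_cal + rng.normal(0, 0.1 + 0.2 * pred_cal)
pred_test = np.array([1.0, 9.0])
lower, upper = mondrian_intervals(pred_cal, y_cal, pred_test)
print(upper - lower)
```

Because each bin gets its own quantile, the interval around a high prediction comes out wider when the noise grows with the prediction, which a single marginal quantile cannot do.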

Researchers from the University of Washington, UC Berkeley, and UCSF have introduced Self-Calibrating Conformal Prediction (SC-CP), which combines Venn-Abers calibration with conformal prediction to deliver calibrated point predictions and prediction intervals with finite-sample validity, conditional on these predictions. By extending the Venn-Abers method from binary classification to regression tasks, this approach improves both prediction accuracy and interval efficiency. The SC-CP framework examines the relationship between model calibration and predictive inference, ensuring valid coverage while enhancing practical performance. Real-world experiments validate its effectiveness, offering a robust and efficient alternative to feature-conditional validity for decision-making tasks that require both point and interval predictions.

Self-Calibrating Conformal Prediction (SC-CP) extends traditional CP while keeping its finite-sample validity and post-hoc applicability, so it can be applied to an already-trained model. It incorporates Venn-Abers calibration, an extension of isotonic regression, to produce calibrated predictions for regression tasks. For a new input, Venn-Abers calibration considers each candidate value of the unknown outcome, refits isotonic regression on the calibration data augmented with the new point under that candidate, and records the resulting prediction. The resulting set of predictions is guaranteed to contain a perfectly calibrated point prediction. SC-CP then conformalizes these calibrated predictions, constructing intervals around them with quantifiable uncertainty. This balances calibration with predictive performance and is particularly helpful with small samples, where isotonic calibration can overfit: the width of the Venn-Abers set and the adaptive intervals both reflect this extra uncertainty.
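The Venn-Abers step can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the reference implementation: isotonic regression is written out with the pool-adjacent-violators algorithm, the unknown test outcome is imputed over a finite grid of hypothetical values (`y_grid`), and the helper names `isotonic_fit` and `venn_abers_regression` are ours.

```python
import numpy as np


def isotonic_fit(x, y):
    """Least-squares non-decreasing fit of y on x (pool-adjacent-violators, ties pooled)."""
    _, inv = np.unique(np.asarray(x, dtype=float), return_inverse=True)
    totals = np.bincount(inv, weights=np.asarray(y, dtype=float))  # sum of y per unique x
    counts = np.bincount(inv)  # number of points per unique x
    sums, sizes, widths = [], [], []  # per block: sum of y, #points, #unique x values
    for t, c in zip(totals, counts):
        sums.append(t)
        sizes.append(c)
        widths.append(1)
        # Pool adjacent blocks while the previous block's mean exceeds the current one's.
        while len(sums) > 1 and sums[-2] / sizes[-2] > sums[-1] / sizes[-1]:
            t2, c2, w2 = sums.pop(), sizes.pop(), widths.pop()
            sums[-1] += t2
            sizes[-1] += c2
            widths[-1] += w2
    level = np.repeat(np.array(sums) / np.array(sizes), widths)  # fitted value per unique x
    return level[inv]


def venn_abers_regression(f_cal, y_cal, f_test, y_grid):
    """Calibrated predictions at f_test, one per hypothetical outcome in y_grid."""
    preds = []
    for y in y_grid:
        # Refit the calibration with the test point added under the imputed outcome y.
        fitted = isotonic_fit(np.append(f_cal, f_test), np.append(y_cal, y))
        preds.append(fitted[-1])
    return np.array(preds)


# Toy example: the raw model f under-predicts; the true mean of y given f is 2f.
rng = np.random.default_rng(1)
f_cal = rng.uniform(0, 1, 500)
y_cal = 2 * f_cal + rng.normal(0, 0.2, 500)
y_grid = np.linspace(-0.5, 2.5, 61)
va_set = venn_abers_regression(f_cal, y_cal, 0.5, y_grid)
print(va_set.min(), va_set.max())
```

As the imputed outcome increases, the calibrated prediction at the test point can only stay the same or increase, so the set is conveniently summarized by its range. A wide range signals that the calibration itself is uncertain, typically because few calibration points share the test point's isotonic block.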

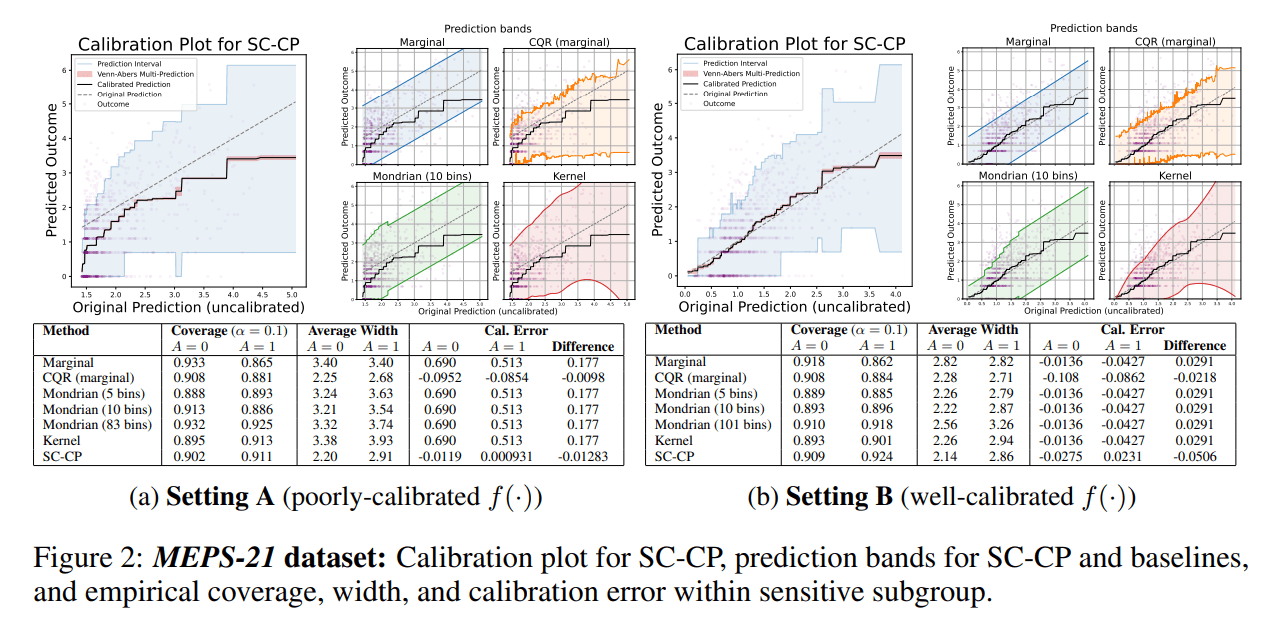

The Medical Expenditure Panel Survey (MEPS) dataset is used to predict healthcare utilization while assessing prediction-conditional validity across sensitive subgroups. It contains 15,656 samples with 139 features, including race as a sensitive attribute. The data are divided into training, calibration, and test sets, and XGBoost is used to train the initial model under two conditions: poorly calibrated (untransformed outcomes) and well calibrated (transformed outcomes). SC-CP is evaluated against Marginal, Mondrian, CQR, and Kernel methods. The results show that SC-CP produces narrower intervals, better calibration, and more equitable predictions, reducing calibration errors in sensitive subgroups. Unlike the baseline methods, SC-CP adapts effectively to heteroscedasticity, achieving better coverage and interval efficiency.
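Comparisons like this one rest on two per-subgroup metrics: empirical coverage and average interval width. A minimal sketch of both, assuming you already have interval endpoints from some method; the function name is hypothetical and the toy arrays are illustrative, not MEPS data.

```python
import numpy as np


def coverage_and_width(y, lower, upper, groups):
    """Empirical coverage and mean interval width, overall and per subgroup."""
    covered = (y >= lower) & (y <= upper)
    width = upper - lower
    report = {"overall": (covered.mean(), width.mean())}
    for g in np.unique(groups):
        in_group = groups == g
        report[g.item()] = (covered[in_group].mean(), width[in_group].mean())
    return report


# Toy arrays for illustration only (not MEPS data).
y = np.array([1.0, 2.0, 3.0, 4.0])
lower = np.array([0.5, 2.5, 2.0, 3.0])
upper = np.array([1.5, 3.5, 4.0, 5.0])
groups = np.array(["A", "A", "B", "B"])
report = coverage_and_width(y, lower, upper, groups)
# overall: coverage 0.75, width 1.5; group A: 0.5, 1.0; group B: 1.0, 2.0
```

Reporting the subgroup rows next to the overall row is what exposes a method that meets its marginal target while under-covering one group.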

In conclusion, SC-CP successfully combines Venn-Abers calibration with Conformal Prediction to provide calibrated point predictions and prediction intervals with finite-sample validity. By extending Venn-Abers calibration to regression tasks, SC-CP enhances prediction accuracy while improving interval efficiency and conditional coverage. Experimental results, especially on the MEPS dataset, demonstrate its ability to adapt to heteroscedasticity, produce narrower prediction intervals, and ensure fairness across subgroups. Compared with traditional methods, SC-CP offers a practical and computationally efficient solution, making it well suited to safety-critical applications that require reliable uncertainty quantification and trustworthy predictions.

Regression analysis is a fundamental statistical method used to examine the relationship between a dependent variable and one or more independent variables. In predictive modeling, it serves as a cornerstone for forecasting future outcomes based on historical data. By identifying patterns and associations within the data, regression models enable the prediction of continuous outcomes, making them invaluable across various fields such as finance, healthcare, and engineering.

The primary objective of regression analysis is to establish an equation that best represents the relationship between variables. This equation can then be utilized to predict the value of the dependent variable for new, unseen data. For instance, in finance, regression models can predict stock prices based on historical performance and economic indicators. In healthcare, they might forecast patient outcomes based on treatment variables and demographic information.

A common approach within regression analysis is linear regression, which assumes a straight-line relationship between the dependent and independent variables. This simplicity makes it a widely used technique for predictive modeling. However, when the relationship is more complex, nonlinear regression models are employed to capture the intricacies of the data.
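As a small illustration of linear regression, the snippet below fits an intercept and slope by ordinary least squares with NumPy on synthetic data (the true coefficients, 3 and 2, are chosen for the example) and then predicts the outcome for a new input.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(0, 10, 200)
y = 3.0 + 2.0 * x + rng.normal(0, 1.0, 200)  # synthetic data: intercept 3, slope 2

X = np.column_stack([np.ones_like(x), x])  # design matrix with an intercept column
coef, *_ = np.linalg.lstsq(X, y, rcond=None)  # ordinary least squares
intercept, slope = coef
y_new = intercept + slope * 5.0  # prediction for an unseen input x = 5
print(intercept, slope, y_new)
```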

In summary, regression analysis is a vital tool in predictive modeling, offering a systematic approach to understanding and forecasting relationships between variables. Its versatility and applicability across diverse domains underscore its importance in data-driven decision-making processes.

Ensuring reliable predictions and effectively quantifying uncertainty are critical challenges in regression modeling. Regression models aim to predict continuous outcomes based on input variables, but several factors can compromise their reliability and the accuracy of uncertainty estimates.

One significant challenge is overfitting, where a model captures noise in the training data rather than the underlying relationship between variables. This results in poor generalization to new, unseen data, leading to unreliable predictions. To mitigate overfitting, it's essential to use appropriate model complexity and employ techniques like cross-validation to assess model performance on independent datasets.
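Cross-validation can be sketched as follows: the data are split into k folds, the model is fitted on k - 1 of them and scored on the held-out fold, and the scores are averaged. This minimal example, with polynomial fits of different degrees on synthetic data, is an illustration rather than a recommended model-selection procedure; the function name is ours.

```python
import numpy as np


def kfold_mse(x, y, degree, k=5, seed=0):
    """Average held-out mean squared error of a polynomial fit, estimated by k-fold CV."""
    folds = np.array_split(np.random.default_rng(seed).permutation(len(x)), k)
    errors = []
    for i in range(k):
        held_out = folds[i]
        train = np.concatenate([folds[j] for j in range(k) if j != i])
        coefs = np.polyfit(x[train], y[train], degree)  # fit on k - 1 folds
        pred = np.polyval(coefs, x[held_out])  # score on the held-out fold
        errors.append(np.mean((y[held_out] - pred) ** 2))
    return float(np.mean(errors))


rng = np.random.default_rng(1)
x = rng.uniform(-1, 1, 40)
y = np.sin(3 * x) + rng.normal(0, 0.3, 40)
scores = {degree: kfold_mse(x, y, degree) for degree in (1, 3, 15)}
print(scores)
```

On data like this, the degree-3 fit typically has a lower held-out error than both the underfitting straight line and the overfitting degree-15 polynomial, even though the degree-15 polynomial fits its training folds best.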

Another issue is multicollinearity, which occurs when independent variables in a regression model are highly correlated. This correlation can make it difficult to determine the individual effect of each variable on the dependent variable, leading to unstable coefficient estimates and unreliable predictions. Detecting and addressing multicollinearity is crucial for improving model reliability.
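A common way to detect multicollinearity is the variance inflation factor (VIF): regress each feature on the others and compute 1 / (1 - R²). The sketch below does this with NumPy only, on synthetic features; the threshold often quoted as a warning sign (around 5 to 10) is a rule of thumb, not a hard cutoff.

```python
import numpy as np


def variance_inflation_factors(X):
    """VIF_j = 1 / (1 - R_j^2), where R_j^2 comes from regressing column j on the others."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    vifs = []
    for j in range(p):
        target = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])  # add an intercept
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        resid = target - others @ coef
        r2 = 1 - resid.var() / target.var()
        vifs.append(1 / (1 - r2))
    return np.array(vifs)


rng = np.random.default_rng(0)
x1 = rng.normal(size=500)
x2 = x1 + rng.normal(scale=0.1, size=500)  # nearly a copy of x1
x3 = rng.normal(size=500)  # independent of the others
vifs = variance_inflation_factors(np.column_stack([x1, x2, x3]))
print(vifs)
```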

Additionally, model uncertainty arises from the inherent limitations of the model itself, such as incorrect assumptions or simplifications. This uncertainty can lead to inaccurate predictions and unreliable uncertainty quantification. Incorporating uncertainty quantification methods, such as bootstrapping or Bayesian approaches, can help assess and account for model uncertainty, providing more reliable predictions.
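Bootstrapping can be illustrated with a simple linear model: refit it on many resamples of the data and look at how much its prediction at a given input varies. This minimal sketch uses synthetic data. Note that the resulting percentile interval describes uncertainty in the fitted mean at `x0`, not a prediction interval for a new outcome, which would also have to account for noise.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(0, 10, 100)
y = 1.0 + 0.5 * x + rng.normal(0, 1.0, 100)  # synthetic data; true mean at x0 = 8 is 5.0
x0 = 8.0

boot_preds = []
for _ in range(1000):
    idx = rng.integers(0, len(x), len(x))  # resample rows with replacement
    slope, intercept = np.polyfit(x[idx], y[idx], 1)  # refit the linear model
    boot_preds.append(intercept + slope * x0)

# Percentile interval for the fitted mean at x0 (not for a new observation).
lo, hi = np.percentile(boot_preds, [2.5, 97.5])
print(lo, hi)
```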

Furthermore, distribution shifts—changes in the data distribution over time or between training and testing phases—can affect the reliability of regression models. Models trained on one distribution may perform poorly when applied to data from a different distribution, leading to unreliable predictions. Regularly updating models and employing techniques that account for distribution shifts are essential for maintaining prediction reliability.

In summary, addressing challenges like overfitting, multicollinearity, model uncertainty, and distribution shifts is vital for ensuring the reliability of regression models and accurately quantifying uncertainty in their predictions.

Understanding Conformal Prediction

Conformal prediction is a statistical framework designed to quantify uncertainty in predictive models by generating prediction intervals that are valid under minimal assumptions. Unlike traditional methods that rely on specific distributional assumptions, conformal prediction offers a distribution-free approach, making it versatile across various applications.

The primary purpose of conformal prediction is to provide reliable measures of uncertainty for individual predictions. Using nonconformity scores computed on past data, it measures how well a new observation conforms to the data seen so far. The result is a prediction set or interval that contains the true outcome with a user-specified probability, so each prediction comes with an honest, quantified statement of its uncertainty.

This methodology is particularly advantageous in fields where understanding the reliability of predictions is crucial, such as healthcare, finance, and engineering. For instance, in medical diagnostics, conformal prediction can return a set of candidate diagnoses that contains the correct one with a specified probability; a larger set signals a less certain case, aiding clinicians in making informed decisions.

A common practical variant is split (or inductive) conformal prediction, which keeps the distribution-free coverage guarantee while requiring only a single model fit. It works as follows.

The process begins by fitting a predictive model on a training dataset. The fitted model is then applied to a separate calibration dataset, and for each calibration example a nonconformity score is calculated, which measures how different, or "nonconforming", the predicted value is from the actual observed value. These scores are then used to establish a threshold, an empirical quantile of the scores, that defines the acceptable level of nonconformity.

When making a prediction for a new, unseen instance, the model generates a prediction set or interval. This set includes all possible outcomes whose nonconformity scores are below the established threshold, ensuring that the true outcome is contained within the prediction set with a specified probability. This method provides a statistically valid measure of uncertainty for individual predictions, offering more informative insights than point estimates alone.
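The procedure just described is split conformal prediction. A minimal sketch with absolute residuals as nonconformity scores, assuming a model that was already fitted on separate training data (here a stand-in function `model`) and exchangeable calibration and test data; the function names are ours.

```python
import numpy as np


def split_conformal_interval(pred_cal, y_cal, pred_test, alpha=0.1):
    """Split conformal intervals with absolute residuals as nonconformity scores."""
    scores = np.sort(np.abs(y_cal - pred_cal))  # scores on held-out calibration data
    n = len(scores)
    k = int(np.ceil((n + 1) * (1 - alpha)))  # rank of the conformal quantile
    q = scores[k - 1] if k <= n else np.inf  # threshold; infinite if n is too small
    return pred_test - q, pred_test + q


def model(x):
    """Stand-in for a model already fitted on a separate training set."""
    return 2.0 * x


rng = np.random.default_rng(0)
x_cal, x_test = rng.uniform(0, 1, 1000), rng.uniform(0, 1, 5000)
y_cal = 2.0 * x_cal + rng.normal(0, 0.5, 1000)
y_test = 2.0 * x_test + rng.normal(0, 0.5, 5000)
lo, hi = split_conformal_interval(model(x_cal), y_cal, model(x_test))
coverage = np.mean((y_test >= lo) & (y_test <= hi))  # close to 0.9 in expectation
print(coverage)
```

The finite-sample correction in the rank, using (n + 1) rather than n, is what makes the guarantee hold in finite samples rather than only asymptotically. The interval width is the same for every input, which is the limitation that Mondrian and self-calibrating variants address.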

By leveraging past data to determine precise levels of confidence in new predictions, conformal prediction enables the creation of prediction sets that contain the true outcome with a user-specified probability, typically written as 1 − α, where α is the tolerated error probability. This approach is applicable to various predictive models, including nearest-neighbor methods, support vector machines, and regression models, thereby enhancing the reliability and interpretability of their predictions.
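In symbols, for split conformal prediction with absolute-residual scores and miscoverage level α (target coverage 1 − α), the construction and its guarantee read as follows, assuming the n calibration points and the test point are exchangeable; if k exceeds n, the threshold is taken to be infinite. The notation is ours.

```latex
\begin{aligned}
s_i &= \lvert Y_i - \hat f(X_i) \rvert, \qquad i = 1, \dots, n, \\
k &= \lceil (n+1)(1-\alpha) \rceil, \qquad \hat q = s_{(k)} \ \text{(the $k$-th smallest score)}, \\
\hat C(x) &= \big[\, \hat f(x) - \hat q,\ \hat f(x) + \hat q \,\big], \\
\mathbb{P}\big(Y_{n+1} \in \hat C(X_{n+1})\big) &\ge 1 - \alpha .
\end{aligned}
```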

The Concept of Self-Calibrating Conformal Prediction

In self-calibrating conformal prediction, "self-calibration" has two parts. First, the model's point predictions are recalibrated so that, among all inputs that receive a given prediction, the average observed outcome equals that prediction. Second, the prediction intervals are constructed so that their coverage guarantee holds conditional on this calibrated prediction, not only on average over all inputs. In regression tasks this is particularly valuable, because a purely marginal guarantee can hide systematic under-coverage for, say, unusually high or low predicted values.
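Informally, the two properties can be written as follows, where f is a point predictor, f_{n+1} denotes the calibrated predictor used for the test point, and C is the prediction interval. The notation is ours and may differ from the paper's.

```latex
\begin{aligned}
\text{calibrated point prediction:}\quad & \mathbb{E}\big[\, Y \mid f(X) \,\big] = f(X), \\
\text{self-calibrated interval:}\quad & \mathbb{P}\big( Y_{n+1} \in \hat C(X_{n+1}) \,\big|\, f_{n+1}(X_{n+1}) \big) \ge 1 - \alpha .
\end{aligned}
```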

A notable method for calibrating the point predictions is Venn-Abers calibration, which extends isotonic regression. For a new input, it refits the isotonic calibration once for each candidate value of the unknown outcome and records the calibrated prediction each time; the resulting Venn-Abers prediction set is guaranteed to contain a perfectly calibrated point prediction. By integrating Venn-Abers calibration with conformal prediction, the model can produce prediction intervals that are valid conditional on the calibrated prediction, offering a robust framework for uncertainty quantification in regression tasks.

Self-calibrating conformal prediction therefore works in two stages. Venn-Abers calibration first produces the calibrated point prediction; the spread of its prediction set indicates how uncertain the calibration itself is, which matters most when calibration data are scarce.

Conformal prediction then constructs an interval around that calibrated prediction. It assesses how well the new data point conforms to the calibration data and returns an interval that contains the true outcome with a user-specified probability, without assumptions about the data distribution.

By combining these methods, self-calibrating conformal prediction delivers calibrated point predictions alongside prediction intervals with finite-sample validity conditional on these predictions. This integration enhances the model's reliability and the accuracy of uncertainty quantification in regression tasks.
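Putting the two stages together, a simplified sketch of the SC-CP idea looks like this. It is an illustration under stated assumptions, not the algorithm shipped in the SelfCalibratingConformal package: outcomes are imputed over a finite grid (`y_grid`), isotonic regression is refitted for every imputed value, and a candidate value is kept when its residual is no larger than the conformal quantile of the residuals of calibration points that share the test point's calibrated value (its level set). The helper names are hypothetical.

```python
import numpy as np


def isotonic_fit(x, y):
    """Least-squares non-decreasing fit of y on x (pool-adjacent-violators, ties pooled)."""
    _, inv = np.unique(np.asarray(x, dtype=float), return_inverse=True)
    totals = np.bincount(inv, weights=np.asarray(y, dtype=float))  # sum of y per unique x
    counts = np.bincount(inv)  # number of points per unique x
    sums, sizes, widths = [], [], []  # per block: sum of y, #points, #unique x values
    for t, c in zip(totals, counts):
        sums.append(t)
        sizes.append(c)
        widths.append(1)
        # Pool adjacent blocks while the previous block's mean exceeds the current one's.
        while len(sums) > 1 and sums[-2] / sizes[-2] > sums[-1] / sizes[-1]:
            t2, c2, w2 = sums.pop(), sizes.pop(), widths.pop()
            sums[-1] += t2
            sizes[-1] += c2
            widths[-1] += w2
    level = np.repeat(np.array(sums) / np.array(sizes), widths)  # fitted value per unique x
    return level[inv]


def sc_cp_sketch(f_cal, y_cal, f_test, y_grid, alpha=0.1):
    """Venn-Abers range and a prediction-conditional conformal interval for one test point."""
    va_preds, accepted = [], []
    for y in y_grid:
        # Calibrate on the calibration data plus the test point with imputed outcome y.
        fitted = isotonic_fit(np.append(f_cal, f_test), np.append(y_cal, y))
        c = fitted[-1]  # calibrated prediction at the test point
        va_preds.append(c)
        # Mondrian step: use only calibration points in the test point's level set.
        same_level = fitted[:-1] == c
        scores = np.sort(np.abs(y_cal[same_level] - c))
        k = int(np.ceil((len(scores) + 1) * (1 - alpha)))
        q = scores[k - 1] if k <= len(scores) else np.inf
        if abs(y - c) <= q:  # conformal test for this candidate outcome
            accepted.append(y)
    va_range = (min(va_preds), max(va_preds))
    # Report the smallest and largest accepted grid values as the interval.
    interval = (min(accepted), max(accepted)) if accepted else (np.nan, np.nan)
    return va_range, interval


rng = np.random.default_rng(2)
f_cal = rng.uniform(0, 1, 1000)
y_cal = 2 * f_cal + rng.normal(0, 0.5, 1000)  # raw predictions are miscalibrated
y_grid = np.linspace(-2, 4, 121)
va_range, interval = sc_cp_sketch(f_cal, y_cal, 0.5, y_grid)
print(va_range, interval)
```

Refitting isotonic regression once per grid value is the main computational cost of this sketch. If the test point's level set contains too few calibration points for the requested level, the quantile is infinite and the sketch returns the whole grid.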

Advantages in Regression Tasks

Self-calibrating conformal prediction offers three practical advantages in regression tasks. First, its point predictions are calibrated: among inputs that receive a given prediction, the average observed outcome matches that prediction, which guards against systematic over- or underestimation. Second, its intervals are self-calibrated, meaning their coverage guarantee holds conditional on the calibrated prediction rather than only on average over all inputs. Third, because conformity is assessed within the level sets of the isotonic-calibrated predictor, interval widths can differ across predicted values, letting the method adapt to heteroscedastic noise; in the MEPS experiments this produced narrower intervals than the baseline methods.

Practical Applications

Conformal prediction methods, including self-calibrating conformal prediction, have been applied in various real-world scenarios, demonstrating their value for the reliability and uncertainty quantification of predictive models.

In industrial settings, Husqvarna Group utilized conformal prediction frameworks to address regression problems. By applying conformal prediction methods, they were able to improve the accuracy and reliability of their predictive models, leading to more informed decision-making processes.

Additionally, a study published on arXiv discusses the integration of Venn-Abers calibration with conformal prediction to provide calibrated point predictions and associated prediction intervals with finite-sample validity. This approach enhances the reliability of predictive models by ensuring that the prediction intervals contain the true outcome with a specified probability, even in finite samples.

These examples highlight the practical applications of self-calibrating conformal prediction in real-world scenarios, showcasing its potential to improve the reliability and uncertainty quantification of predictive models across various domains.

Self-calibrating conformal prediction offers significant advantages across various industries, including healthcare, finance, and engineering, by enhancing the reliability and uncertainty quantification of predictive models.

In healthcare, accurate predictions are crucial for patient outcomes. By integrating self-calibrating conformal prediction, medical professionals can obtain calibrated point predictions alongside prediction intervals with finite-sample validity. This approach ensures that the true outcome is contained within the prediction interval with a specified probability, thereby improving decision-making processes.

In the finance sector, where risk assessment and management are vital, self-calibrating conformal prediction enhances the reliability of financial models. By providing calibrated predictions and associated intervals, financial analysts can better quantify uncertainty, leading to more informed investment decisions and improved risk management strategies.

In engineering, particularly in predictive maintenance and quality control, self-calibrating conformal prediction improves the accuracy of models used to predict equipment failures or product defects. By delivering reliable uncertainty quantification, engineers can make more informed decisions regarding maintenance schedules and quality assurance processes, thereby reducing downtime and enhancing product quality.

In summary, the integration of self-calibrating conformal prediction across these industries leads to more dependable predictions and better uncertainty quantification, thereby enhancing decision-making processes and operational efficiency.

Implementation and Tools

Self-calibrating conformal prediction has been implemented in several software libraries, facilitating its application across various domains.

One notable implementation is the Python package "SelfCalibratingConformal," which provides tools for combining Venn-Abers calibration with conformal prediction. This package enables users to generate calibrated point predictions and prediction intervals with finite-sample validity. The repository includes code to reproduce experiments from the associated NeurIPS 2024 paper, offering practical examples for users.

Another relevant resource is the "PUNCC" (Predictive UNcertainty Calibration and Conformalization) library. This open-source Python library implements a range of state-of-the-art conformal prediction methods for predictive uncertainty quantification. PUNCC offers a collection of tools for model calibration and uncertainty estimation, supporting various machine learning applications.

Additionally, the "awesome-conformal-prediction" repository on GitHub curates a comprehensive list of resources related to conformal prediction, including videos, tutorials, books, papers, and open-source libraries. This compilation serves as a valuable starting point for exploring existing tools and implementations in the field.

These resources provide practical tools and frameworks for implementing self-calibrating conformal prediction, enabling researchers and practitioners to enhance the reliability and uncertainty quantification of their predictive models.

To implement self-calibrating conformal prediction in your projects, follow these steps:

Understand the Fundamentals: Familiarize yourself with the principles of conformal prediction and Venn-Abers calibration. A comprehensive tutorial is available in the paper "A Tutorial on Conformal Prediction," which provides a self-contained account of the theory and practical examples.

Set Up Your Environment: Ensure you have Python installed. You can download it from the official Python website.

Install the Necessary Package: Use pip to install the SelfCalibratingConformal package, which provides a Python implementation of self-calibrating conformal prediction. This package includes code to reproduce experiments from the associated NeurIPS 2024 paper.

Explore the Example Code: The package includes a vignette.ipynb file demonstrating the use of self-calibrating conformal prediction. This notebook guides you through model calibration, generating prediction intervals, and evaluating model coverage.

Integrate into Your Project: Adapt the example code to fit your specific dataset and modeling requirements. Ensure that your data is appropriately preprocessed and that the model is trained before applying the self-calibrating conformal prediction method.

Evaluate and Interpret Results: After implementing the method, assess the reliability and uncertainty quantification of your predictions. Compare the performance of your model with and without the self-calibrating conformal prediction to understand its impact.
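When comparing results with and without SC-CP, coverage and width describe the intervals, while a binned calibration error is one simple, commonly used diagnostic for the point predictions (not necessarily the metric used in the paper). The sketch below is an assumption-laden illustration: the function name is hypothetical, ten equal-count bins are an arbitrary choice, and `2.0 * f` plays the role of an ideally recalibrated predictor rather than actual SC-CP output.

```python
import numpy as np


def binned_calibration_error(pred, y, n_bins=10):
    """Weighted mean |average outcome - average prediction| over equal-count bins of pred."""
    order = np.argsort(pred)
    err = 0.0
    for b in np.array_split(order, n_bins):
        err += len(b) * abs(y[b].mean() - pred[b].mean())
    return err / len(pred)


rng = np.random.default_rng(0)
f = rng.uniform(0, 1, 5000)
y = 2.0 * f + rng.normal(0, 0.3, 5000)
raw_error = binned_calibration_error(f, y)  # raw predictions are off by a factor of 2
recal_error = binned_calibration_error(2.0 * f, y)  # an ideally recalibrated predictor
print(raw_error, recal_error)
```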

By following these steps, you can effectively incorporate self-calibrating conformal prediction into your projects, enhancing the reliability and uncertainty quantification of your predictive models.

Conclusion

Self-calibrating conformal prediction enhances the reliability and uncertainty quantification of predictive models by integrating Venn-Abers calibration with conformal prediction techniques. This combination ensures that both point predictions and their associated intervals are calibrated and valid under minimal assumptions.

Across application areas, these guarantees translate into concrete benefits: more trustworthy patient outcome predictions in healthcare, better-quantified risk for investment and risk-management decisions in finance, and better-informed maintenance schedules and quality-assurance processes in engineering.

Implementing self-calibrating conformal prediction is facilitated by Python packages such as "SelfCalibratingConformal," which provides tools for combining Venn-Abers calibration with conformal prediction. This package enables users to generate calibrated point predictions and prediction intervals with finite-sample validity.

Self-calibrating conformal prediction is an evolving field with promising avenues for future research and development. One significant direction is the extension of conformal prediction methods to handle more complex data structures, such as time-series and spatial data. This expansion would enable the application of self-calibrating conformal prediction in areas like financial forecasting and geospatial analysis, where uncertainty quantification is crucial.

Another promising area is the integration of self-calibrating conformal prediction with advanced machine learning models, including deep learning architectures. This integration could enhance the reliability and uncertainty quantification of predictions in complex models, thereby improving their applicability in critical domains like healthcare and autonomous systems.

Additionally, there is potential for developing more efficient algorithms to reduce the computational burden associated with conformal prediction methods. Improving computational efficiency would make these methods more accessible for real-time applications and large-scale data analysis.

Furthermore, exploring the combination of self-calibrating conformal prediction with other uncertainty quantification techniques could lead to more robust and reliable predictive models. Such interdisciplinary approaches may offer enhanced performance in various applications, including natural language processing and image analysis.

In summary, the future of self-calibrating conformal prediction holds significant promise, with ongoing research aimed at broadening its applicability, improving computational efficiency, and integrating it with other advanced methodologies to enhance predictive reliability and uncertainty quantification across diverse fields.

Press contact

Timon Harz

oneboardhq@outlook.com
