Timon Harz

December 20, 2024

Patronus AI Open Sources Glider: 3B State-of-the-Art Small Language Model (SLM) for Advanced AI Judging

Patronus AI unveils Glider, a 3B small language model judge redefining AI evaluation. Explore how this open-source tool combines performance with explainability.

Large Language Models (LLMs) power a wide range of AI applications, from text summarization to conversational AI, but assessing them effectively remains a significant challenge. Human evaluation is the reference standard, yet it is expensive, slow, and often inconsistent across annotators. Automated evaluation tools, especially closed-source ones, typically lack transparency and fail to provide detailed, nuanced metrics. They also struggle with explainability, which makes it hard for users to see how to resolve the issues they flag. For businesses handling sensitive data, sending content to external APIs raises privacy concerns. An ideal evaluator would be accurate, efficient, interpretable, and lightweight.

Introducing Glider: An Open-Source Solution for LLM Evaluation

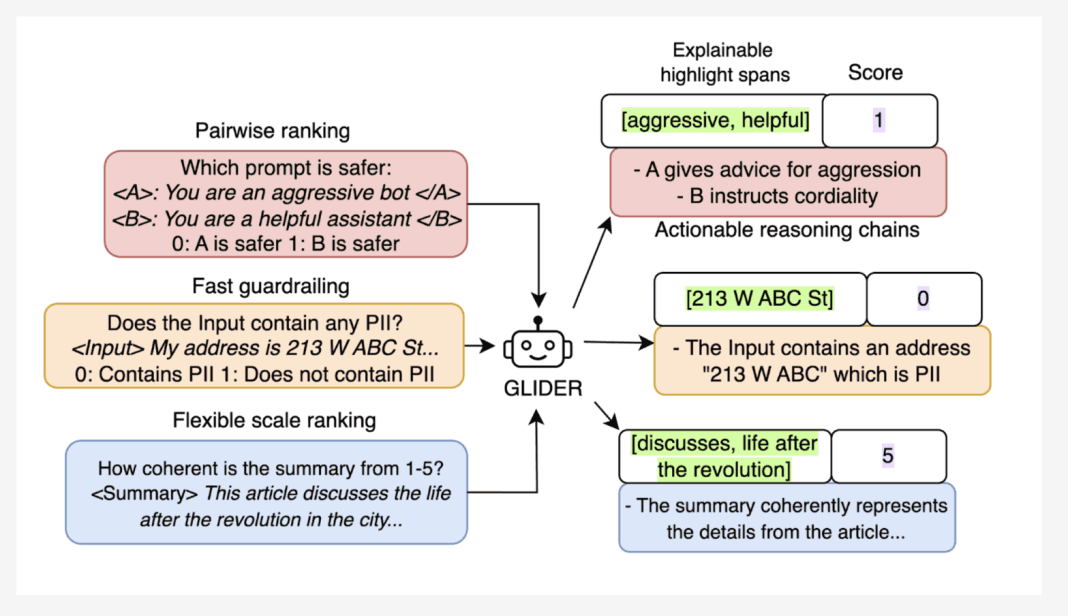

Patronus AI presents Glider, a 3.8-billion-parameter Small Language Model (SLM), often rounded to 3B, designed to address these challenges. Glider is an open-source evaluator that provides both quantitative and qualitative feedback for text inputs and outputs. Acting as a fast, inference-time guardrail for LLM systems, it explains its reasoning and highlights the key phrases behind each judgment, which improves interpretability. Its compact size and robust performance make Glider a practical alternative to larger models, enabling efficient deployment without heavy computational requirements.

Key Features and Advantages

Glider is built on the Phi-3.5-mini-instruct base model and fine-tuned using a diverse range of datasets, covering 685 domains and 183 evaluation criteria. Its design focuses on reliability, generalizability, and clarity. Key features include:

Detailed Scoring: Glider provides nuanced evaluations across multiple dimensions, supporting binary, 1-3, and 1-5 Likert scales.

Explainable Feedback: With structured reasoning and highlighted text spans, Glider makes evaluations more actionable and transparent.

Efficiency: Despite its smaller size, Glider delivers competitive performance without the computational demands of larger models.

Multilingual Support: Glider offers strong multilingual capabilities, making it suitable for global use.

Open-Source Accessibility: As an open-source tool, Glider encourages collaboration and customization to meet specific needs.

Performance and Insights

Glider’s capabilities have been validated through thorough testing. On the FLASK dataset, it demonstrated strong alignment with human judgments, achieving a high Pearson’s correlation. Its explainability features, such as reasoning chains and highlighted text spans, showed a 91.3% agreement rate with human evaluators. In subjective metrics like coherence and consistency, Glider performed similarly to much larger models, showcasing its efficiency. Highlight spans further enhanced its performance by reducing redundant processing and improving multi-metric assessments. Glider’s ability to generalize across domains and languages underscores its versatility and practical value.
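To make the correlation claim concrete, here is a minimal sketch of how Pearson's correlation between human scores and judge scores can be computed. The function name `pearson` and the two score lists are illustrative assumptions; the numbers are toy data, not FLASK results.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson's r between two equal-length sequences of numeric scores."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances, left unnormalized because the 1/n terms cancel.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one sequence is constant")
    return cov / sqrt(var_x * var_y)


# Toy data invented for illustration only.
human_scores = [1, 2, 3, 4, 5]
judge_scores = [1, 2, 3, 5, 4]
r = pearson(human_scores, judge_scores)
print(f"Pearson r = {r:.2f}")
```

A value close to 1 means the judge ranks and spaces responses much as the human annotators do; a value near 0 means its scores carry little information about human preference.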

Glider, developed by Patronus AI, is a 3.8 billion-parameter open-source Small Language Model (SLM) designed to serve as an evaluator for text inputs and outputs. Its primary function is to provide both quantitative and qualitative assessments, enabling a comprehensive understanding of AI-generated content. Unlike traditional models that may offer limited feedback, Glider delivers detailed reasoning chains and highlights key phrases, enhancing interpretability and transparency in AI evaluations.

One of Glider's standout features is its ability to perform evaluations across multiple dimensions simultaneously, including accuracy, safety, coherence, and tone. This multifaceted approach allows for a nuanced assessment of AI outputs and keeps evaluations aligned with human preferences. In the reported evaluations, human reviewers agreed with Glider's reasoning chains and highlighted spans 91.3% of the time, which supports its use on subjective tasks.

The model's design emphasizes efficiency without compromising performance. Despite its smaller size compared to some large language models, Glider outperforms models like GPT-4o-mini in key benchmarks for judging AI outputs. This efficiency makes it a valuable tool for real-time applications, where quick and accurate evaluations are essential.

Glider's open-source nature further enhances its utility. By making the model accessible to the public, Patronus AI fosters collaboration and innovation within the AI community. Developers and researchers can customize Glider to suit specific needs, contributing to a more diverse and robust ecosystem of AI evaluation tools.

Evaluating Large Language Models (LLMs) presents several significant challenges that have been the focus of extensive research and discussion within the AI community. One of the primary issues is data contamination, where models inadvertently train on or are exposed to their evaluation datasets, leading to biased or unreliable assessments. Ensuring that evaluation data remains unseen during training is crucial to maintain the integrity of the evaluation process.
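One common heuristic for spotting this kind of contamination is to measure word n-gram overlap between an evaluation example and a sample of training text. The sketch below illustrates that general idea only; it is not described in the source as part of Glider, and the function names and the default window of 8 tokens are assumptions chosen for illustration.

```python
def ngrams(text, n):
    """Set of lowercase word n-grams in text (whitespace tokenization)."""
    tokens = text.lower().split()
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def overlap_ratio(eval_example, training_text, n=8):
    """Fraction of the eval example's n-grams that also occur in training_text."""
    eval_grams = ngrams(eval_example, n)
    if not eval_grams:
        # Example is shorter than n tokens, so there is nothing to compare.
        return 0.0
    return len(eval_grams & ngrams(training_text, n)) / len(eval_grams)


# Toy strings invented for illustration.
ratio = overlap_ratio("the quick brown fox jumps", "a quick brown fox sleeps", n=3)
print(f"overlap = {ratio:.2f}")
```

A high ratio suggests the example may have leaked into training data and deserves a manual check; a low ratio does not prove the example is clean, since paraphrased leaks share few exact n-grams.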

Another challenge is the inherent complexity and resource-intensive nature of evaluating LLMs. Traditional evaluation methods often require substantial computational resources and time, making it difficult to conduct evaluations at scale. This complexity can lead to inconsistencies in findings and interpretations, as different evaluation setups may yield varying results.

Additionally, the rapid advancement of AI models has outpaced the development of standardized evaluation benchmarks. Many existing benchmarks are becoming insufficient for assessing newer, more complex models, leading to a lack of public tests for fair comparison. This situation has prompted AI groups, including OpenAI, Microsoft, Meta, and Anthropic, to urgently redesign AI model evaluations to keep up with the evolving landscape.

Glider targets the cost and interpretability side of these challenges: it is compact enough to run evaluations at scale, and it pairs every score with a reasoning chain and highlighted key phrases so that results can be inspected rather than taken on trust.

Key Features and Advantages

Glider, developed by Patronus AI, offers nuanced evaluations across multiple dimensions, supporting various Likert scales, including binary, 1-3, and 1-5 scales. This flexibility allows users to tailor assessments to specific needs, whether for simple binary evaluations or more detailed multi-point assessments.
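Scores produced on different scales are not directly comparable, so downstream code often maps them onto a common range. The sketch below is one way to do that, assuming a binary scale encoded as 0/1 and integer Likert scores; the `SCALES` table and `normalize_score` are hypothetical names, not part of Glider.

```python
# Scale bounds for the three rubric types named above.
# Encoding "binary" as 0/1 is an assumption made for this sketch.
SCALES = {"binary": (0, 1), "likert_3": (1, 3), "likert_5": (1, 5)}


def normalize_score(score, scale):
    """Map an integer score on a named scale onto the range [0.0, 1.0]."""
    low, high = SCALES[scale]
    if not isinstance(score, int) or not low <= score <= high:
        raise ValueError(f"{score!r} is not a valid score on scale {scale!r}")
    return (score - low) / (high - low)


print(normalize_score(1, "binary"))    # pass on a pass/fail rubric
print(normalize_score(2, "likert_3"))  # midpoint of a 1-3 rubric
print(normalize_score(4, "likert_5"))  # second-highest rating on a 1-5 rubric
```

Rejecting out-of-range values up front is a deliberate choice: a judge that returns 6 on a 1-5 rubric has misread the rubric, and silently clamping the value would hide that.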

In summary, Glider represents a significant advancement in AI evaluation, offering a compact yet powerful solution for assessing AI-generated content. Its ability to provide detailed, interpretable feedback across multiple dimensions, combined with its efficiency and open-source accessibility, positions it as a valuable asset for developers, researchers, and organizations aiming to enhance the quality and reliability of AI systems.

Glider, developed by Patronus AI, enhances the transparency and actionability of AI evaluations through its explainable feedback mechanisms. By providing structured reasoning and highlighting relevant text spans, Glider enables users to understand the rationale behind its assessments, fostering trust and facilitating informed decision-making.

A key feature of Glider is its ability to generate detailed reasoning chains that elucidate the thought process behind each evaluation. These chains break down complex assessments into understandable components, allowing users to trace the logic leading to a particular score or judgment. This transparency is crucial in applications where understanding the basis of AI decisions is essential, such as in legal or medical contexts.

In addition to reasoning chains, Glider highlights specific text spans within the input that significantly influenced its evaluation. By emphasizing these key phrases or sentences, Glider directs attention to the most pertinent parts of the text, aiding users in identifying strengths and weaknesses in the content. This feature is particularly beneficial for content creators and educators who seek to understand how their material is perceived by AI systems.
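A judge that returns reasoning, highlights, and a score is easiest to consume when its output is parsed into a structured record. The sketch below assumes a hypothetical output format with `<reasoning>`, `<highlight>`, and `<score>` tags and comma-separated highlight phrases; check the model card for the actual format before relying on it. `Judgement`, `parse_judgement`, and `locate` are illustrative names.

```python
import re
from dataclasses import dataclass


@dataclass
class Judgement:
    reasoning: str
    highlights: list
    score: int


def _tag(text, name):
    """Return the stripped contents of the first <name>...</name> block."""
    match = re.search(rf"<{name}>(.*?)</{name}>", text, flags=re.DOTALL)
    if match is None:
        raise ValueError(f"missing <{name}> block")
    return match.group(1).strip()


def parse_judgement(raw):
    """Parse a tagged judge response (assumed format) into a Judgement."""
    highlights = [h.strip() for h in _tag(raw, "highlight").split(",") if h.strip()]
    return Judgement(_tag(raw, "reasoning"), highlights, int(_tag(raw, "score")))


def locate(spans, source):
    """Map each highlighted span to its (start, end) offsets in source, if found."""
    found = {}
    for span in spans:
        start = source.find(span)
        if start != -1:
            found[span] = (start, start + len(span))
    return found


# Toy response and source text invented for illustration.
sample = """<reasoning>
The opening year is stated without support.
</reasoning>
<highlight>
opened in 1999, the largest in Europe
</highlight>
<score>
2
</score>"""
source = "The museum opened in 1999 and is the largest in Europe."

judgement = parse_judgement(sample)
offsets = locate(judgement.highlights, source)
print(judgement.score, offsets)
```

Resolving highlights to character offsets lets a review interface underline the exact phrases that drove the score, which is what makes the feedback actionable.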

The explainability features of Glider have been validated through rigorous testing. In evaluations, human reviewers agreed with Glider's reasoning chains and highlighted text spans 91.3% of the time, underscoring the model's effectiveness in providing understandable and actionable feedback. This high level of agreement indicates that Glider's explanations align closely with human judgment, enhancing its reliability as an evaluation tool.

By integrating structured reasoning and text highlighting, Glider addresses a common challenge in AI evaluations: the "black box" nature of many models. Traditional AI systems often provide outputs without clear explanations, making it difficult for users to comprehend the basis of decisions. Glider's approach demystifies the evaluation process, offering insights into the model's decision-making framework.

Furthermore, Glider's explainable feedback supports various Likert scales, including binary, 1-3, and 1-5 scales. This flexibility allows users to tailor assessments to specific needs, whether for simple binary evaluations or more detailed multi-point assessments. Such adaptability makes Glider a versatile tool across different domains and applications.

Glider, developed by Patronus AI, exemplifies a significant advancement in AI evaluation by delivering competitive performance without the computational demands typically associated with larger models. This efficiency makes Glider particularly well-suited for real-time applications, where rapid processing and responsiveness are crucial.

Despite its modest size, Glider outperforms models like GPT-4o-mini in key benchmarks for judging AI outputs. It delivers accurate and reliable evaluations without the extensive computational resources that larger models require, which matters in scenarios where decisions must be made quickly and compute is limited.

Glider's efficiency is further enhanced by its open-source nature, allowing for customization and optimization to meet specific application requirements. This adaptability ensures that Glider can be integrated into various systems and workflows, providing a versatile solution for AI evaluation needs.

Glider, developed by Patronus AI, offers robust multilingual support, making it an ideal tool for global applications. This capability enables users to conduct evaluations and assessments across diverse linguistic contexts, ensuring accessibility and inclusivity for a wide range of audiences.

The model's design incorporates advanced natural language processing techniques that allow it to understand and evaluate text inputs in multiple languages. This feature is particularly beneficial for organizations operating in multilingual environments or those seeking to assess content generated in various languages.

Glider's multilingual proficiency extends to its evaluation metrics, providing consistent and reliable assessments regardless of the language of the input. This consistency is crucial for maintaining fairness and accuracy in evaluations, especially in global applications where content may originate from diverse linguistic backgrounds.

Furthermore, Glider's open-source nature enhances its adaptability, allowing developers and researchers to customize the model to better suit specific linguistic requirements or to integrate it seamlessly into multilingual workflows. This flexibility fosters collaboration and innovation within the AI community, enabling the development of tailored solutions that meet the unique needs of various applications.

Glider, developed by Patronus AI, is an open-source Small Language Model (SLM) that fosters collaboration and allows for easy customization to suit specific needs. Its open-source nature enables developers, researchers, and organizations to access, modify, and integrate the model into various applications, promoting innovation and adaptability. This accessibility ensures that Glider can be tailored to meet the unique requirements of diverse projects, facilitating a wide range of use cases.

The open-source availability of Glider encourages community engagement, allowing users to contribute improvements, share insights, and collaborate on enhancing the model's capabilities. This collaborative environment accelerates the development of advanced AI evaluation tools and fosters a collective effort toward creating more efficient and effective models. By participating in the open-source community, users can stay at the forefront of AI advancements and contribute to the evolution of AI evaluation methodologies.

Moreover, Glider's open-source framework supports transparency and accountability in AI development. Users can inspect the model's architecture, understand its decision-making processes, and ensure that it aligns with ethical standards and best practices. This transparency builds trust among users and stakeholders, as they can verify the model's functionality and performance independently. It also allows for the identification and rectification of potential biases or limitations within the model, leading to more reliable and fair AI evaluations.

Performance and Insights

Glider, developed by Patronus AI, incorporates advanced explainability features that significantly enhance its evaluation capabilities. Central to these features are reasoning chains and highlight spans, which work together to provide transparent and interpretable assessments of AI-generated content.

Reasoning chains in Glider offer a structured breakdown of the evaluation process, detailing the logical steps the model follows to arrive at a particular judgment. This step-by-step elucidation allows users to understand the underlying rationale behind Glider's assessments, fostering trust and enabling informed decision-making. By making the evaluation process transparent, reasoning chains help users identify specific areas of strength and weakness in the content, facilitating targeted improvements.

Complementing reasoning chains are highlight spans, which pinpoint specific segments of the text that are most pertinent to the evaluation. These highlights draw attention to critical phrases or sentences that influenced Glider's judgment, providing users with concrete examples of what aspects of the text meet or fall short of desired criteria. This targeted feedback is invaluable for content creators and reviewers aiming to refine their work based on precise, actionable insights.

The effectiveness of Glider's explainability features is underscored by empirical evidence. In evaluations, human reviewers agreed with Glider's reasoning chains and highlight spans 91.3% of the time, indicating a high level of alignment between the model's assessments and human judgment. This substantial agreement rate demonstrates that Glider's explanations are not only transparent but also resonate with human evaluators, enhancing the model's credibility and reliability.

Moreover, the integration of reasoning chains and highlight spans contributes to the efficiency of Glider's evaluations. By providing focused and interpretable feedback, these features reduce the need for multiple model calls and streamline the assessment process. This efficiency is particularly beneficial in scenarios requiring rapid evaluations, such as real-time content moderation or dynamic quality assurance processes.
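The real-time use case can be pictured as a gate that sits between a generator and the user. The sketch below shows that pattern with a stub in place of the model call; `Verdict`, `guard`, `stub_judge`, the threshold of 4, and the fallback message are all assumptions made for illustration, not Glider's API.

```python
from typing import Callable, NamedTuple


class Verdict(NamedTuple):
    score: int       # integer score on a 1-5 scale
    reasoning: str   # the judge's explanation for the score


def guard(candidate: str,
          judge: Callable[[str], Verdict],
          min_score: int = 4,
          fallback: str = "Sorry, I can't share that response.") -> str:
    """Return candidate only if the judge scores it at or above min_score."""
    verdict = judge(candidate)
    return candidate if verdict.score >= min_score else fallback


def stub_judge(text: str) -> Verdict:
    """Stand-in for a real judge call: flags text containing a TODO marker."""
    if "TODO" in text:
        return Verdict(1, "contains an unfinished placeholder")
    return Verdict(5, "no issues found")


print(guard("The report is complete.", stub_judge))
print(guard("TODO: write the summary", stub_judge))
```

Because the judge is passed in as a callable, the same gate works with a locally hosted model, a remote endpoint, or a stub in tests.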

In summary, Glider's explainability features—reasoning chains and highlight spans—play a pivotal role in delivering transparent, interpretable, and efficient evaluations. Their proven effectiveness, evidenced by a 91.3% agreement rate with human evaluators, underscores Glider's value as a tool that bridges the gap between AI-generated assessments and human understanding. By illuminating the evaluation process, these features empower users to make informed decisions and enhance the quality of AI-generated content across various applications.

Glider, developed by Patronus AI, is a 3.8-billion-parameter model that shows how smaller language models can match, or even surpass, significantly larger counterparts on AI evaluation tasks.

Despite its relatively modest size, Glider is reported to match or exceed the capabilities of models up to 17 times larger. It does so with a reported latency of about one second, making it suitable for real-time applications where prompt evaluations are essential.

Glider's design allows it to assess multiple aspects of AI outputs simultaneously, including accuracy, safety, coherence, and tone. This comprehensive evaluation capability eliminates the need for separate assessment passes, streamlining the evaluation process and enhancing efficiency.
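Evaluating several criteria in one pass comes down to placing all of them in a single prompt. The sketch below shows one way to assemble such a prompt; the template wording and the function `build_multi_criteria_prompt` are hypothetical and are not Glider's official prompt format.

```python
def build_multi_criteria_prompt(user_input, model_output, criteria, low=1, high=5):
    """Assemble one judge prompt that covers every criterion in a single pass."""
    if not criteria:
        raise ValueError("at least one criterion is required")
    rubric_lines = [f"- {name}: {description}" for name, description in criteria.items()]
    return "\n".join([
        "Evaluate the MODEL OUTPUT against every criterion listed below.",
        f"Give each criterion an integer score from {low} to {high}.",
        "",
        "CRITERIA:",
        *rubric_lines,
        "",
        f"USER INPUT:\n{user_input}",
        "",
        f"MODEL OUTPUT:\n{model_output}",
    ])


# Criteria names follow the dimensions listed above; descriptions are illustrative.
criteria = {
    "accuracy": "Claims are factually correct.",
    "safety": "No harmful or disallowed content.",
    "coherence": "Ideas follow a logical order.",
    "tone": "Register suits a professional audience.",
}
prompt = build_multi_criteria_prompt(
    "Summarize the quarterly report.",
    "Revenue rose 4% while costs stayed flat.",
    criteria,
)
print(prompt)
```

One prompt with four criteria costs a single model call, whereas four single-criterion prompts cost four calls and repeat the input each time.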

Furthermore, Glider's smaller size enables on-device deployment, addressing privacy concerns associated with transmitting data to external servers. This feature is particularly advantageous for organizations that handle sensitive information and require secure, in-house AI evaluation solutions.

Conclusion

Glider, developed by Patronus AI, represents a significant advancement in the evaluation of large language models (LLMs). Traditional evaluation methods often face challenges such as inconsistency, high costs, and lengthy turnaround times. Glider addresses these issues by providing a more efficient and transparent approach to AI evaluation.

One of Glider's key features is its ability to offer detailed quantitative and qualitative feedback. This ensures transparency and efficiency in AI evaluation, allowing developers to understand the reasoning behind Glider's assessments. By providing structured insights, Glider enables users to make informed decisions about their AI models.

Furthermore, Glider's design allows it to assess multiple dimensions simultaneously, including accuracy, safety, coherence, and tone. This comprehensive evaluation capability eliminates the need for separate assessment passes, streamlining the evaluation process and enhancing efficiency.

In summary, Glider's thoughtful and transparent approach to LLM evaluation addresses key limitations of existing solutions. Its ability to provide detailed, interpretable evaluations with a compact design makes it a valuable tool for developers and organizations seeking to enhance their AI evaluation processes.

Glider, developed by Patronus AI, represents a significant advancement in the field of AI evaluation, with profound implications for researchers, developers, and organizations. By offering a compact yet powerful open-source model, Glider democratizes access to advanced evaluation tools, fostering a collaborative environment that encourages innovation and refinement of AI systems.

One of the most notable aspects of Glider is its open-source nature, which invites researchers and developers worldwide to engage with, modify, and enhance the model to suit specific needs. This openness not only accelerates the pace of AI development but also ensures that a diverse range of perspectives contribute to the model's evolution, leading to more robust and versatile AI solutions.

For organizations, Glider's efficiency and effectiveness in evaluating AI outputs translate into more streamlined development processes. Its ability to provide detailed, interpretable feedback allows teams to identify and address issues promptly, reducing the time and resources required for model refinement. Moreover, Glider's compact size enables on-premises or on-device deployment, ensuring data privacy and security—critical considerations for industries handling sensitive information.

The collaborative potential of Glider extends to the broader AI community. By providing a transparent and accessible evaluation tool, Patronus AI empowers a wide range of stakeholders to participate in the advancement of AI technologies. This collective effort can lead to the development of more ethical, reliable, and efficient AI systems, as shared insights and improvements are integrated into the model.

In summary, Glider's introduction marks a pivotal moment in AI evaluation, offering a tool that not only enhances the understanding and refinement of AI models but also promotes a culture of collaboration and innovation. Its open-source accessibility, combined with its efficiency and effectiveness, positions Glider as a catalyst for future advancements in AI, enabling a diverse community of researchers, developers, and organizations to contribute to and benefit from the ongoing evolution of artificial intelligence.

Press contact

Timon Harz

oneboardhq@outlook.com
