Timon Harz

December 23, 2024

OPENAI O3 BREAKTHROUGH HIGH SCORE ON ARC-AGI-PUB

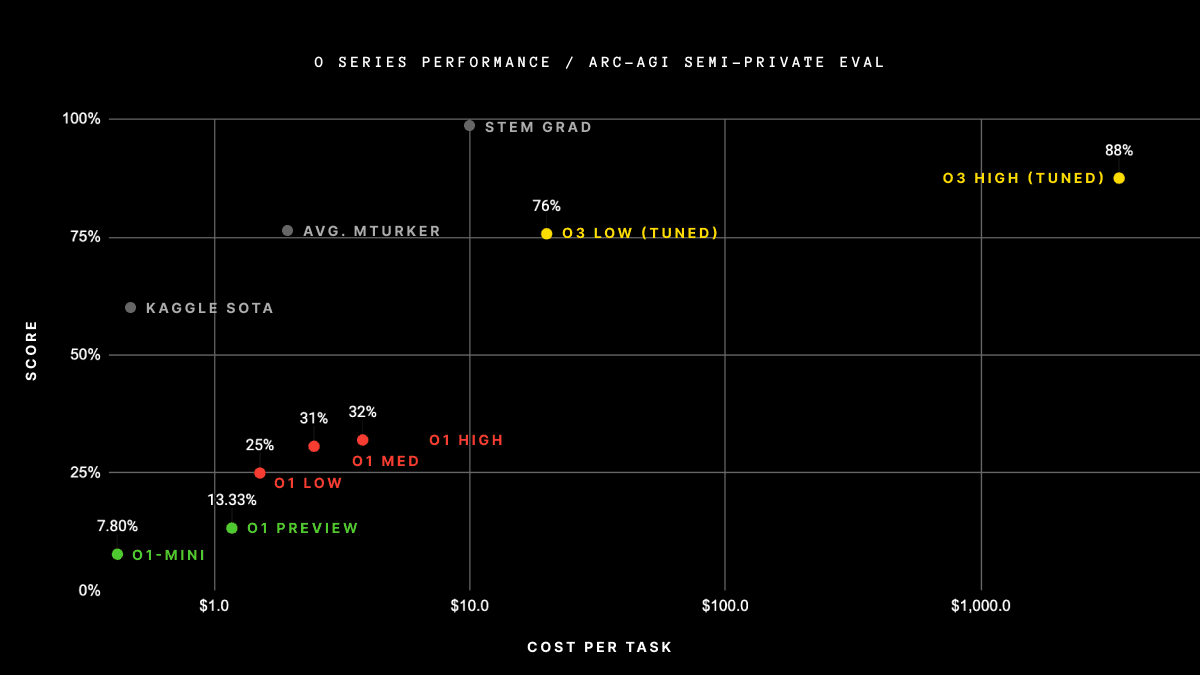

OpenAI's new o3 system - trained on the ARC-AGI-1 Public Training set - has scored a breakthrough 75.7% on the Semi-Private Evaluation set at our stated public leaderboard $10k compute limit. A high-compute (172x) o3 configuration scored 87.5%.

This marks a significant and unexpected leap in AI capabilities, showcasing a level of task adaptation never before seen in GPT models. To put this in perspective, it took ARC-AGI-1 four years to advance from 0% with GPT-3 in 2020 to 5% with GPT-4o in 2024. With o3, all prior assumptions about AI capabilities will need to be re-evaluated.

The ARC Prize mission extends beyond the first benchmark; it serves as a guiding star toward AGI. We are thrilled to collaborate with OpenAI and others next year to develop next-generation AGI benchmarks that endure.

ARC-AGI-2 (maintaining the same format—easy for humans, harder for AI) will debut alongside ARC Prize 2025. We are dedicated to continuing the Grand Prize competition until an efficient, open-source solution scoring 85% is achieved.

Read on for the complete testing report.

OPENAI O3 ARC-AGI RESULTS

We evaluated o3 using two ARC-AGI datasets:

Semi-Private Eval: 100 private tasks to assess overfitting

Public Eval: 400 public tasks

Following OpenAI’s guidelines, we conducted tests at two compute levels with varying sample sizes: 6 (high-efficiency) and 1024 (low-efficiency, 172x compute).

Note: OpenAI has requested that we refrain from publishing high-compute costs. The high-compute setup used roughly 172x the compute of the low-compute configuration.

Given the variable inference budget, efficiency (e.g., compute cost) is now a required metric when reporting performance. We’ve documented both the total costs and the cost per task as an initial proxy for efficiency. As an industry, we will need to determine the best metric for tracking efficiency, but for now, cost provides a solid starting point.

The high-efficiency score of 75.7% fits within the ARC-AGI-Pub budget rules (costs <$10k) and qualifies for 1st place on the public leaderboard!

The low-efficiency score of 87.5% is significantly more expensive, but it demonstrates that performance on novel tasks improves with increased compute (at least to this level).

Despite the high cost per task, these numbers are not simply a result of applying brute-force compute to the benchmark. OpenAI’s new o3 model marks a significant leap in AI’s ability to adapt to novel tasks, representing a genuine breakthrough in capabilities compared to previous LLMs. o3 is capable of adapting to tasks it has never encountered before, approaching human-level performance in the ARC-AGI domain.

However, this generality comes at a high cost and is not yet economical: while it costs around $5 per task for a human to solve ARC-AGI tasks (based on our own testing), o3 requires $17–20 per task in low-compute mode. But we expect cost-performance to improve substantially over the coming months and years, with these capabilities becoming competitive with human performance in a short timeline.

o3’s improvement over the GPT series shows that architecture is crucial. Simply scaling up GPT-4 will not produce these results. Further progress requires new ideas.

IS IT AGI?

ARC-AGI serves as an important benchmark for detecting breakthroughs, particularly by highlighting generalization power in ways that less demanding benchmarks cannot. However, it’s important to remember that ARC-AGI is not an AGI test — as we’ve repeated many times this year. It’s a research tool focused on addressing the most challenging unsolved problems in AI, which it has done effectively for the past five years.

Passing ARC-AGI doesn’t equate to achieving AGI, and I don’t think o3 has reached AGI yet. It still struggles with some very simple tasks, showing clear differences from human intelligence.

Early data suggests that ARC-AGI-2 will still present a significant challenge to o3, potentially reducing its score to under 30% even at high compute (while a skilled human would still score over 95% with no training). This indicates that creating challenging, unsaturated benchmarks is still possible without relying on expert domain knowledge. You’ll know AGI has arrived when it becomes impossible to create tasks that are easy for humans but hard for AI.

WHAT'S DIFFERENT ABOUT O3 COMPARED TO OLDER MODELS?

Why does o3 score so much higher than o1? And why did o1 outperform GPT-4o in the first place? These results provide valuable insights into the ongoing quest for AGI.

My mental model for LLMs is that they act as repositories of vector programs. When prompted, they fetch the corresponding program and "execute" it on the input. LLMs store and operationalize millions of mini-programs through exposure to human-generated content.

This "memorize, fetch, apply" paradigm can achieve high levels of skill at specific tasks given sufficient training data, but it cannot adapt to novel situations or pick up new skills on the fly — there’s no fluid intelligence at play. This is demonstrated by LLMs' low performance on ARC-AGI, the benchmark designed to measure adaptability to novelty. GPT-3 scored 0, GPT-4 scored nearly 0, and GPT-4o reached 5%. Scaling these models to their limits didn’t get ARC-AGI scores anywhere near what brute-force enumeration could achieve years ago (up to 50%).

Adapting to novelty requires two things: first, knowledge — a set of reusable functions or programs — which LLMs have plenty of. Second, you need the ability to recombine these functions into a new program for the task at hand — a form of program synthesis. LLMs have traditionally lacked this ability, but the o series of models addresses that.

We can only speculate about the exact details of how o3 works, but its core mechanism seems to be natural language program search and execution within token space. At test time, the model searches through possible Chains of Thought (CoTs) that describe the steps to solve the task, perhaps guided by an evaluator model. DeepMind hinted in June 2023 that they were researching this very approach — it’s been a long time coming.

While single-generation LLMs struggle with novelty, o3 overcomes this by generating and executing its own programs, with the CoT itself acting as the recombined knowledge. Though this is not the only viable approach to test-time knowledge recombination (test-time training or latent space search could also work), it represents the state-of-the-art as reflected in these ARC-AGI results.

Essentially, o3 exemplifies deep learning-guided program search. The model performs test-time search over a space of "programs" (in this case, CoTs), guided by a deep learning prior (the base LLM). The reason solving an ARC-AGI task can require millions of tokens and thousands of dollars is because this search process explores many possible paths — including backtracking.

However, there are key differences between this approach and the "deep learning-guided program search" path I previously described as the best route to AGI. Notably, the programs generated by o3 are natural language instructions, not executable symbolic programs. This means two things: first, they cannot interact with reality through direct execution, and second, they rely on evaluation by another model, which could lead to errors when operating out of distribution. Moreover, the system can’t autonomously acquire the ability to generate and evaluate these programs; it depends on expert-labeled, human-generated CoT data.

It’s still unclear what the exact limitations of this new system are and how far it can scale. More testing is needed to find out. Regardless, the current performance marks a remarkable achievement and confirms that intuition-guided test-time search over program space is a powerful paradigm for building AI systems capable of adapting to arbitrary tasks.

WHAT'S NEXT?

The open-source replication of o3, facilitated by the ARC Prize competition in 2025, will be a critical step for advancing research in the field. A comprehensive analysis of o3’s strengths and weaknesses is essential to understand its scalability, identify potential bottlenecks, and forecast the capabilities that further advancements could unlock.

In addition, ARC-AGI-1 is nearing saturation. Beyond o3's impressive new score, it’s important to note that a large ensemble of low-compute Kaggle solutions now reaches 81% on the private evaluation.

To push the envelope further, we’re preparing for the release of ARC-AGI-2, which has been in development since 2022. This new version will reset the state-of-the-art, aiming to challenge AI’s current limitations with tough, high-signal evaluations.

Early testing of ARC-AGI-2 shows that it will be both useful and extremely demanding, even for o3. Alongside the launch of ARC Prize 2025, we plan to release ARC-AGI-2, with an estimated launch in late Q1.

Looking ahead, the ARC Prize Foundation will continue creating new benchmarks to direct the focus of researchers toward the toughest unsolved problems on the path to AGI. We're already working on a third-generation benchmark that completely departs from the 2019 ARC-AGI format, introducing fresh and exciting ideas.

GET INVOLVED: OPEN-SOURCE ANALYSIS

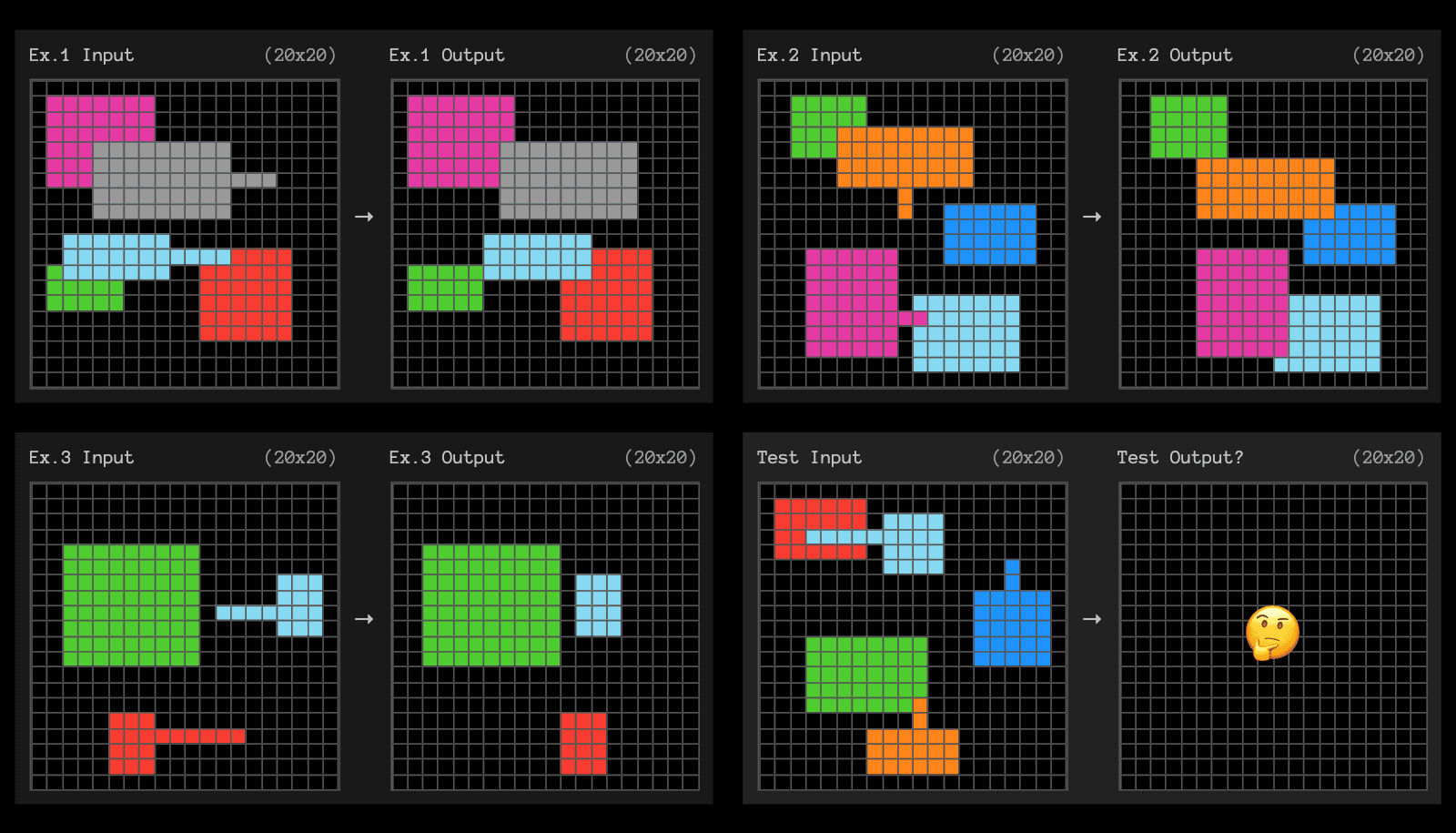

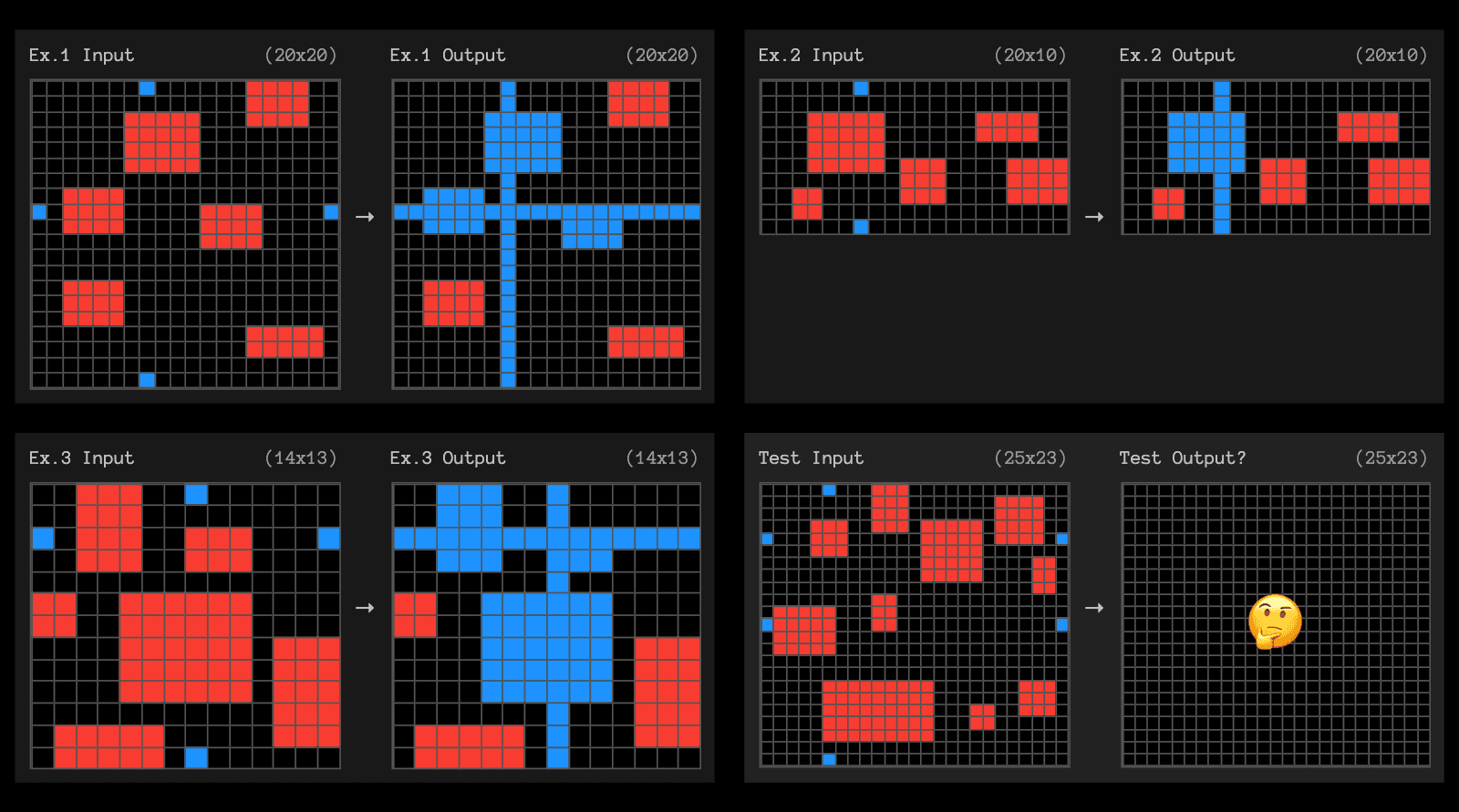

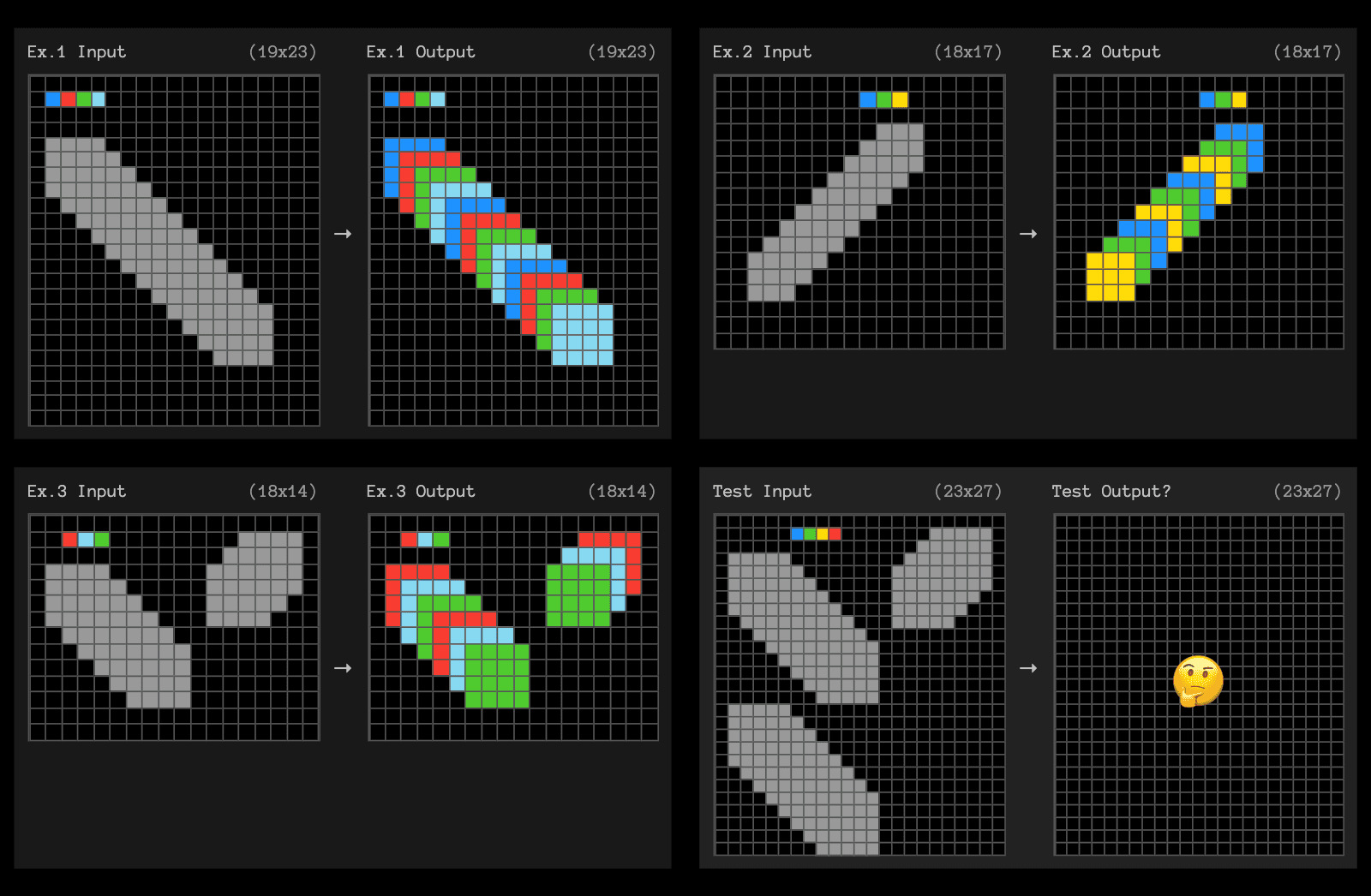

Today, we’re also releasing high-compute, o3-labeled tasks and invite your help in analyzing them. Specifically, we're keen to explore the ~9% of Public Eval tasks that o3 couldn't solve, despite significant compute, but which are still relatively straightforward for humans.

We encourage the community to help assess both solved and unsolved tasks to better understand what makes them challenging.

To kick things off, here are three examples of tasks that high-compute o3 was unable to solve.

CONCLUSIONS

To sum up – o3 represents a significant leap forward. Its performance on ARC-AGI highlights a genuine breakthrough in adaptability and generalization, in a way that no other benchmark could have made as explicit.

o3 fixes the fundamental limitation of the LLM paradigm – the inability to recombine knowledge at test time – and it does so via a form of LLM-guided natural language program search. This is not just incremental progress; it is new territory, and it demands serious scientific attention.

Press contact

Timon Harz

oneboardhq@outlook.com

Other posts

Company

About

Blog

Careers

Press

Legal

Privacy

Terms

Security