Timon Harz

December 23, 2024

OpenAI announces new o3 models

OpenAI’s release of o3 introduces a major leap in AI reasoning capabilities, signaling progress towards AGI. However, questions remain about its real-world performance and the implications for AI safety.

OpenAI saved its biggest announcement for the last day of its 12-day “shipmas” event.

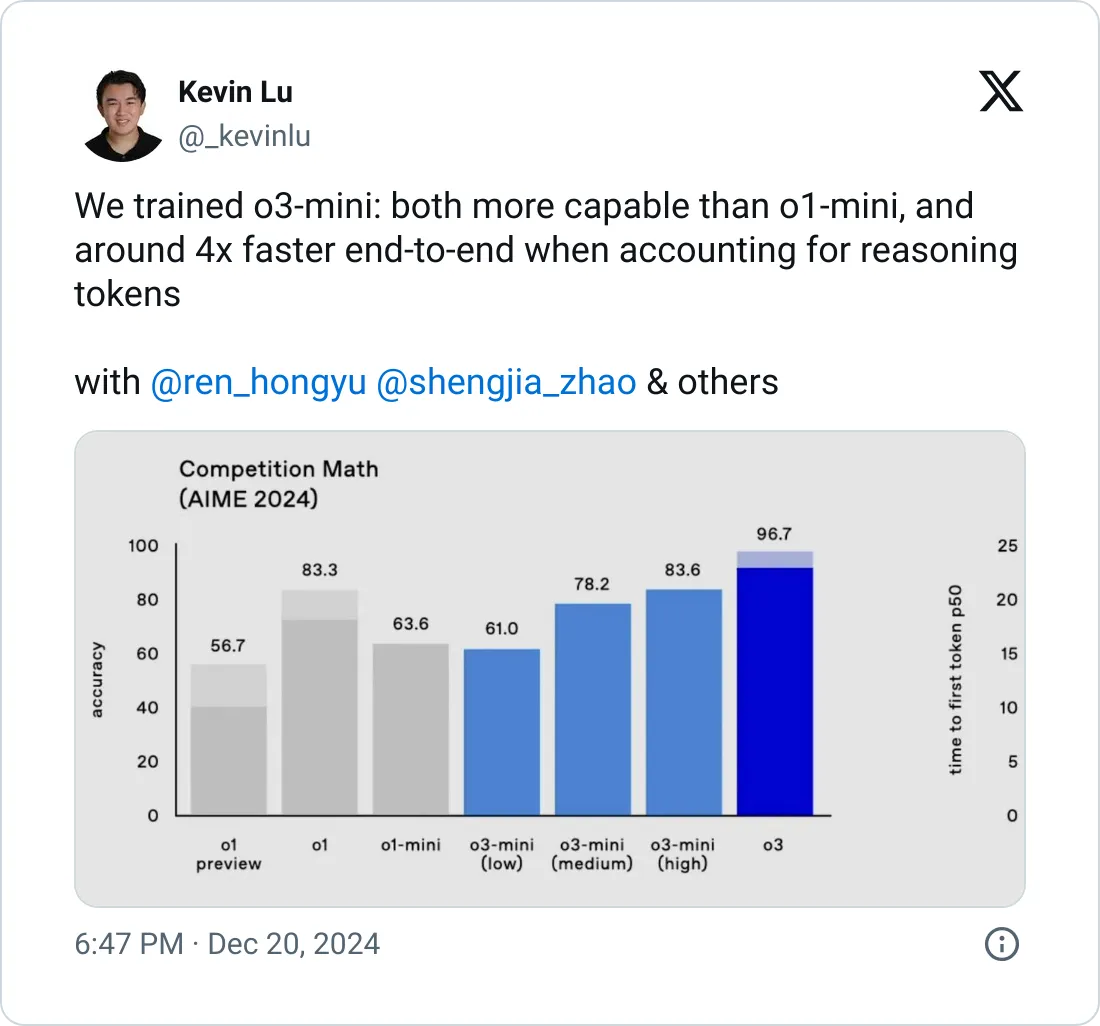

On Friday, the company unveiled o3, the successor to the o1 “reasoning” model it released earlier in the year. o3 is a model family, to be more precise — as was the case with o1. There’s o3 and o3-mini, a smaller, distilled model fine-tuned for particular tasks.

OpenAI makes the remarkable claim that o3, at least in certain conditions, approaches AGI — with significant caveats. More on that below.

Why is the new model called o3 instead of o2? Trademarks may be the reason. OpenAI reportedly skipped o2 to avoid a potential conflict with British telecom provider O2, a claim somewhat confirmed by CEO Sam Altman during a livestream this morning. Strange, isn’t it?

Currently, neither o3 nor o3-mini is widely available, but safety researchers can sign up for a preview of o3-mini starting today. A preview of o3 will follow, though OpenAI hasn’t specified when. Altman mentioned that o3-mini is expected to launch toward the end of January, with o3 following soon after. However, this timeline contrasts with Altman’s recent comments, where he expressed a preference for a federal testing framework before releasing new reasoning models to better monitor and mitigate their risks.

And there are risks. AI safety researchers have found that o1’s reasoning abilities led it to deceive human users at a higher rate than conventional models from Meta, Anthropic, and Google. It’s possible that o3 will attempt to deceive even more often than its predecessor; we’ll know once OpenAI’s red-team partners release their testing results. For now, OpenAI says it is using a new approach called “deliberative alignment” to ensure that models like o3 adhere to safety principles, a method also used to align o1. The company has published a study detailing this work.

Reasoning Steps

Unlike most AI models, reasoning models like o3 can effectively fact-check their own responses, which helps them avoid some of the pitfalls that trip up other systems. However, this self-checking introduces latency. Like o1, o3 takes longer than a typical non-reasoning model to arrive at a solution, usually anywhere from a few seconds to minutes. The trade-off? o3 is often more reliable in complex domains like physics, other sciences, and mathematics.

o3 was trained to “think” before responding through what OpenAI refers to as a “private chain of thought.” This allows the model to reason through a task and plan ahead, executing a series of steps over time that help it reach a solution. In practice, when given a prompt, o3 pauses to consider related prompts and “explains” its reasoning process. After evaluating, it summarizes what it believes to be the most accurate answer. New in o3 is the ability to adjust reasoning time: the model can be set to low, medium, or high compute (i.e., thinking time). The higher the compute setting, the better o3 performs on a task.
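To make the compute settings concrete, here is a minimal sketch of how a caller might select a reasoning level per request. It assumes the settings are exposed through a `reasoning_effort` field like the one OpenAI's Chat Completions API documents for its reasoning models; the article does not confirm that o3 uses this interface, and the model name `o3-mini` and the helper `build_request` are illustrative only. The function only builds a request body and sends nothing over the network.

```python
import json

# Effort levels named in the article: low, medium, or high compute.
ALLOWED_EFFORTS = ("low", "medium", "high")


def build_request(prompt: str, effort: str = "medium", model: str = "o3-mini") -> dict:
    """Build a chat-style request body with a reasoning-effort setting.

    Hypothetical helper: the field name `reasoning_effort` and the default
    model name are assumptions, not details confirmed by the article.
    """
    if effort not in ALLOWED_EFFORTS:
        raise ValueError(f"effort must be one of {ALLOWED_EFFORTS}, got {effort!r}")
    return {
        "model": model,
        "reasoning_effort": effort,
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    body = build_request("Prove that the square root of 2 is irrational.", effort="high")
    print(json.dumps(body, indent=2))
```

Validating the effort value up front keeps a typo from silently falling back to a default level, which matters when the high setting costs far more per task than the low one.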

Benchmarks and AGI

One of the biggest questions surrounding today’s release was whether OpenAI would claim its new models are approaching AGI, or artificial general intelligence — AI that can perform any task a human can. OpenAI defines AGI as "highly autonomous systems that outperform humans at most economically valuable work." Reaching AGI would be a significant milestone for OpenAI and carry contractual implications with its major partner, Microsoft. According to the terms of their deal, once OpenAI achieves AGI, it is no longer obligated to provide Microsoft with access to its most advanced technologies that meet the AGI definition.

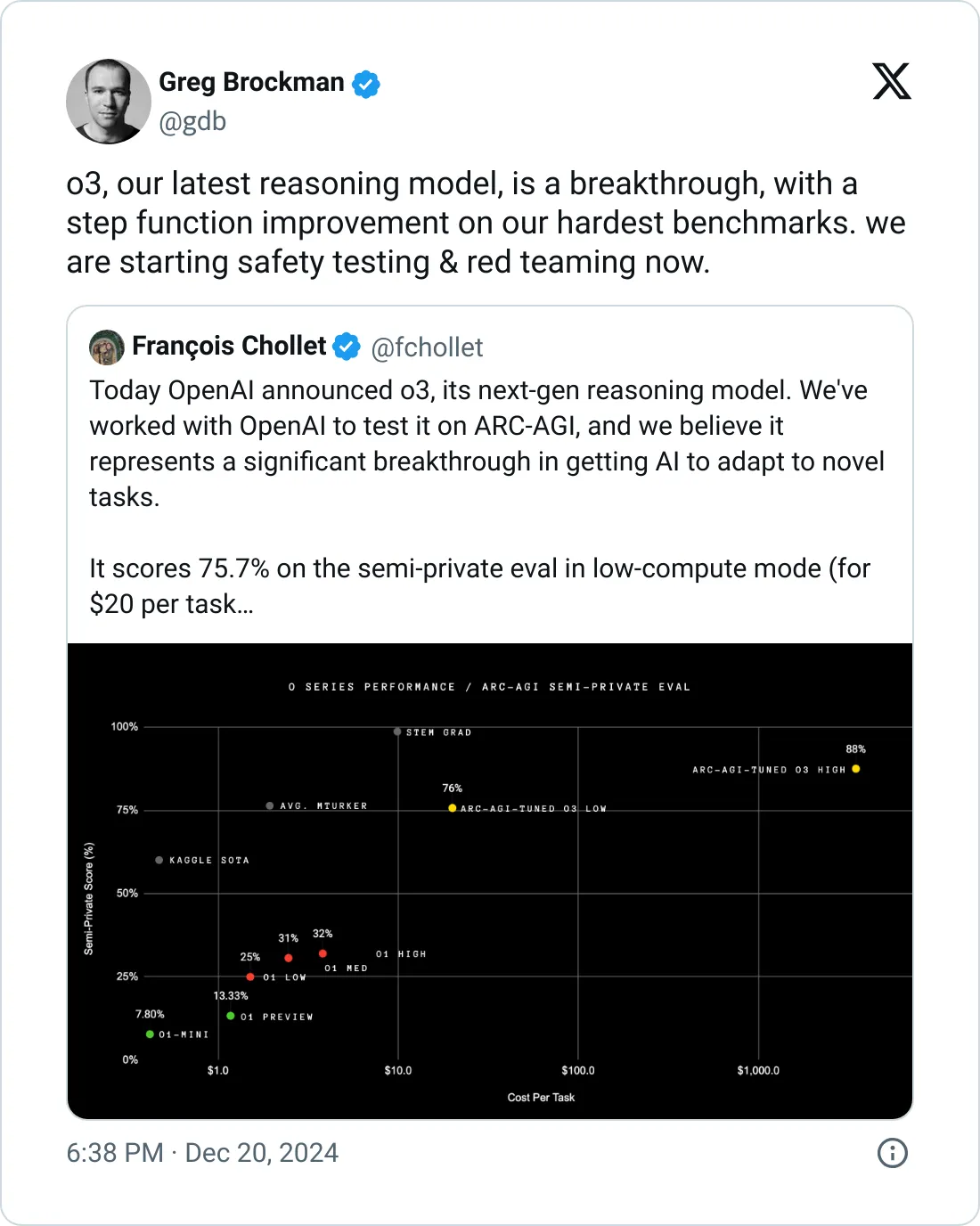

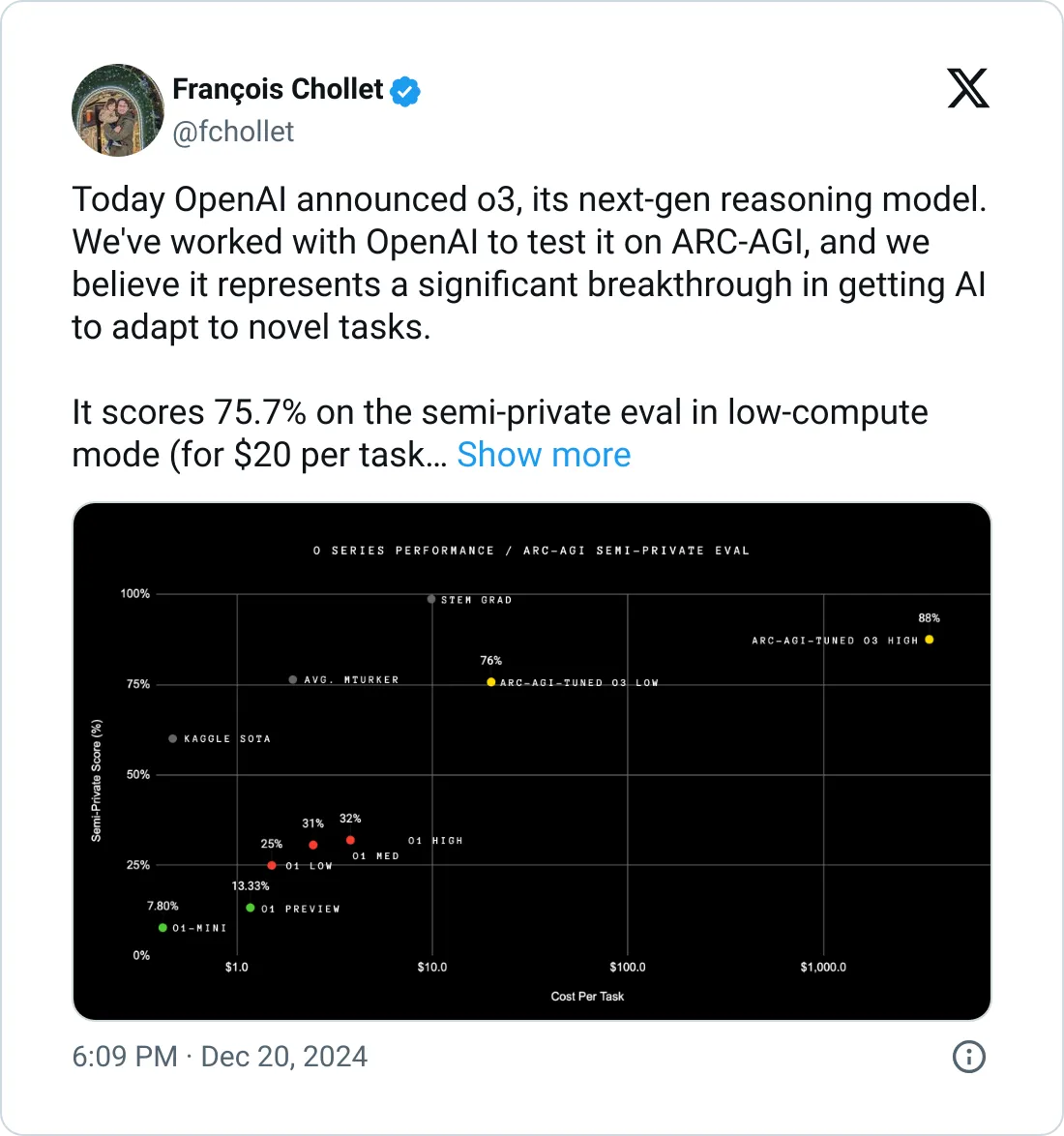

In terms of benchmarks, OpenAI’s progress toward AGI is becoming more apparent. On the ARC-AGI test, designed to evaluate an AI system’s ability to acquire new skills outside its original training data, o3 scored 87.5% on the high compute setting. Even on the low compute setting, o3 outperformed o1 by a factor of three. However, the high compute setting comes at a steep cost, with each task potentially running into thousands of dollars, according to ARC-AGI co-creator François Chollet.

Incidentally, OpenAI says it’ll partner with the foundation behind ARC-AGI to build the next generation of its benchmark.

Of course, ARC-AGI has its limitations — and its definition of AGI is but one of many.

On other benchmarks, o3 blows away the competition.

The model outperforms o1 by 22.8 percentage points on SWE-Bench Verified, a benchmark focused on programming tasks, and achieves a Codeforces rating, another measure of coding skills, of 2727. (A rating of 2400 places an engineer at the 99.2nd percentile.) o3 scores 96.7% on the 2024 American Invitational Mathematics Examination (AIME), missing just one question, and achieves 87.7% on GPQA Diamond, a set of graduate-level biology, physics, and chemistry questions. Finally, o3 sets a new record on Epoch AI’s FrontierMath benchmark, solving 25.2% of problems; no other model exceeds 2%.
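Two of these figures are easy to misread, so a quick arithmetic check may help. The sketch below assumes the AIME score covers 30 questions in total (the 2024 AIME I and II, 15 questions each), which the article does not state, and it treats the 2% FrontierMath figure as an upper bound on every other model's score. The function names are illustrative.

```python
def score_percent(correct: int, total: int) -> float:
    """Return a score as a percentage rounded to one decimal place."""
    return round(100 * correct / total, 1)


def min_ratio(new_score: float, old_upper_bound: float) -> float:
    """Lower bound on new/old when the old score is at most old_upper_bound."""
    return round(new_score / old_upper_bound, 1)


# Assumption: 30 questions in total (AIME I and II, 15 each).
aime = score_percent(29, 30)

# FrontierMath: o3 solves 25.2%; no other model exceeds 2%.
frontier = min_ratio(25.2, 2.0)

print(f"AIME with one miss out of 30: {aime}%")
print(f"FrontierMath: at least {frontier}x the next-best model")
```

Under that assumption, one miss out of 30 gives the reported 96.7%, whereas one miss on a single 15-question exam would give 93.3%. On FrontierMath, the gap works out to at least a 12.6-fold improvement over any other model.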

These claims should be taken with some caution, as they come from OpenAI’s internal evaluations. We'll need to wait for external benchmarking from customers and organizations to truly assess how the model performs in real-world applications.

A Trend Emerges

Following the release of OpenAI's first series of reasoning models, there has been a surge in similar models from competing AI companies, including Google. In early November, DeepSeek, an AI research firm backed by quant traders, previewed its reasoning model, DeepSeek-R1. That same month, Alibaba’s Qwen team introduced what it claimed was the first "open" alternative to o1.

So, what’s driving this wave of reasoning models? One key factor is the search for new approaches to refine generative AI. As TechCrunch recently reported, traditional “brute force” methods for scaling up models are no longer delivering the same level of improvement they once did.

However, not everyone is convinced that reasoning models are the best direction. They tend to be costly due to the significant computing power needed to run them. And while they’ve excelled in benchmarks so far, it remains uncertain whether they can maintain this momentum in the long term.

Interestingly, the release of o3 coincides with the departure of one of OpenAI’s most influential scientists. Alec Radford, the lead author of the paper that launched OpenAI’s GPT series (including GPT-3 and GPT-4), announced this week that he’s leaving to pursue independent research.

Press contact

Timon Harz

oneboardhq@outlook.com
