Timon Harz

December 1, 2024

Alibaba’s Marco-o1: Advancing Open-Ended AI Reasoning for Real-World Challenges

Marco-o1 by Alibaba targets complex, open-ended problems that lack a single correct answer. It combines Chain-of-Thought fine-tuning with Monte Carlo Tree Search to improve performance on tasks that require creativity and nuanced problem-solving.

Introduction

Alibaba's Marco-o1 is an AI model aimed at open-ended reasoning. Developed by the MarcoPolo team, it is inspired by OpenAI's o1 but introduces its own techniques for tackling complex, ambiguous challenges, particularly in settings where conventional models struggle. Unlike models designed mainly for structured tasks, Marco-o1 is optimized to generalize across diverse domains, handling tasks that require nuanced problem-solving, creativity, and cultural understanding.

One of the most remarkable features of Marco-o1 is its use of advanced techniques such as Chain-of-Thought (CoT) fine-tuning and Monte Carlo Tree Search (MCTS). These methods allow the model to navigate intricate reasoning paths and make more accurate predictions by simulating possible actions and evaluating their potential outcomes. By incorporating these approaches, Marco-o1 can handle ambiguous situations where definitive answers are not readily available, making it ideal for real-world applications. Additionally, Marco-o1 has demonstrated superior performance in tasks like machine translation, where it excels in understanding and translating colloquial expressions and nuanced meanings, outperforming other models in terms of contextual accuracy.

Marco-o1’s ability to integrate different reasoning pathways using MCTS not only enhances its problem-solving capabilities but also enables the model to perform complex tasks more effectively. Through a multi-step process, it evaluates different reasoning chains, adjusting its approach based on feedback and reflection mechanisms to continually refine its output. This dynamic approach allows Marco-o1 to tackle tasks in areas such as translation, instruction-following, and even creative problem-solving.

In summary, Marco-o1 stands as a pioneering model in the realm of open-ended reasoning, offering impressive advancements in both its technological approach and real-world applicability.

The goal of Alibaba's Marco-o1 is to tackle complex, open-ended reasoning tasks that require sophisticated problem-solving across multiple domains. Unlike traditional models, which excel at well-defined tasks with clear parameters, Marco-o1 is designed to handle ambiguity, uncertainty, and scenarios that lack predefined solutions. This model's strength lies in its ability to reason through intricate, multi-step problems by evaluating different possibilities and continuously refining its approach based on dynamic feedback loops.

At its core, Marco-o1 is built to perform high-level cognitive functions similar to human reasoning, enabling it to address tasks that involve contextual interpretation, creativity, and nuanced decision-making. This is achieved through the integration of advanced techniques such as Chain-of-Thought (CoT) fine-tuning and Monte Carlo Tree Search (MCTS). CoT fine-tuning ensures that the model can break down complex problems into smaller, manageable reasoning steps, while MCTS allows Marco-o1 to simulate various potential actions and assess their outcomes through probabilistic reasoning. By using MCTS, Marco-o1 can explore multiple reasoning pathways, improving its decision-making abilities in cases where there is no single correct answer.

Furthermore, the model is trained on a diverse set of datasets, including structured reasoning tasks, multilingual datasets, and instruction-following tasks, all of which enhance its ability to handle open-ended questions that span different domains. This versatility is a direct result of Marco-o1’s underlying design, which focuses on expanding the solution space and using reasoning chains to simulate human-like thought processes.

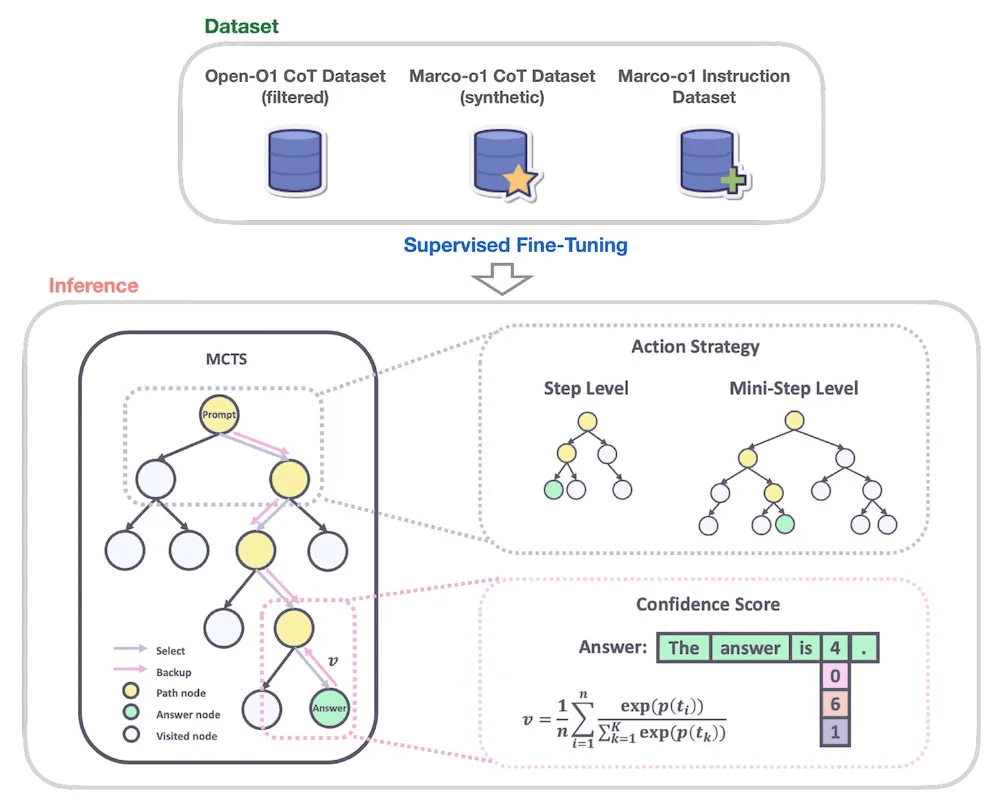

The Marco-o1 model from Alibaba introduces several innovative features, enhancing its reasoning capabilities and making it a major step forward in large reasoning models (LRMs). One of its key components is Chain-of-Thought (CoT) fine-tuning, which allows the model to generate complex reasoning pathways by systematically breaking down tasks into smaller steps. This process helps Marco-o1 achieve improved accuracy in open-ended problem-solving, particularly in scenarios where traditional models might struggle. It relies on structured reasoning datasets like the Open-O1 CoT and Marco-o1 CoT datasets to guide its logical processing.

Additionally, Marco-o1 integrates Monte Carlo Tree Search (MCTS) to explore solution spaces more effectively. MCTS is used to simulate various decision paths by evaluating potential outcomes and guiding the model towards more optimal reasoning sequences. Each node in the MCTS represents a reasoning state, and through simulated rollouts, the model evaluates the "reward" based on the confidence of its reasoning chain. This integration allows the model to tackle complex, ambiguous problems with greater efficiency by considering a wider range of possible solutions.

Marco-o1 also employs a reflection mechanism, a self-correction feature that prompts the model to rethink its conclusions when it encounters uncertainty. This helps improve its reasoning accuracy, especially in difficult tasks where the initial solution may not be optimal. Furthermore, the model’s use of mini-steps (short, fixed-length spans of tokens) as MCTS actions offers a finer granularity of reasoning, making it better suited to nuanced problem-solving scenarios.

Through these innovative features, Marco-o1 shows great promise in a variety of domains, from translation to open-ended reasoning tasks, by leveraging advanced techniques to break down problems, simulate various outcomes, and refine its thinking in real-time.

What is Marco-o1?

Marco-o1, a cutting-edge Large Reasoning Model (LRM), is designed to enhance the problem-solving capabilities of AI by tackling complex, open-ended challenges where conventional models struggle. The core functionality of Marco-o1 revolves around its innovative reasoning techniques, which include Chain-of-Thought (CoT) fine-tuning, Monte Carlo Tree Search (MCTS), and reflection mechanisms. These elements empower the model to navigate intricate real-world scenarios by systematically generating and evaluating multiple reasoning paths, adjusting strategies as needed to arrive at the best solution.

The model uses MCTS, in which each node represents a reasoning state and each action is an LLM-generated output that serves as a reasoning step. During the "rollout" phase of MCTS, Marco-o1 continues the reasoning process until it reaches a conclusion. Each path is then scored with a confidence metric: for every generated token, a softmax over the log-probabilities of that token and its top alternative tokens yields a confidence score, and these scores are averaged over the rollout to give the path's reward. This probabilistic evaluation allows the model to select the most promising reasoning paths. The model can further refine its search by using "mini-steps," a strategy that breaks reasoning into smaller, more detailed units and enables deeper exploration of the solution space.
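The confidence scoring described above can be sketched in a few lines. This is a minimal illustration, not Marco-o1's actual code: the function names and the input layout (one chosen log-probability plus a list of candidate log-probabilities per token) are assumptions made for the example, and the candidate list is assumed to include the chosen token.

```python
import math


def token_confidence(chosen_logprob: float, candidate_logprobs: list[float]) -> float:
    """Softmax of the chosen token's log-prob over a small candidate set.

    Assumption: candidate_logprobs holds the log-probs of the top candidate
    tokens at this position, including the chosen token itself.
    """
    denominator = sum(math.exp(lp) for lp in candidate_logprobs)
    return math.exp(chosen_logprob) / denominator


def rollout_reward(steps: list[tuple[float, list[float]]]) -> float:
    """Average the per-token confidence scores over one rollout."""
    if not steps:
        raise ValueError("a rollout must contain at least one token")
    scores = [token_confidence(chosen, candidates) for chosen, candidates in steps]
    return sum(scores) / len(scores)


# Hypothetical log-probs for a two-token rollout (not real model output).
candidates = [math.log(p) for p in (0.5, 0.2, 0.1, 0.1, 0.05)]
confident_token = (math.log(0.5), candidates)   # picked the most likely token
hesitant_token = (math.log(0.1), candidates)    # picked a less likely token
reward = rollout_reward([confident_token, hesitant_token])
```

A rollout whose tokens were all chosen with high relative probability gets a reward near 1, while a rollout full of low-probability choices gets a reward near 0, which is what lets the search prefer one reasoning path over another.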

Additionally, Marco-o1 is designed with self-reflection capabilities, allowing it to revisit previous reasoning steps to identify and correct potential mistakes. This unique feature, paired with its flexible exploration strategy, makes Marco-o1 particularly adept at solving tasks that require open-ended thinking, such as advanced translation and reasoning tasks, where traditional models may fail due to lack of nuanced understanding.

Marco-o1, Alibaba’s new large reasoning model, introduces advancements in handling complex, open-ended tasks. Whereas models such as OpenAI's o1 are known primarily for structured reasoning in domains like mathematics and coding, Marco-o1 combines Chain-of-Thought (CoT) fine-tuning with Monte Carlo Tree Search (MCTS) to extend its problem-solving to less structured tasks. These techniques allow Marco-o1 to handle nuanced tasks involving ambiguity, where no definitive solution exists, by exploring potential answers more deeply through iterative reasoning.

A key feature distinguishing Marco-o1 from other AI models, including OpenAI's o1, is its broader generalization across domains requiring creativity, cultural insight, and open-ended problem solving. While models like o1 excel at structured environments and predefined tasks, Marco-o1 is designed for more real-world applications, such as translation tasks and understanding context, where less concrete metrics for evaluation exist. The model’s MCTS framework helps it evaluate a large solution space, guiding the AI through diverse reasoning chains and improving its decision-making over time.

Marco-o1’s integration of these techniques has led to notable improvements in tasks like machine translation, especially in capturing colloquial nuances. For instance, it translates some slang and idiomatic expressions more accurately than conventional translation tools such as Google Translate. This ability to grasp contextual meaning is particularly important in applications requiring flexibility and adaptation across languages and domains, setting Marco-o1 apart from models tuned mainly for structured tasks.

In conclusion, while OpenAI's o1 remains a powerful tool for structured tasks, Marco-o1 expands the boundaries of AI's problem-solving abilities with its open-ended reasoning framework, making it better suited for complex, ambiguous challenges across various industries.

Alibaba's Marco-o1 model is designed to handle complex, open-ended problems—those lacking clear-cut solutions or well-defined evaluation metrics. This is a critical advancement for AI, enabling models to address real-world scenarios that require reasoning beyond straightforward, structured tasks. Marco-o1 incorporates techniques like Chain-of-Thought (CoT) fine-tuning and Monte Carlo Tree Search (MCTS) to improve problem-solving in ambiguous contexts.

In traditional AI systems, ambiguity often presents a challenge, as these models excel in environments where inputs and expected outcomes are clear. However, in real-world problems—such as those found in areas like language translation, decision-making, or strategic planning—ambiguity is unavoidable. Marco-o1 leverages advanced reasoning techniques to explore multiple potential solutions simultaneously, refining its responses based on iterative feedback and deeper exploration of possible outcomes.

A key feature of Marco-o1’s reasoning capabilities is its ability to simulate multiple decision paths using MCTS. This technique explores different sequences of actions or solutions by evaluating numerous potential outcomes, allowing the model to navigate complex situations that lack predefined answers. By adjusting the "granularity" of these decisions, Marco-o1 can balance between computational efficiency and accuracy, ensuring that it addresses even the most nuanced aspects of a problem.
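The granularity trade-off can be made concrete with a small sketch. The Marco-o1 report describes mini-steps of 32 or 64 tokens; the helper below is a hypothetical illustration of that idea, not the model's code, and simply shows how a smaller step size turns the same output into more search nodes.

```python
def split_into_mini_steps(token_ids: list[int], mini_step_size: int) -> list[list[int]]:
    """Cut a token sequence into consecutive chunks of at most mini_step_size tokens.

    Each chunk would correspond to one action (one tree level) in the search.
    """
    if mini_step_size <= 0:
        raise ValueError("mini_step_size must be positive")
    return [
        token_ids[start:start + mini_step_size]
        for start in range(0, len(token_ids), mini_step_size)
    ]


# A hypothetical 200-token reasoning chain, represented by dummy token ids.
tokens = list(range(200))

coarse = split_into_mini_steps(tokens, 64)  # 4 chunks: fewer, larger decisions
fine = split_into_mini_steps(tokens, 32)    # 7 chunks: more points to branch
```

Finer chunks give the search more places to branch and reconsider, at the cost of a deeper tree and more model calls per path.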

Through these innovations, Marco-o1 is pushing the boundaries of what AI can achieve in real-world applications, particularly in domains that require creativity, cultural understanding, and the ability to adapt to evolving scenarios.

Innovative Techniques Behind Marco-o1

Chain-of-thought (CoT) fine-tuning is a powerful technique in large language models (LLMs) that helps improve their ability to break down complex problems into smaller, manageable steps. This process allows a model to follow a sequence of reasoning, making it particularly valuable for tasks that require multi-step logic, such as mathematical problem-solving, decision-making, and open-ended reasoning. CoT fine-tuning essentially involves training the model on datasets where each task is decomposed into a series of intermediate steps. These steps guide the model in reasoning through problems in a structured manner, improving its overall problem-solving accuracy.
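As a rough illustration of what such a training record might look like, the sketch below turns a question, its intermediate steps, and its answer into a single training string. The record layout, the `Step N:` numbering, and the `<Thought>`/`<Output>` tag names are illustrative assumptions, not a documented dataset format.

```python
from dataclasses import dataclass


@dataclass
class CoTExample:
    """One hypothetical chain-of-thought training record."""
    question: str
    steps: list[str]
    answer: str


def to_training_text(example: CoTExample) -> str:
    """Serialize the record so the reasoning steps precede the final answer."""
    numbered = "\n".join(
        f"Step {i}: {step}" for i, step in enumerate(example.steps, start=1)
    )
    return (
        f"Question: {example.question}\n"
        f"<Thought>\n{numbered}\n</Thought>\n"
        f"<Output>\n{example.answer}\n</Output>"
    )


example = CoTExample(
    question="A shop sells pens at 3 for $2. How much do 12 pens cost?",
    steps=[
        "12 pens make 12 / 3 = 4 groups of three.",
        "Each group costs $2, so 4 groups cost 4 * 2 = $8.",
    ],
    answer="$8",
)
text = to_training_text(example)
```

Training on strings of this shape teaches the model to emit the intermediate steps before committing to an answer, rather than jumping straight to the result.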

For example, in models like Marco-o1, CoT fine-tuning is paired with methods like Monte Carlo Tree Search (MCTS), which further refines the model's ability to explore and evaluate multiple reasoning pathways. In the MCTS framework, each node in the tree represents a distinct reasoning state, and the possible actions (steps or mini-steps) are determined by the outputs of the LLM. The reasoning is expanded by evaluating different pathways through the use of reward scores, which are calculated based on the confidence in each reasoning step. By incorporating this approach, the model can generate a chain of reasoning that is progressively refined, allowing it to tackle even the most complex problem-solving tasks.

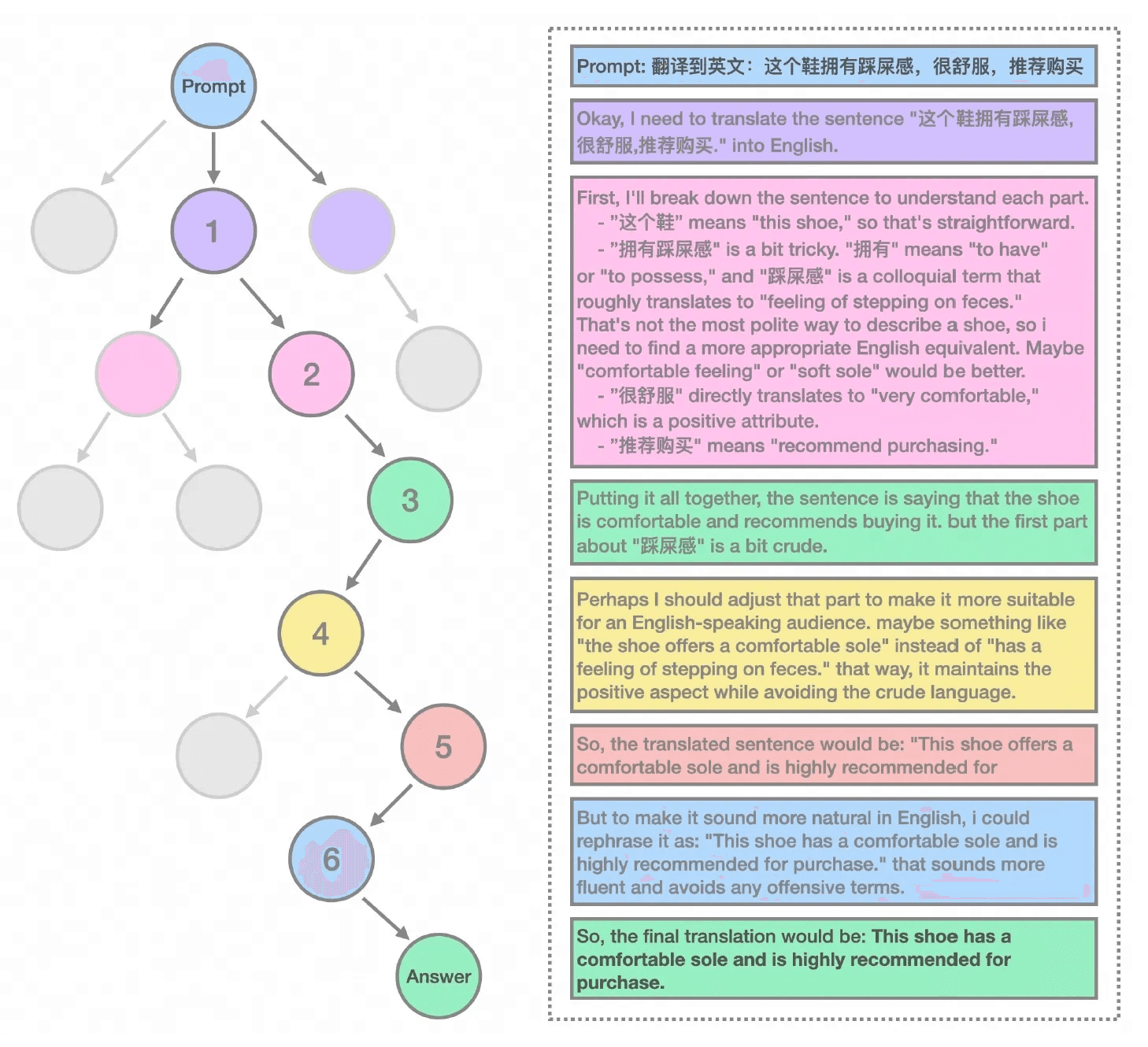

The primary objective of Marco-o1 was to address the challenges of reasoning in open-ended scenarios. To this end, the researchers tested the model on translating colloquial and slang expressions, a task that requires understanding subtle nuances of language, culture, and context. The experiments showed that Marco-o1 was able to capture and translate these expressions more effectively than traditional translation tools. For instance, the model correctly translated a colloquial Chinese expression that literally means “This shoe offers a stepping-on-poop sensation” into the English equivalent “This shoe has a comfortable sole.” The model's reasoning chain shows how it evaluates different potential meanings and arrives at the correct translation.

This paradigm can prove to be useful for tasks such as product design and strategy, which require deep and contextual understanding and do not have well-defined benchmarks and metrics.

In practice, CoT fine-tuning trains the model not only to arrive at the correct answer but also to reason through the problem in a way that mirrors human logical processes. This method is especially effective when the problem-solving process is not linear and requires considering multiple perspectives or iterating on possible solutions. Additionally, models like Marco-o1 have expanded the utility of CoT by experimenting with different granularity levels in their reasoning steps, such as "mini-steps," which enable the model to explore more detailed and nuanced solutions. These innovations are vital in ensuring that LLMs can generalize their reasoning across a variety of domains, from coding to real-world decision-making.

This technique plays a critical role in enhancing the model's capacity for open-ended problem-solving, as it systematically breaks down each complex task into digestible reasoning units, ensuring accuracy and completeness in its output.

Monte Carlo Tree Search (MCTS) is a key component of Alibaba's Marco-o1 model, significantly enhancing its decision-making capabilities in complex and uncertain environments. MCTS is particularly useful for problems involving sequential decision-making under uncertainty, where the outcomes are not deterministic and must be approximated through simulations. This method iteratively explores potential outcomes by expanding a tree of decision nodes, balancing exploration of new actions and exploitation of known promising paths. In the context of Marco-o1, MCTS enables the model to optimize open-ended reasoning, exploring multiple possible solutions and adjusting strategies in real-time.
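The loop of selection, expansion, simulation, and backpropagation can be sketched on a toy problem. This is generic MCTS with the standard UCT selection rule, not Marco-o1's implementation: the two candidate steps, their fixed confidence values, and the two-step depth limit are all made-up stand-ins for real LLM output.

```python
import math
import random

# Hypothetical stand-in for an LLM: every state offers the same two candidate
# steps, each with a fixed confidence score (not real model output).
STEP_CONFIDENCE = {"verify": 0.9, "guess": 0.1}
MAX_DEPTH = 2  # a reasoning chain ends after two steps in this toy setup


class Node:
    def __init__(self, state=(), parent=None):
        self.state = state          # tuple of steps chosen so far
        self.parent = parent
        self.children = []
        self.visits = 0
        self.total_reward = 0.0

    def is_terminal(self):
        return len(self.state) >= MAX_DEPTH

    def untried_steps(self):
        tried = {child.state[-1] for child in self.children}
        return [step for step in STEP_CONFIDENCE if step not in tried]

    def mean_reward(self):
        return self.total_reward / self.visits if self.visits else 0.0


def uct_score(child, c=1.4):
    # Exploitation term plus an exploration bonus that shrinks with visits.
    bonus = c * math.sqrt(math.log(child.parent.visits) / child.visits)
    return child.mean_reward() + bonus


def rollout(state, rng):
    # Finish the chain with random steps, then score it by mean confidence.
    steps = list(state)
    while len(steps) < MAX_DEPTH:
        steps.append(rng.choice(list(STEP_CONFIDENCE)))
    return sum(STEP_CONFIDENCE[s] for s in steps) / len(steps)


def search(iterations=50, seed=0):
    rng = random.Random(seed)
    root = Node()
    for _ in range(iterations):
        node = root
        # 1. Selection: descend while every candidate step has been tried.
        while not node.is_terminal() and not node.untried_steps():
            node = max(node.children, key=uct_score)
        # 2. Expansion: add one untried step as a new child.
        if not node.is_terminal():
            step = node.untried_steps()[0]
            child = Node(node.state + (step,), parent=node)
            node.children.append(child)
            node = child
        # 3. Simulation, then 4. backpropagation up to the root.
        reward = rollout(node.state, rng)
        while node is not None:
            node.visits += 1
            node.total_reward += reward
            node = node.parent
    return root


def best_chain(root):
    # Follow the child with the highest average reward at each level.
    node = root
    while node.children:
        node = max(node.children, key=Node.mean_reward)
    return node.state


root = search(iterations=50, seed=0)
chain = best_chain(root)
```

In this toy setup the search settles on the chain made of the two high-confidence steps. The exploration constant `c` controls how readily the search revisits branches that currently look worse.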

The strength of MCTS lies in its ability to handle uncertainty by sampling a large number of possible future states, each weighted by its potential outcome. Marco-o1 leverages this by using Monte Carlo simulations to guide its reasoning processes, allowing the model to make informed decisions even when faced with incomplete or ambiguous information. This aligns with Marco-o1’s emphasis on generalizing to broader problem domains where rewards are not immediately obvious or easily quantifiable. For example, in tasks that require abstract reasoning or the synthesis of information from multiple sources, MCTS helps Marco-o1 to dynamically adjust its approach, continuously refining its predictions as new data or scenarios emerge.

By incorporating MCTS, Marco-o1 is not only able to assess a wide range of possibilities but can also optimize its reasoning strategies, making it a robust tool for complex, real-world applications where traditional models might struggle with the breadth and variability of decision-making challenges.

Applications and Use Cases

Marco-o1's advanced capabilities make it a promising model for applications across creative tasks, translation, and cultural understanding. In creative tasks, the model can significantly enhance content generation by leveraging its reasoning capabilities. Fine-tuned with Chain-of-Thought (CoT) data and Monte Carlo Tree Search (MCTS), Marco-o1 excels in producing creative solutions that explore multiple potential outcomes, enhancing idea generation, brainstorming, and conceptualization tasks. This can be particularly useful in industries like marketing, advertising, and entertainment, where generating diverse, impactful content is key.

In translation, Marco-o1 pushes the boundaries of conventional machine translation by applying its reasoning strategies to produce contextually accurate and culturally relevant translations. The model has demonstrated improved performance in translating idiomatic expressions and slang, such as converting the literal phrase “This shoe offers a stepping-on-poop sensation” into a more culturally nuanced “This shoe has a comfortable sole”. This ability to grasp colloquial nuances is vital for professional translation and transcreation, where it is not just the language but the cultural resonance of the message that matters.

Furthermore, Marco-o1’s potential extends to cultural understanding, where its innovative reasoning framework allows it to bridge cultural gaps in both content creation and communication. By tailoring translations or creative outputs to maintain emotional and cultural impact, Marco-o1 can support businesses and individuals in creating content that resonates deeply with diverse audiences, making it a valuable tool for international marketing and localization.

In sum, Marco-o1's blend of reasoning abilities and fine-tuning makes it a highly effective tool for tackling complex challenges in creative work, translation, and fostering a deeper understanding of cultural context.

Marco-o1’s advancements in reasoning and problem-solving are particularly valuable in tasks that require nuanced understanding, enabling it to excel in complex applications like creative content generation, translation, and strategic decision-making.

Creative Content Generation: For example, Marco-o1 is capable of producing highly creative content through its Monte Carlo Tree Search (MCTS) integration, which allows the model to explore a multitude of creative pathways before selecting the optimal solution. This is crucial in fields like marketing, where the creation of compelling, culturally resonant advertisements requires exploring various conceptualizations. In an advertising context, a brand message might be adjusted to resonate with different cultural backgrounds by utilizing Marco-o1’s ability to generate diverse outcomes. This method allows the model to suggest alternative messaging strategies based on cultural references and emotional resonance, ultimately leading to more personalized and impactful content.

Translation and Transcreation: In the realm of translation, Marco-o1 enhances understanding by applying reasoning methods that adapt translations to the emotional and cultural tone of the original content. A key challenge in machine translation involves maintaining the integrity of idiomatic expressions. For instance, translating a phrase like “Time is money” into languages with different cultural connotations requires not only linguistic accuracy but an understanding of the metaphorical significance behind it. Marco-o1’s use of Chain-of-Thought (CoT) fine-tuning ensures that the translation process isn’t just literal but rather captures the deeper meaning and emotional context. This approach ensures that translations are not only accurate but culturally sensitive and resonant.

Strategic Decision-Making: Marco-o1 also showcases its prowess in decision-making tasks that involve high levels of uncertainty or abstract reasoning. In scenarios where a company must decide between multiple strategies—say, for expanding into new markets—the model can simulate and evaluate the outcomes of each decision using MCTS. It calculates the most probable successful strategy by testing various actions, considering both short-term results and long-term implications. This allows Marco-o1 to provide optimal recommendations in scenarios like business strategy, risk analysis, or policy-making, where traditional models may fall short due to the complexity and variability of the decision space.

By integrating techniques like CoT and MCTS, Marco-o1 not only excels at understanding complex tasks but also adapts and improves its approach to problem-solving over time, making it a robust tool for applications that demand precision, creativity, and cultural sensitivity.

Advantages Over Existing Models

Marco-o1 significantly outperforms previous models in its ability to reason and tackle ambiguous problems, especially by leveraging its advanced reasoning mechanisms. Key advancements include the integration of Monte Carlo Tree Search (MCTS) with large language models (LLMs), which refines the model’s decision-making by simulating various reasoning pathways and selecting the most promising ones. This process enables Marco-o1 to efficiently explore complex problem spaces and address ambiguity by considering multiple potential solutions before settling on the most reliable one.

In addition, Marco-o1 uses Chain-of-Thought (CoT) fine-tuning, which helps the model break down problems into smaller, more manageable steps, allowing it to reason through complex, open-ended tasks effectively. The inclusion of reflection mechanisms further enhances its performance. By prompting the model to reassess its reasoning after each thought cycle ("Wait! Maybe I made some mistakes!"), it ensures that less confident answers are revisited, leading to higher accuracy in challenging situations.
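The reflection step amounts to appending a fixed prompt to the model's own reasoning and letting it continue. The sketch below shows that control flow only; `generate` is a hypothetical callable that maps a text prompt to a continuation, and `fake_model` is a stub used purely for demonstration.

```python
REFLECTION_PROMPT = "Wait! Maybe I made some mistakes!"


def add_reflection(thought: str, prompt: str = REFLECTION_PROMPT) -> str:
    """Append the reflection prompt on its own line after a thought."""
    return thought.rstrip() + "\n" + prompt


def reason_with_reflection(generate, question: str, rounds: int = 1) -> str:
    """Generate a first attempt, then prompt for reflection `rounds` times."""
    transcript = question + "\n" + generate(question)
    for _ in range(rounds):
        transcript = add_reflection(transcript)
        transcript += "\n" + generate(transcript)
    return transcript


def fake_model(prompt: str) -> str:
    # Stub standing in for a real LLM call: it "revises" once it sees the
    # reflection prompt in its context.
    return "revised answer" if REFLECTION_PROMPT in prompt else "draft answer"


transcript = reason_with_reflection(fake_model, "How many r's are in 'strawberry'?")
```

With a real model, the continuation after the reflection prompt is where a reconsidered answer would appear; nothing in this loop guarantees that the revision is better than the first attempt.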

These combined strategies allow Marco-o1 to handle ambiguous or vague problem domains, such as those encountered in natural language processing tasks like machine translation, where the meaning can vary based on context. For example, it has demonstrated superior performance in translating complex idiomatic expressions, a task where previous models often struggle due to a lack of nuanced understanding.

Overall, Marco-o1's ability to navigate ambiguity and reason effectively sets it apart from earlier models, particularly in tasks requiring open-ended solutions. The integration of advanced reasoning mechanisms like MCTS and reflection positions it as a formidable tool in both structured and unstructured problem-solving environments.

Marco-o1, developed by Alibaba, focuses on solving open-ended, complex problems, where models like OpenAI's o1 face limitations. Marco-o1 goes beyond standard problem-solving tasks by incorporating techniques such as Chain-of-Thought (CoT) fine-tuning and Monte Carlo Tree Search (MCTS). These techniques aim to improve reasoning ability and efficiency in ambiguous, real-world scenarios.

Accuracy Improvements

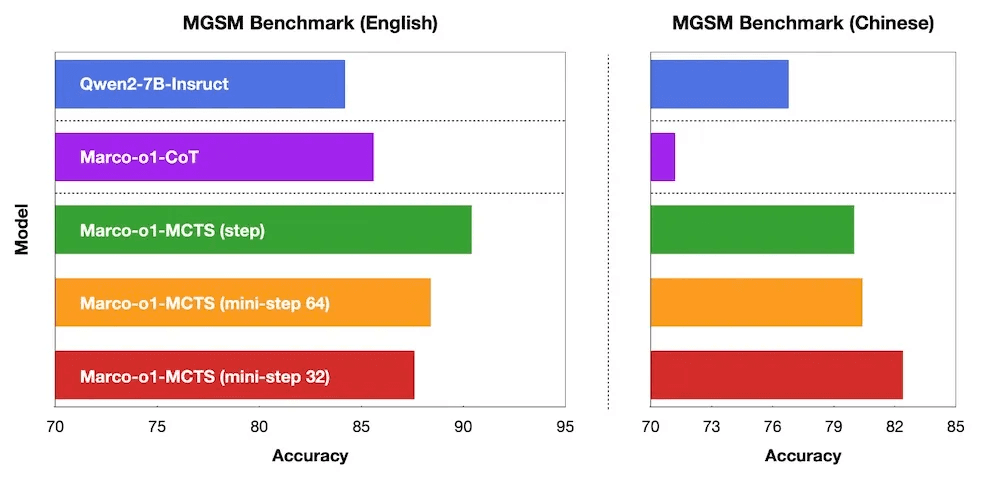

Marco-o1 introduces notable improvements in accuracy when dealing with complex reasoning tasks. Unlike its predecessors, which excel in highly structured problems, Marco-o1 excels in tasks where clear metrics are absent. This is achieved by leveraging advanced techniques like CoT, which allows the model to break down complex tasks into manageable steps, and MCTS, which optimizes decision-making in uncertain conditions. These methods enable Marco-o1 to make more informed and accurate decisions, especially in environments lacking clear-cut solutions.

Problem-Solving Efficiency

The efficiency of Marco-o1 in problem-solving stems from its ability to generalize across multiple domains. By focusing not only on well-defined tasks (like coding or mathematics) but also on creative and culturally nuanced problems, Marco-o1 can tackle a broader range of challenges. The integration of CoT fine-tuning enhances its adaptability, exposing the model to a diverse set of tasks so that it copes better with more complex scenarios. Moreover, Monte Carlo Tree Search directs the search toward the most promising candidate solutions, so that the additional inference-time computation is spent where it is most likely to help.

Handling Complex Scenarios

Marco-o1's ability to handle ambiguity and complex, real-world scenarios is a defining feature. By focusing on "open-ended" problems, where the solution space is not well-defined, the model shows greater resilience and versatility. This is especially relevant for tasks that require cultural understanding, creative thinking, or dealing with non-standardized problems. The enhanced reasoning mechanisms allow Marco-o1 to explore possible solutions iteratively and refine its approach, making it highly effective in domains where traditional AI models would struggle.

In conclusion, Marco-o1 represents a significant step in AI's ability to handle complex, open-ended tasks. Its improved accuracy, enhanced problem-solving efficiency, and better handling of ambiguous scenarios make it a strong competitor to other large reasoning models like OpenAI's o1.

Challenges and Future Directions

The Marco-o1 model faces a number of challenges related to scalability and generalizability, particularly when applied across a wide range of domains. One key issue is its ability to adapt to diverse, real-world applications outside the specific training environment. For example, despite its advanced reasoning capabilities, Marco-o1 may encounter difficulties in handling domain-specific knowledge that was not part of its initial training data. This problem is common across large models, which often struggle with areas that require fine-tuned expertise or a deep understanding of specialized topics, such as medical or legal fields.

Moreover, scalability remains a concern when deploying Marco-o1 across different platforms and infrastructures. While it shows promise in high-performance environments with ample computational resources, its performance may degrade on less powerful devices or cloud systems with limited memory and processing power. As the model scales, maintaining low latency and handling larger datasets becomes a challenge, especially when it comes to fine-tuning or retraining on new, dynamic data.

Additionally, generalizability across domains is also tested when models like Marco-o1 are deployed in environments that require real-time interaction with changing data. The model may need additional layers of customization or retraining to maintain its efficacy. This is especially problematic when considering multi-modal scenarios where the model needs to integrate and synthesize information from diverse data types, such as text, images, and videos, requiring more robust architectures and training protocols.

To overcome these hurdles, continued improvements in model architecture and training strategies, including better domain adaptation techniques and more efficient scaling methods, are necessary for Marco-o1's broader applicability.

The future potential of Alibaba’s Marco-o1 model appears highly promising, especially when considering its evolving capabilities in real-world applications. With its advanced integration of reasoning mechanisms, Marco-o1 represents a new frontier in large reasoning models (LRMs). Its use of Monte Carlo Tree Search (MCTS) for guiding the reasoning process, coupled with extensive datasets designed to refine its structured and nuanced decision-making, makes it well-suited for complex problem-solving tasks across diverse domains, from machine translation to autonomous decision-making.

One of the key areas where Marco-o1 shows future promise is in language translation. While traditional translation tools often struggle with colloquial expressions or context-heavy language, Marco-o1 has demonstrated an ability to accurately translate phrases that require an understanding of local idioms or complex contexts, outperforming existing models like Google Translate in some cases. This capability opens doors for more advanced, context-aware translation systems that can bridge cultural and linguistic gaps with greater precision, an asset in both international business and real-time communication technologies.

Moreover, Marco-o1’s architecture is primed for multimodal applications, where reasoning over multiple types of data (e.g., images, text, sound) will be crucial. Its approach to combining reasoning with reinforcement learning, and the upcoming integration of Reward Models like Outcome and Process Reward Modeling, suggests that Marco-o1 could significantly improve autonomous systems like self-driving vehicles or robotic assistants. These systems require not only accurate data interpretation but also the ability to make high-confidence decisions in uncertain environments, a core strength of Marco-o1’s decision-making process.

Further, in sectors such as healthcare, where AI is increasingly leveraged for diagnostics and treatment planning, Marco-o1’s ability to reason through complex scenarios and handle ambiguous or partial data could be revolutionary. The integration of large-scale reasoning with domain-specific datasets could enable Marco-o1 to assist in diagnosing rare diseases, creating personalized treatment plans, and even predicting patient outcomes based on historical data.

In conclusion, the real-world applications of Marco-o1 are far-reaching. Its advanced reasoning capabilities combined with cutting-edge techniques in model optimization position it to potentially transform industries that rely on high-stakes, nuanced decision-making, such as legal, finance, and healthcare. As further optimizations are made—particularly in reinforcement learning and the integration of multimodal capabilities—the model could redefine how AI interacts with human knowledge, reasoning, and decision-making in complex scenarios.

Conclusion

Marco-o1 is an advanced language model developed by Alibaba that significantly pushes the boundaries of AI’s reasoning abilities. A standout feature of Marco-o1 is its integration of Monte Carlo Tree Search (MCTS), which enhances its decision-making process by simulating various potential outcomes, allowing it to reason in a more structured and context-aware manner than traditional models. This addition helps Marco-o1 navigate complex problem-solving tasks, making it particularly effective in domains like machine translation and autonomous decision-making, where nuanced understanding is critical.

Additionally, extending Marco-o1 to multimodal inputs, combining text, images, and other forms of data, would make it a more versatile model. Such a capability would matter for applications in areas such as healthcare, autonomous systems, and real-time communication, where a model must reason across multiple data types to make informed decisions. The planned use of reinforcement learning and reward models, such as Outcome and Process Reward Modeling, could further strengthen its potential for real-world applications, particularly in unpredictable environments where fast, reliable decision-making is crucial.

In conclusion, Marco-o1's advancements represent a significant step forward in AI's ability to reason and make decisions in a more human-like manner. Its capabilities are pushing the envelope in various sectors, from healthcare diagnostics to multimodal AI systems, ensuring that AI models not only perform tasks but also understand, interpret, and act on complex data in real-world settings. These enhancements set the stage for Marco-o1 to play a pivotal role in the future of AI-driven technologies.

The innovations embedded within the Marco-o1 model, such as its reasoning capabilities powered by Monte Carlo Tree Search (MCTS) and advanced reinforcement learning strategies, are setting the stage for a revolution in AI's capacity to tackle complex problem-solving tasks. These innovations go beyond merely answering questions or performing specific tasks; they push the model towards a more dynamic, context-aware decision-making process. With Marco-o1, AI can better navigate environments that require multi-step reasoning, akin to human-like thought processes, while considering both short-term outcomes and long-term consequences. This enhancement significantly impacts industries like autonomous driving, real-time medical diagnostics, and legal analysis, where decisions need to be made under uncertainty and often with incomplete or ambiguous data.

Furthermore, the prospect of multimodal reasoning, in which a model like Marco-o1 would process and integrate data from different modalities such as text, images, and sensory input, opens new possibilities for creative problem-solving. AI systems with this capability could handle complex tasks that require the synthesis of information across diverse formats, offering new approaches to creative fields such as art generation, design, and multimedia content creation. As AI systems gain the ability to reason through multi-faceted, real-world problems and synthesize diverse inputs, the potential for creative AI to contribute to artistic and scientific endeavors expands significantly.

These advancements signal a broader shift in AI's capabilities, highlighting a movement toward more generalized intelligence. Rather than being confined to narrow, pre-programmed tasks, future AI models like Marco-o1 offer the potential for highly adaptable, autonomous problem-solvers that can continuously learn, refine their decision-making processes, and create novel solutions to complex challenges. This evolution is not only transforming industries but also reimagining what AI can achieve in terms of problem-solving and creative contributions, ultimately leading us towards AI systems capable of handling the intricacies of human-level cognition in both structured and open-ended domains.
